This is good suggestion. 👍 But the computational calculation is very higher if there is many validators.

In the above steps, a single candidate may assume multiple roles. If you want to exclude such a case, you can remove the winning candidate from the candidates. In this case, the thresholds must be recalculated because the total S of the population changes.

As above your mention, the selected validator is less than V+1. In this case, is the process of this selecting validators repeated?

The scheme of selecting a Proposer and Validators based on PoS can be considered as random sampling from a group with a discrete probability distribution.

S: the total amount of issued stakes_i: the stake amount held by a candidatei(Σ s_i = S)Random Sampling based on Categorical Distribution

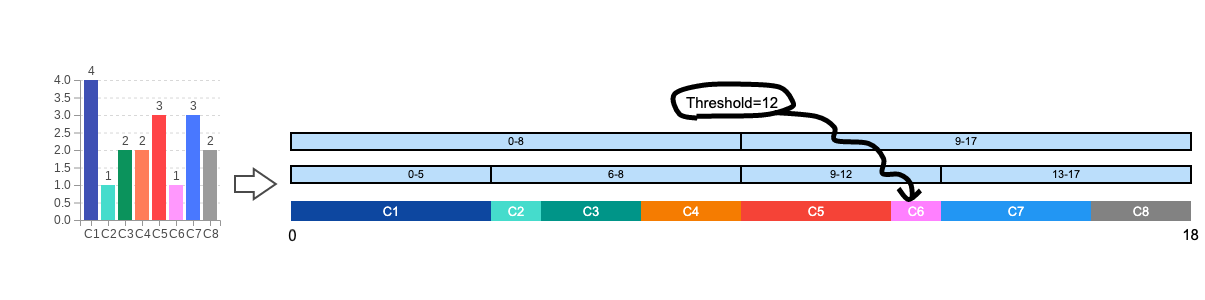

For simplicity, here is an example in which only a Proposer is selected from candidates with winning probability of

p_i = s_i / S.First, create a pseudo-random number generator using

vrf_hashas a seed, and determine the thresholdthresholdfor the Proposer. This random number algorithm should be deterministic and portable to other programming languages, but need not be cryptographic.Second, to make the result deterministic, we retrieve the candidates sorted in descending stake order.

Finally, find the candidate hit by the arrow

thresholdof Proposer.This is a common way of random sampling according to a categorical distribution by using a uniform random number. Similar to throwing an arrow on a spinning darts whose width is proportional to the probability of each item.

Selecting of a Consensus Group

By applying the above, we can select a consensus group consisting of one Proposer and

VValidators. This is equivalent to performingV+1categorical trials, which is the same as a random sampling model with a multinomial distribution. It's possible to illustrate this notion using a multinomial distribution demo I created in the past. This is equivalent to a model that selects a Proposer and Validators whenKis the number of candidates andn=V+1.As an example of intuitive code, I expand categorical sampling to multinomial.

In the above steps, a single candidate may assume multiple roles. If you want to exclude such a case, you can remove the winning candidate from the

candidates. In this case, thethresholdsmust be recalculated because the totalSof the population changes.Computational Complexity

The computational complexity is mainly affected by the number of candidates N. There is room for improvement by remembering the list of candidates that have been sorted by the stake.