OpenOOD: Benchmarking Generalized OOD Detection

| :exclamation: When using OpenOOD in your research, it is vital to cite both the OpenOOD benchmark (versions 1 and 1.5) and the individual works that have contributed to your research. Accurate citation acknowledges the efforts and contributions of all researchers involved. For example, if your work involves the NINCO benchmark within OpenOOD, please include a citation for NINCO in addition to OpenOOD. |

|---|

This repository reproduces representative methods within the Generalized Out-of-Distribution Detection Framework, aiming to make a fair comparison across methods that were initially developed for anomaly detection, novelty detection, open set recognition, and out-of-distribution detection.

This codebase is still under construction.

Comments, issues, contributions, and collaborations are all welcome!

|

|---|

| Timeline of the methods that OpenOOD supports. More methods are included as OpenOOD iterates. |

Updates

- 17 Aug, 2024: :bulb::bulb: Wondering how OOD detection evolves and what new research topics could emerge in the new era of multimodal LLMs? Don't hesitate to check out our recent work Unsolvable Problem Detection: Evaluating Trustworthiness of Vision Language Models and Generalized Out-of-Distribution Detection and Beyond in Vision Language Model Era: A Survey.

- 27 Oct, 2023: A short version of OpenOOD v1.5 is accepted to NeurIPS 2023 Workshop on Distribution Shifts as an oral presentation. You may want to check out our presentation slides.

- 25 Sept, 2023: OpenOOD now supports OOD detection with foundation models including zero-shot CLIP and DINOv2 linear probe. Check out the example evaluation script here.

- 16 June, 2023: :boom::boom: We are releasing OpenOOD v1.5, which includes the following exciting updates. A detailed changelog is provided in the Wiki. An overview of the supported methods and benchmarks (with paper links) is available here.

  - A new report which provides benchmarking results on ImageNet and for full-spectrum detection.

  - A unified, easy-to-use evaluator that allows evaluation by simply creating an evaluator instance and calling its functions. Check out this colab tutorial!

  - A live leaderboard that tracks the state-of-the-art of this field.

- 14 October, 2022: OpenOOD v1.0 is accepted to NeurIPS 2022. Check the report here.

- 14 June, 2022: We release v0.5.

- 12 April, 2022: Primary release to support Full-Spectrum OOD Detection.

Contributing

We appreciate all contributions to improve OpenOOD. We sincerely welcome community users to participate in these projects.

- For contributing to this repo, please refer to CONTRIBUTING.md for the guidelines.

- For adding your method to our leaderboard, simply open an issue where you will see the template that has detailed instructions.

FAQ

`APS_mode` means Automatic (hyper)Parameter Searching mode, which enables the model to validate all the hyperparameters in the sweep list based on the validation ID/OOD set. The default value is False. Check here for an example.
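As a rough illustration of what such a search does (a sketch under stated assumptions, not OpenOOD's implementation): each candidate in a sweep list is scored on a small validation ID/OOD split, and the candidate with the best AUROC is kept. The helper names, the clipped-sum score, and the toy feature vectors below are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Features = Sequence[float]


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Fraction of (ID, OOD) pairs where the ID sample scores higher; ties count 0.5."""
    wins = 0.0
    for s_id in id_scores:
        for s_ood in ood_scores:
            if s_id > s_ood:
                wins += 1.0
            elif s_id == s_ood:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))


def clipped_sum_score(features: Features, clip: float) -> float:
    """Hypothetical score: sum of activations after clipping each one at `clip`."""
    return sum(min(f, clip) for f in features)


def search_hyperparameter(
    sweep: Sequence[float],
    score_fn: Callable[[Features, float], float],
    val_id: List[Features],
    val_ood: List[Features],
) -> Tuple[float, float]:
    """Try every value in the sweep list and keep the one with the best validation AUROC."""
    best_value, best_auroc = sweep[0], -1.0
    for value in sweep:
        id_scores = [score_fn(x, value) for x in val_id]
        ood_scores = [score_fn(x, value) for x in val_ood]
        current = auroc(id_scores, ood_scores)
        if current > best_auroc:  # strict: the earliest best value wins on ties
            best_value, best_auroc = value, current
    return best_value, best_auroc


# Toy validation features (made up for illustration only).
VAL_ID = [[2.0, 2.0, 0.0, 0.0], [1.5, 1.5, 1.5, 0.0]]
VAL_OOD = [[6.0, 0.0, 0.0, 0.0], [1.0, 1.0, 0.5, 0.0]]
SWEEP = [10.0, 2.0, 1.0]

best_clip, best_auroc = search_hyperparameter(SWEEP, clipped_sum_score, VAL_ID, VAL_OOD)
print(best_clip, best_auroc)  # prints: 2.0 1.0
```

With the search turned off, a method would simply run with whatever single value its configuration specifies.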

Get Started

v1.5 (up-to-date)

Installation

OpenOOD now supports installation via pip.

pip install git+https://github.com/Jingkang50/OpenOOD

pip install libmr

# optional, if you want to use CLIP

# pip install git+https://github.com/openai/CLIP.git

Data

If you only use our evaluator, the benchmarks for evaluation will be automatically downloaded by the evaluator (again check out this tutorial). If you would like to also use OpenOOD for training, you can get all data with our downloading script. Note that ImageNet-1K training images should be downloaded from its official website.

Pre-trained checkpoints

OpenOOD v1.5 focuses on 4 ID datasets, and we release pre-trained models accordingly.

- CIFAR-10 [Google Drive]: ResNet-18 classifiers trained with cross-entropy loss from 3 training runs.

- CIFAR-100 [Google Drive]: ResNet-18 classifiers trained with cross-entropy loss from 3 training runs.

- ImageNet-200 [Google Drive]: ResNet-18 classifiers trained with cross-entropy loss from 3 training runs.

- ImageNet-1K [Google Drive]: ResNet-50 classifiers including 1) the one from torchvision, 2) the ones that are trained by us with specific methods such as MOS, CIDER, and 3) the official checkpoints of data augmentation methods such as AugMix, PixMix.

Again, these checkpoints can be downloaded with the downloading script here.
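Since three checkpoints from independent training runs are provided per dataset, a result can be summarized across runs rather than reported from a single model. A minimal sketch, assuming one metric value per run; the numbers are placeholders rather than real results, and `summarize_runs` is a hypothetical helper. It uses the sample standard deviation, which is a choice made here; a population estimate would work the same way.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def summarize_runs(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of a metric across training runs."""
    return mean(values), stdev(values)


# Placeholder AUROC values (%) for three hypothetical runs; not real results.
run_aurocs = [90.0, 91.0, 92.0]
avg, std = summarize_runs(run_aurocs)
print(f"{avg:.2f} +/- {std:.2f}")
```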

Our codebase accesses the datasets from ./data/ and pretrained models from ./results/checkpoints/ by default.

├── ...

├── data

│ ├── benchmark_imglist

│ ├── images_classic

│ └── images_largescale

├── openood

├── results

│ ├── checkpoints

│ └── ...

├── scripts

├── main.py

├── ...

Training and evaluation scripts

We provide training and evaluation scripts for all the methods we support in the scripts folder.

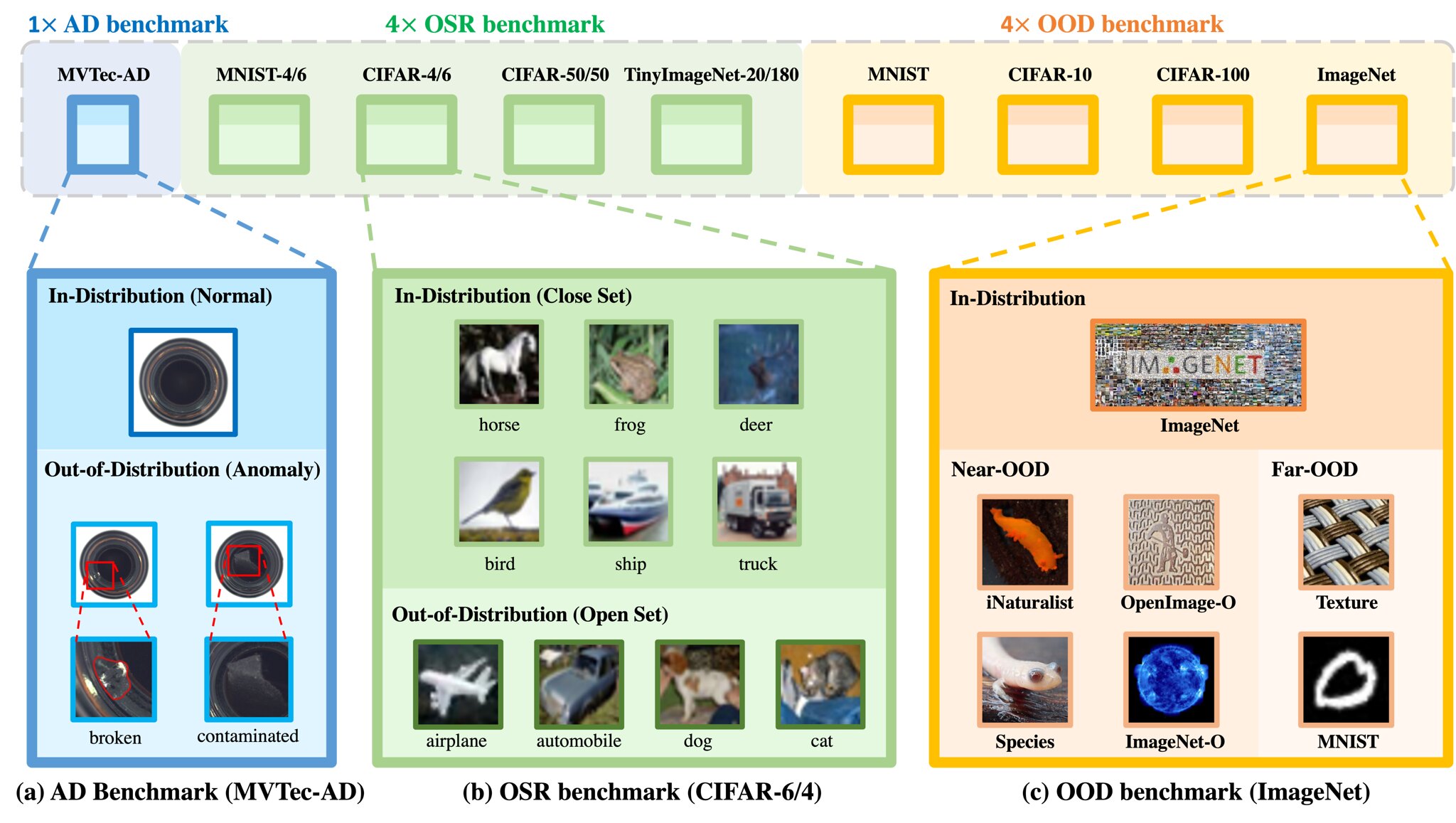

Supported Benchmarks (10)

This part lists all the benchmarks we support. Feel free to include more.

Anomaly Detection (1)

> - [x] [MVTec-AD](https://www.mvtec.com/company/research/datasets/mvtec-ad)

Open Set Recognition (4)

> - [x] [MNIST-4/6]()
> - [x] [CIFAR-4/6]()
> - [x] [CIFAR-40/60]()
> - [x] [TinyImageNet-20/180]()

Out-of-Distribution Detection (6)

> - [x] [BIMCV (A COVID X-Ray Dataset)]()
> > Near-OOD: `CT-SCAN`, `X-Ray-Bone`;
> > Far-OOD: `MNIST`, `CIFAR-10`, `Texture`, `Tiny-ImageNet`;
> - [x] [MNIST]()
> > Near-OOD: `NotMNIST`, `FashionMNIST`;
> > Far-OOD: `Texture`, `CIFAR-10`, `TinyImageNet`, `Places365`;
> - [x] [CIFAR-10]()
> > Near-OOD: `CIFAR-100`, `TinyImageNet`;
> > Far-OOD: `MNIST`, `SVHN`, `Texture`, `Places365`;
> - [x] [CIFAR-100]()
> > Near-OOD: `CIFAR-10`, `TinyImageNet`;
> > Far-OOD: `MNIST`, `SVHN`, `Texture`, `Places365`;
> - [x] [ImageNet-200]()
> > Near-OOD: `SSB-hard`, `NINCO`;
> > Far-OOD: `iNaturalist`, `Texture`, `OpenImage-O`;
> > Covariate-Shifted ID: `ImageNet-C`, `ImageNet-R`, `ImageNet-v2`;
> - [x] [ImageNet-1K]()
> > Near-OOD: `SSB-hard`, `NINCO`;
> > Far-OOD: `iNaturalist`, `Texture`, `OpenImage-O`;
> > Covariate-Shifted ID: `ImageNet-C`, `ImageNet-R`, `ImageNet-v2`, `ImageNet-ES`;

Note that OpenOOD v1.5 emphasizes and focuses on the last 4 benchmarks for OOD detection.
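The near-/far-OOD split can be pictured as a per-group average over OOD datasets. The sketch below takes the CIFAR-10 groups from the list above and averages a per-dataset AUROC within each group. The confidence scores are made-up placeholders, and the helper names (`auroc`, `evaluate_groups`) are hypothetical rather than part of OpenOOD's API.

```python
from statistics import mean
from typing import Dict, List, Sequence

# Near-/far-OOD groups for the CIFAR-10 benchmark, as listed in this README.
CIFAR10_OOD_GROUPS: Dict[str, List[str]] = {
    "near": ["CIFAR-100", "TinyImageNet"],
    "far": ["MNIST", "SVHN", "Texture", "Places365"],
}


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Fraction of (ID, OOD) pairs where the ID sample scores higher; ties count 0.5."""
    wins = 0.0
    for s_id in id_scores:
        for s_ood in ood_scores:
            if s_id > s_ood:
                wins += 1.0
            elif s_id == s_ood:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))


def evaluate_groups(
    id_scores: Sequence[float],
    ood_scores_by_dataset: Dict[str, Sequence[float]],
    groups: Dict[str, List[str]],
) -> Dict[str, dict]:
    """Compute AUROC per OOD dataset, then average within each group."""
    report = {}
    for group, datasets in groups.items():
        per_dataset = {
            name: auroc(id_scores, ood_scores_by_dataset[name]) for name in datasets
        }
        report[group] = {
            "per_dataset": per_dataset,
            "mean": mean(list(per_dataset.values())),
        }
    return report


# Placeholder confidence scores (higher means "more in-distribution"); not real results.
ID_SCORES = [0.9, 0.8, 0.7, 0.6]
OOD_SCORES = {
    "CIFAR-100": [0.85, 0.5],
    "TinyImageNet": [0.65, 0.4],
    "MNIST": [0.1, 0.2],
    "SVHN": [0.3, 0.2],
    "Texture": [0.5, 0.1],
    "Places365": [0.55, 0.3],
}

report = evaluate_groups(ID_SCORES, OOD_SCORES, CIFAR10_OOD_GROUPS)
print(report["near"]["mean"], report["far"]["mean"])  # prints: 0.75 1.0
```

In this toy setup the near-OOD datasets overlap more with the ID scores, so their group average comes out lower than the far-OOD one.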

Supported Backbones (6)

This part lists all the backbones we will support in our codebase, including CNN-based and Transformer-based models. Backbones like ResNet-50 and Transformer have ImageNet-1K/22K pretrained models.

CNN-based Backbones (4)

> - [x] [LeNet-5](http://yann.lecun.com/exdb/lenet/)
> - [x] [ResNet-18](https://openaccess.thecvf.com/content_cvpr_2016/html/He_Deep_Residual_Learning_CVPR_2016_paper.html)
> - [x] [WideResNet-28](https://arxiv.org/abs/1605.07146)
> - [x] [ResNet-50](https://openaccess.thecvf.com/content_cvpr_2016/html/He_Deep_Residual_Learning_CVPR_2016_paper.html) ([BiT](https://github.com/google-research/big_transfer))

Transformer-based Architectures (2)

> - [x] [ViT](https://github.com/google-research/vision_transformer) ([DeiT](https://github.com/facebookresearch/deit))
> - [x] [Swin Transformer](https://openaccess.thecvf.com/content/ICCV2021/html/Liu_Swin_Transformer_Hierarchical_Vision_Transformer_Using_Shifted_Windows_ICCV_2021_paper.html)

Supported Methods (60+)

This part lists all the methods we include in this codebase. As of v1.5, we support more than 50 popular methods for generalized OOD detection in total.

All the supported methodologies can be placed in the following four categories.

We also mark our supported methodologies with the tags `preprocess`, `training`, `postprocess`, and `extradata` if they have special designs in the corresponding steps, compared to the standard classifier training process.
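To make the postprocess tag concrete: a post-hoc method leaves the trained classifier untouched and only changes how its outputs are turned into an OOD score. A minimal sketch, assuming the maximum softmax probability as the score and a hypothetical decision threshold. The logits are toy values, and none of the function names below come from OpenOOD.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one logit vector."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def msp_postprocess(logits: Sequence[float]) -> Tuple[int, float]:
    """Return (predicted class, confidence), where confidence is the max softmax probability."""
    probs = softmax(logits)
    conf = max(probs)
    return probs.index(conf), conf


def is_ood(logits: Sequence[float], threshold: float = 0.5) -> bool:
    """Flag a sample as OOD when its confidence falls below a (hypothetical) threshold."""
    _, conf = msp_postprocess(logits)
    return conf < threshold


# Toy logits: one peaked (looks in-distribution), one nearly flat (looks OOD).
peaked = [8.0, 0.0, 0.0]
flat = [0.1, 0.0, 0.05]

print(msp_postprocess(peaked)[0], is_ood(peaked), is_ood(flat))  # prints: 0 False True
```

A method that also carries the training or preprocess tag would additionally change how the classifier is fit or how inputs are transformed before this step.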

Anomaly Detection (5)

> - [x] [](https://github.com/lukasruff/Deep-SVDD-PyTorch) ![training] ![postprocess]
> - [x] []() ![training] ![postprocess]
> - [x] [](https://github.com/lukasruff/Deep-SVDD-PyTorch) ![training] ![postprocess]
> - [x] [](https://github.com/lukasruff/Deep-SVDD-PyTorch) ![training] ![postprocess]
> - [x] [](https://github.com/lukasruff/Deep-SVDD-PyTorch) ![training] ![postprocess]

Open Set Recognition (3)

> Post-Hoc Methods (2):
> - [x] [](https://github.com/13952522076/Open-Set-Recognition) ![postprocess]
> - [x] [](https://github.com/aimerykong/OpenGAN/tree/main/utils) ![postprocess]
>
> Training Methods (1):
> - [x] [](https://github.com/iCGY96/ARPL) ![training] ![postprocess]

Out-of-Distribution Detection (41)

> Post-Hoc Methods (23):
> - [x] [](https://openreview.net/forum?id=Hkg4TI9xl)
> - [x] [](https://openreview.net/forum?id=H1VGkIxRZ) ![postprocess]
> - [x] [](https://papers.nips.cc/paper/2018/hash/abdeb6f575ac5c6676b747bca8d09cc2-Abstract.html) ![postprocess]
> - [x] [](https://papers.nips.cc/paper/2018/hash/abdeb6f575ac5c6676b747bca8d09cc2-Abstract.html) ![postprocess]
> - [x] [](https://github.com/VectorInstitute/gram-ood-detection) ![postprocess]
> - [x] [](https://github.com/wetliu/energy_ood) ![postprocess]
> - [x] [](https://arxiv.org/abs/2106.09022) ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/gradnorm_ood) ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/react) ![postprocess]
> - [x] [](https://github.com/hendrycks/anomaly-seg) ![postprocess]
> - [x] [](https://github.com/hendrycks/anomaly-seg) ![postprocess]
> - [x] []() ![postprocess]
> - [x] [](https://ooddetection.github.io/) ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/knn-ood) ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/dice) ![postprocess]
> - [x] [](https://github.com/KingJamesSong/RankFeat) ![postprocess]
> - [x] [](https://andrijazz.github.io/ash) ![postprocess]
> - [x] [](https://github.com/zjs975584714/SHE) ![postprocess]
> - [x] [](https://openaccess.thecvf.com/content/CVPR2023/papers/Liu_GEN_Pushing_the_Limits_of_Softmax-Based_Out-of-Distribution_Detection_CVPR_2023_paper.pdf) ![postprocess]
> - [x] [](https://arxiv.org/abs/2309.14888) ![postprocess]
> - [x] [](https://arxiv.org/abs/2301.12321) ![postprocess]
> - [x] [](https://github.com/kai422/SCALE) ![postprocess]
> - [x] [](https://github.com/litianliu/fDBD-OOD) ![postprocess]
>
> Training Methods (14):
> - [x] [](https://github.com/uoguelph-mlrg/confidence_estimation) ![preprocess] ![training]
> - [x] [](https://github.com/hendrycks/ss-ood) ![preprocess] ![training]
> - [x] [](https://github.com/guyera/Generalized-ODIN-Implementation) ![training] ![postprocess]
> - [x] [](https://github.com/alinlab/CSI) ![preprocess] ![training] ![postprocess]
> - [x] [](https://github.com/inspire-group/SSD) ![training] ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/large_scale_ood) ![training]
> - [x] [](https://github.com/deeplearning-wisc/vos) ![training] ![postprocess]
> - [x] [](https://github.com/hongxin001/logitnorm_ood) ![training] ![preprocess]
> - [x] [](https://github.com/deeplearning-wisc/cider) ![training] ![postprocess]
> - [x] [](https://github.com/deeplearning-wisc/npos) ![training] ![postprocess]
> - [x] [](https://github.com/sudarshanregmi/T2FNorm) ![training]
> - [x] [](https://github.com/kai422/SCALE) ![training]
> - [x] [](https://github.com/jeff024/PALM) ![training]
> - [x] [](https://github.com/sudarshanregmi/ReweightOOD) ![training] ![postprocess]
>
> Training With Extra Data (4):
> - [x] [](https://openreview.net/forum?id=HyxCxhRcY7) ![extradata] ![training]
> - [x] [](https://openaccess.thecvf.com/content_ICCV_2019/papers/Yu_Unsupervised_Out-of-Distribution_Detection_by_Maximum_Classifier_Discrepancy_ICCV_2019_paper.pdf) ![extradata] ![training]
> - [x] [](https://openaccess.thecvf.com/content/ICCV2021/html/Yang_Semantically_Coherent_Out-of-Distribution_Detection_ICCV_2021_paper.html) ![extradata] ![training]
> - [x] [](https://openaccess.thecvf.com/content/WACV2023/html/Zhang_Mixture_Outlier_Exposure_Towards_Out-of-Distribution_Detection_in_Fine-Grained_Environments_WACV_2023_paper.html) ![extradata] ![training]

Method Uncertainty (4)

> - [x] []() ![training] ![postprocess]
> - [x] []() ![training]
> - [x] [](https://proceedings.mlr.press/v70/guo17a.html) ![postprocess]
> - [x] []() ![training] ![postprocess]

Data Augmentation (8)

> - [x] []() ![preprocess]
> - [x] []() ![preprocess]
> - [x] [](https://openreview.net/forum?id=Bygh9j09KX) ![preprocess]
> - [x] [](https://openaccess.thecvf.com/content_CVPRW_2020/html/w40/Cubuk_Randaugment_Practical_Automated_Data_Augmentation_With_a_Reduced_Search_Space_CVPRW_2020_paper.html) ![preprocess]
> - [x] [](https://github.com/google-research/augmix) ![preprocess]
> - [x] [](https://github.com/hendrycks/imagenet-r) ![preprocess]
> - [x] [](https://openaccess.thecvf.com/content/CVPR2022/html/Hendrycks_PixMix_Dreamlike_Pictures_Comprehensively_Improve_Safety_Measures_CVPR_2022_paper.html) ![preprocess]
> - [x] [](https://github.com/FrancescoPinto/RegMixup) ![preprocess]

Contributors

Citation

If you find our repository useful for your research, please consider citing these papers:

# v1.5 report

@article{zhang2023openood,

title={OpenOOD v1.5: Enhanced Benchmark for Out-of-Distribution Detection},

author={Zhang, Jingyang and Yang, Jingkang and Wang, Pengyun and Wang, Haoqi and Lin, Yueqian and Zhang, Haoran and Sun, Yiyou and Du, Xuefeng and Li, Yixuan and Liu, Ziwei and Chen, Yiran and Li, Hai},

journal={arXiv preprint arXiv:2306.09301},

year={2023}

}

# v1.0 report

@article{yang2022openood,

author = {Yang, Jingkang and Wang, Pengyun and Zou, Dejian and Zhou, Zitang and Ding, Kunyuan and Peng, Wenxuan and Wang, Haoqi and Chen, Guangyao and Li, Bo and Sun, Yiyou and Du, Xuefeng and Zhou, Kaiyang and Zhang, Wayne and Hendrycks, Dan and Li, Yixuan and Liu, Ziwei},

title = {OpenOOD: Benchmarking Generalized Out-of-Distribution Detection},

year = {2022}

}

# full-spectrum OOD detection

@article{yang2022fsood,

title = {Full-Spectrum Out-of-Distribution Detection},

author = {Yang, Jingkang and Zhou, Kaiyang and Liu, Ziwei},

journal={arXiv preprint arXiv:2204.05306},

year = {2022}

}

# generalized OOD detection framework & survey

@article{yang2021oodsurvey,

title={Generalized Out-of-Distribution Detection: A Survey},

author={Yang, Jingkang and Zhou, Kaiyang and Li, Yixuan and Liu, Ziwei},

journal={arXiv preprint arXiv:2110.11334},

year={2021}

}

# OOD benchmarks

# NINCO

@inproceedings{bitterwolf2023ninco,

title={In or Out? Fixing ImageNet Out-of-Distribution Detection Evaluation},

author={Julian Bitterwolf and Maximilian Mueller and Matthias Hein},

booktitle={ICML},

year={2023},

url={https://proceedings.mlr.press/v202/bitterwolf23a.html}

}

# SSB

@inproceedings{vaze2021open,

title={Open-Set Recognition: A Good Closed-Set Classifier is All You Need},

author={Vaze, Sagar and Han, Kai and Vedaldi, Andrea and Zisserman, Andrew},

booktitle={ICLR},

year={2022}

}