{Explore | Examine | Expose | Explain} your model with the explabox!


The explabox aims to support data scientists and machine learning (ML) engineers in explaining, testing and documenting AI/ML models, developed in-house or acquired externally. The explabox turns your ingestibles (AI/ML model and/or dataset) into digestibles (statistics, explanations or sensitivity insights)!

The explabox can be used to:

- Explore: describe aspects of the model and data.

- Examine: calculate quantitative metrics on how the model performs.

- Expose: see model sensitivity to random inputs (safety), test model generalizability (e.g. sensitivity to typos; robustness), and see the effect of adjusting attributes in the inputs (e.g. swapping male pronouns for female pronouns; fairness), both for the dataset as a whole (global) and for individual instances (local).

- Explain: use XAI methods for explaining the whole dataset (global), model behavior on the dataset (global), and specific predictions/decisions (local).
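To make the fairness idea under Expose concrete, here is a minimal sketch of a counterfactual check: swap male pronouns for female pronouns and count how often the prediction changes. It is not the explabox's implementation. `swap_pronouns`, `prediction_flip_rate`, the small pronoun mapping and the keyword-based `toy_model` are hypothetical stand-ins for a real perturbation and a real model.

```python
import re
from typing import Callable, Iterable

# Hypothetical, deliberately small mapping from male to female pronouns
PRONOUN_SWAP = {"he": "she", "him": "her", "his": "her", "himself": "herself"}


def swap_pronouns(text: str) -> str:
    """Replace male pronouns by female ones, keeping an initial capital."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = PRONOUN_SWAP[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped

    # \b ensures whole words only, so "helped" is left untouched
    pattern = r"\b(" + "|".join(PRONOUN_SWAP) + r")\b"
    return re.sub(pattern, replace, text, flags=re.IGNORECASE)


def prediction_flip_rate(model: Callable[[str], str], texts: Iterable[str]) -> float:
    """Fraction of texts whose predicted label changes after swapping pronouns."""
    texts = list(texts)
    flips = sum(model(t) != model(swap_pronouns(t)) for t in texts)
    return flips / len(texts) if texts else 0.0


def toy_model(text: str) -> str:
    # Hypothetical biased classifier: it reacts to the word "she"
    return "negative" if "she" in text.lower().split() else "positive"


reviews = ["He said it helped him a lot.", "His symptoms got worse.", "It works."]
print(swap_pronouns(reviews[0]))  # She said it helped her a lot.
print(prediction_flip_rate(toy_model, reviews))
```

A flip rate above zero means the model is not invariant to the swapped attribute. Note that the mapping is not one-to-one ("him" and "his" both become "her"), so swapping back is ambiguous.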

A number of experiments in the explabox can also be used to provide transparency and explanations to stakeholders, such as end-users or clients.

:information_source: The explabox currently only supports natural language text as a modality. In the future, we intend to extend to other modalities.

© National Police Lab AI (NPAI), 2022

Quick tour

The explabox is distributed on PyPI. To use the package with Python, install it (pip install explabox), import your data and model and wrap them in the Explabox:

>>> from explabox import import_data, import_model
>>> data = import_data('./drugsCom.zip', data_cols='review', label_cols='rating')
>>> model = import_model('model.onnx', label_map={0: 'negative', 1: 'neutral', 2: 'positive'})
>>> from explabox import Explabox
>>> box = Explabox(data=data,
...                model=model,
...                splits={'train': 'drugsComTrain.tsv', 'test': 'drugsComTest.tsv'})

Then .explore, .examine, .expose and .explain your model:

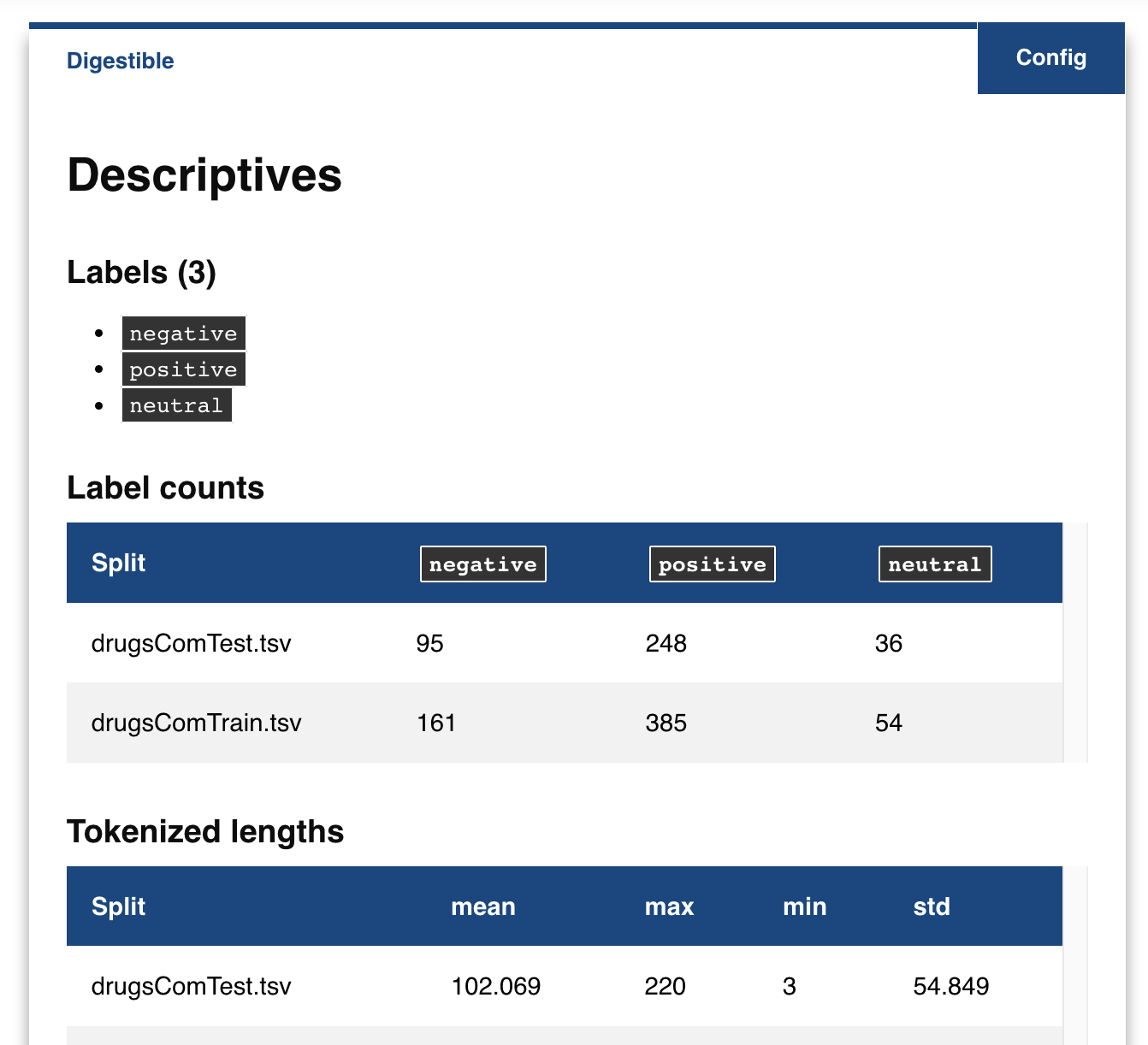

>>> # Explore the descriptive statistics for each split
>>> box.explore()
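The explabox computes these statistics for you. Purely to illustrate what descriptive statistics per split can include, here is a pure-Python sketch assuming each split is a list of (text, label) pairs. The `describe` helper and the toy data are hypothetical and do not reflect the exact statistics the explabox reports.

```python
from collections import Counter
from statistics import mean

# Hypothetical toy splits of (review, label) pairs
splits = {
    "train": [("Works great", "positive"), ("Made me dizzy", "negative"),
              ("No effect at all", "neutral")],
    "test": [("Hate this medicine so much!", "negative"), ("Helped a lot", "positive")],
}


def describe(instances):
    """Number of instances, label distribution and mean whitespace-token count."""
    lengths = [len(text.split()) for text, _ in instances]
    return {
        "n_instances": len(instances),
        "label_counts": dict(Counter(label for _, label in instances)),
        "mean_tokens": mean(lengths) if lengths else 0.0,
    }


for name, instances in splits.items():
    print(name, describe(instances))
```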

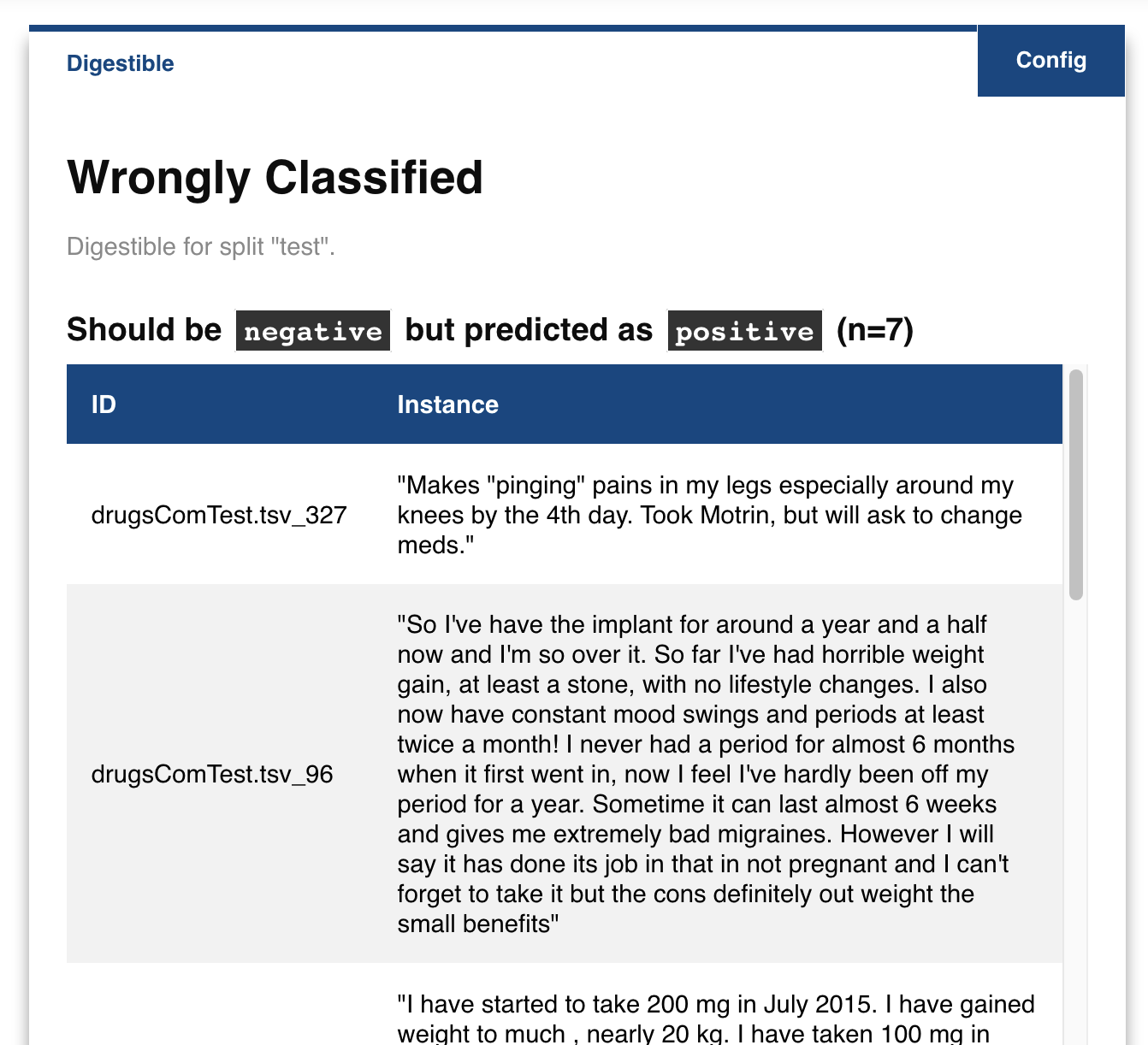

>>> # Show wrongly classified instances
>>> box.examine.wrongly_classified()
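Conceptually, finding wrongly classified instances means predicting every instance in a split and keeping those where the prediction differs from the ground-truth label. A minimal sketch, where `wrongly_classified` and the keyword-based `toy_model` are hypothetical stand-ins, not the explabox's internals:

```python
from typing import Callable, List, Tuple


def wrongly_classified(model: Callable[[str], str],
                       instances: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (text, true label, predicted label) for every misclassified instance."""
    errors = []
    for text, label in instances:
        predicted = model(text)
        if predicted != label:
            errors.append((text, label, predicted))
    return errors


def toy_model(text: str) -> str:
    # Hypothetical keyword classifier standing in for the imported ONNX model
    return "negative" if "hate" in text.lower() else "positive"


test_split = [("Hate this medicine so much!", "negative"),
              ("No effect at all", "neutral"),
              ("Helped a lot", "positive")]
print(wrongly_classified(toy_model, test_split))
# [('No effect at all', 'neutral', 'positive')]
```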

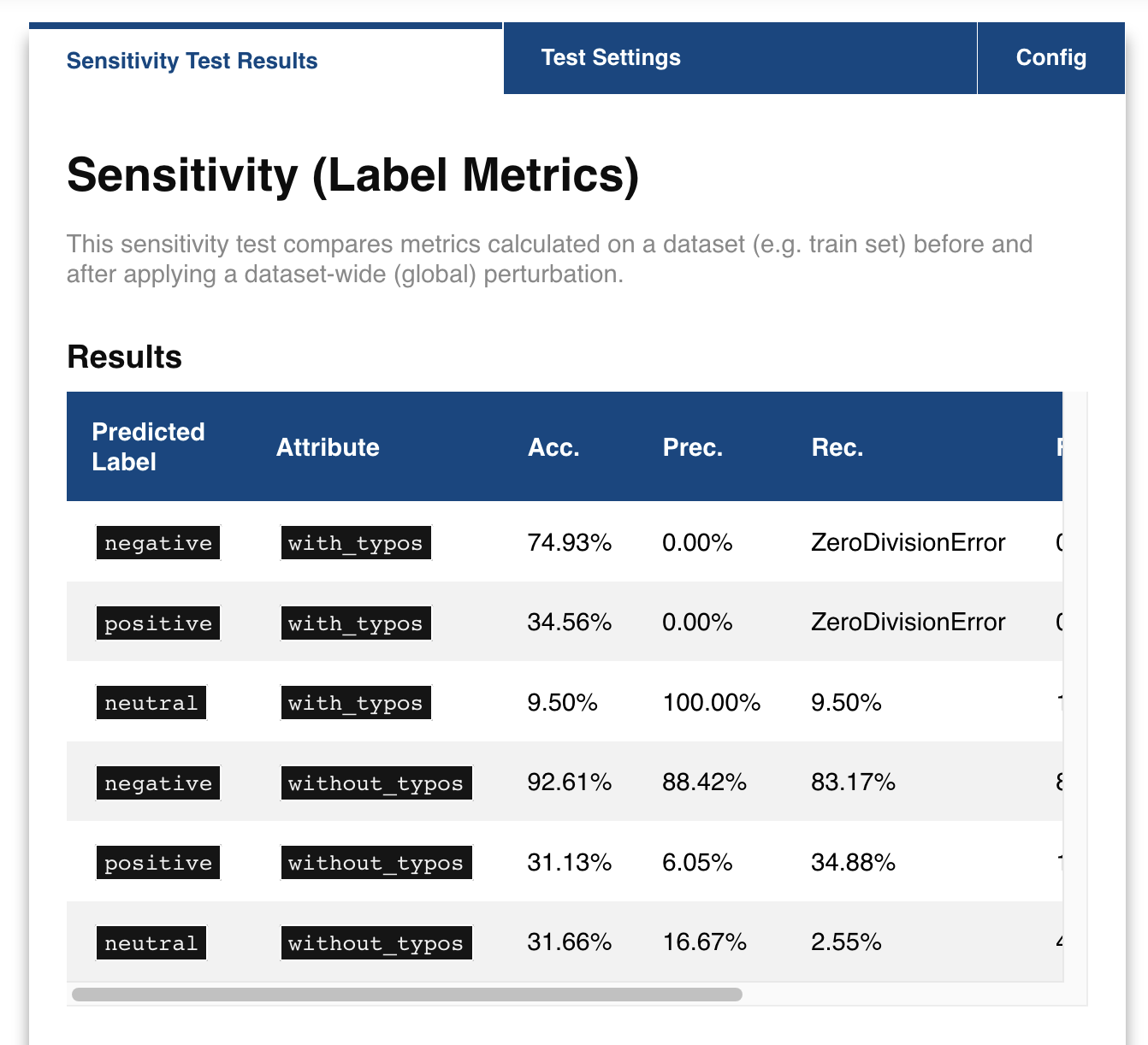

>>> # Compare the performance on the test split before and after adding typos to the text
>>> box.expose.compare_metrics(split='test', perturbation='add_typos')
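The idea behind comparing metrics under a perturbation can be sketched in a few lines: perturb every instance, rerun the model and compare a metric on both versions. Everything here is an assumption for illustration: the typo function (randomly swapping adjacent letters), accuracy as the only metric, and the toy model. The explabox's own `add_typos` perturbation and the metrics it reports may differ.

```python
import random
from typing import Callable, List, Tuple

Instances = List[Tuple[str, str]]  # (text, label) pairs


def add_typos(text: str, rate: float, rng: random.Random) -> str:
    """Swap adjacent letters at random: a crude, hypothetical typo perturbation."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the swapped pair so no letter moves twice
        else:
            i += 1
    return "".join(chars)


def accuracy(model: Callable[[str], str], instances: Instances) -> float:
    return sum(model(t) == y for t, y in instances) / len(instances)


def compare_accuracy(model, instances, rate=0.1, seed=0):
    """Accuracy on the original instances versus typo-perturbed copies."""
    rng = random.Random(seed)  # seeded so the perturbation is reproducible
    perturbed = [(add_typos(t, rate, rng), y) for t, y in instances]
    return {"original": accuracy(model, instances),
            "perturbed": accuracy(model, perturbed)}


def toy_model(text: str) -> str:
    # Hypothetical classifier that only recognises the exact word "hate"
    return "negative" if "hate" in text.lower().split() else "positive"


test_split = [("I hate it", "negative"), ("Works fine", "positive")]
print(compare_accuracy(toy_model, test_split, rate=1.0))
# {'original': 1.0, 'perturbed': 0.5}
```

A large drop from `original` to `perturbed` signals that the model is not robust to this kind of noise.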

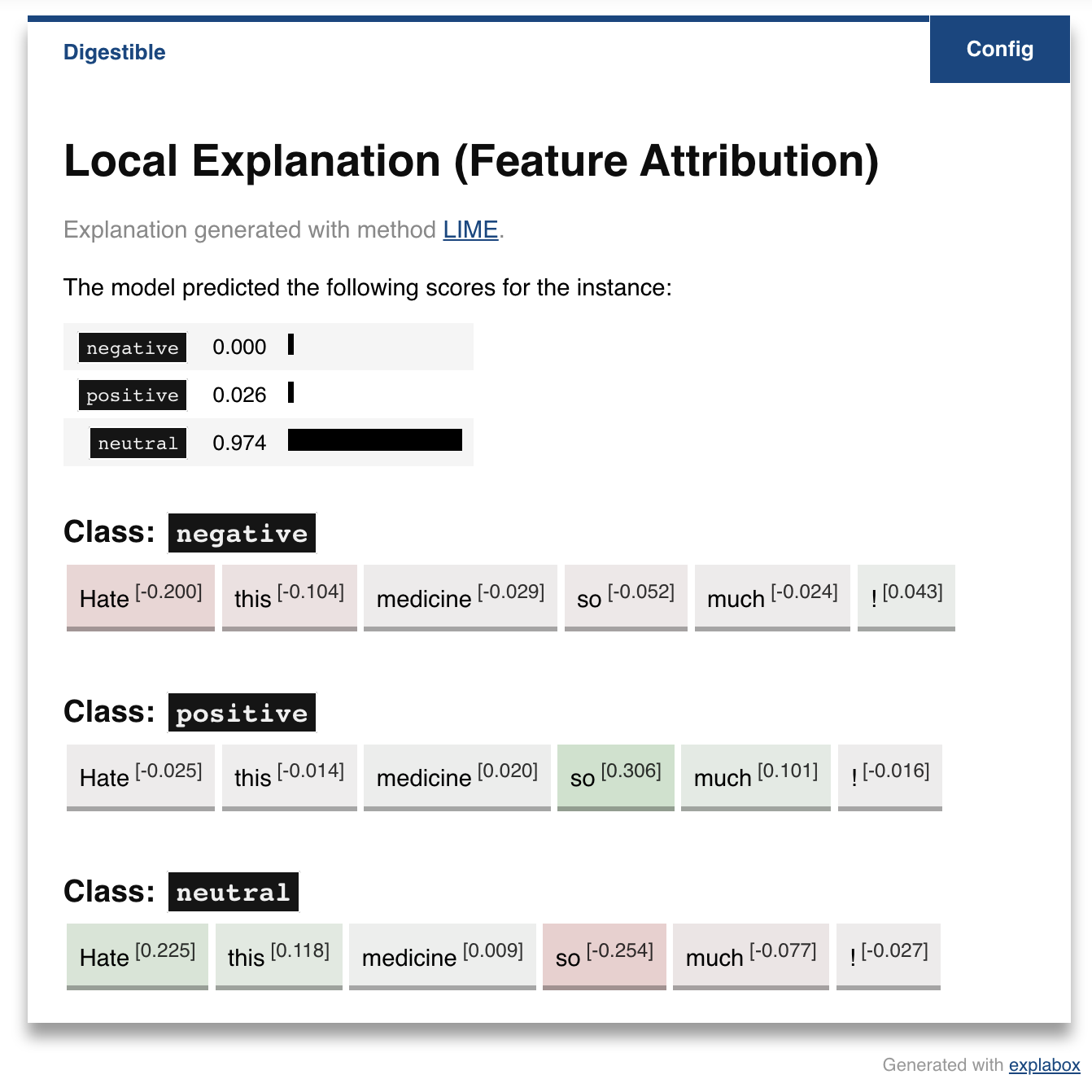

>>> # Get a local explanation (uses LIME by default)
>>> box.explain.explain_prediction('Hate this medicine so much!')
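The explabox uses LIME for local explanations by default; LIME fits a locally weighted linear surrogate model on many perturbed copies of the input. As a much simpler stand-in that conveys the same idea of attributing a prediction to individual words, the sketch below uses leave-one-word-out occlusion instead. This is not LIME and not the explabox's implementation; `occlusion_explanation` and the keyword-based scorer are hypothetical.

```python
from typing import Callable, List, Tuple


def occlusion_explanation(score: Callable[[str], float], text: str) -> List[Tuple[str, float]]:
    """Importance of each word = drop in the score when that word is removed."""
    words = text.split()
    base = score(text)
    importances = []
    for i, word in enumerate(words):
        reduced = " ".join(words[:i] + words[i + 1:])
        importances.append((word, base - score(reduced)))
    # Most influential words first (ties keep their original order)
    return sorted(importances, key=lambda pair: pair[1], reverse=True)


def toy_negative_score(text: str) -> float:
    # Hypothetical "probability of negative": a baseline plus keyword weights
    weights = {"hate": 0.6, "so": 0.1}
    total = sum(weights.get(w.strip("!.,").lower(), 0.0) for w in text.split())
    return min(1.0, 0.1 + total)


print(occlusion_explanation(toy_negative_score, "Hate this medicine so much!"))
```

Occlusion needs one model call per word and ignores interactions between words, which is part of why sampling-based methods such as LIME are used in practice.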

For more information, visit the explabox documentation.


Installation

The easiest way to install the latest release of the explabox is through pip:

user@terminal:~$ pip install explabox
Collecting explabox
...
Installing collected packages: explabox
Successfully installed explabox

:information_source: The explabox requires Python 3.8 or above.
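Given the Python 3.8 minimum, a quick check of the running interpreter can save a failed install. A minimal sketch; the helper name `meets_python_requirement` is hypothetical and not part of the explabox:

```python
import sys


def meets_python_requirement(version_info=sys.version_info, minimum=(3, 8)) -> bool:
    """True if the (major, minor) version is at least the given minimum."""
    return tuple(version_info[:2]) >= minimum


if not meets_python_requirement():
    raise SystemExit(f"explabox needs Python 3.8+, found {sys.version.split()[0]}")
print("Python version OK")
```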

See the full installation guide for troubleshooting the installation and other installation methods.

Documentation

Documentation for the explabox is hosted externally on explabox.rtfd.io.

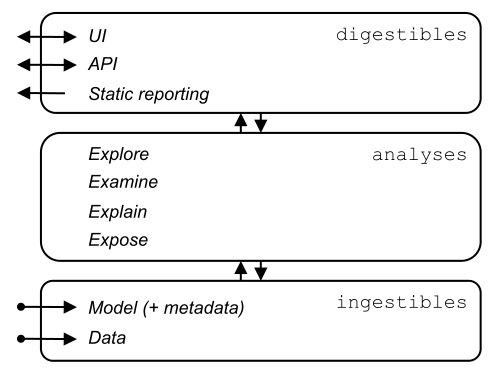

The explabox consists of three layers:

- Ingestibles provide a unified interface for importing models and data, which abstracts away how they are accessed and allows for optimized processing.

- Analyses are used to turn opaque ingestibles into transparent digestibles. The four types of analyses are explore, examine, explain and expose.

- Digestibles provide insights into model behavior and data, assisting stakeholders in increasing the explainability, fairness, auditability and safety of their AI systems. Depending on their needs, these can be accessed interactively (e.g. via the Jupyter Notebook UI or embedded via the API) or through static reporting.
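To illustrate how the three layers relate (a model and data enter as ingestibles, an analysis processes them, and the result comes out as a digestible), here is a toy sketch. The class names echo the terminology above, but `Ingestibles`, `Digestible` and `examine_accuracy` are hypothetical and do not mirror the explabox's actual classes or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Ingestibles:
    """Hypothetical unified wrapper around a model and its labelled data splits."""
    model: Callable[[str], str]
    data: Dict[str, List[tuple]]  # split name -> list of (text, label)


@dataclass
class Digestible:
    """Hypothetical container for the outcome of one analysis."""
    analysis: str
    content: dict


def examine_accuracy(ingestibles: Ingestibles, split: str) -> Digestible:
    """An 'analysis': turns (opaque) ingestibles into a (transparent) digestible."""
    instances = ingestibles.data[split]
    correct = sum(ingestibles.model(t) == y for t, y in instances)
    return Digestible("examine", {"split": split, "accuracy": correct / len(instances)})


ingestibles = Ingestibles(
    model=lambda t: "negative" if "hate" in t.lower() else "positive",
    data={"test": [("I hate it", "negative"), ("No effect", "neutral")]},
)
print(examine_accuracy(ingestibles, "test"))
```

Keeping the analysis separate from both the ingestibles and the digestible is what lets the same result be rendered interactively or in a static report.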

Example usage

The example usage guide showcases the explabox for a black-box model performing multi-class classification of the UCI Drug Reviews dataset.

Without requiring any local installation, the notebook is also provided online.

If you want to follow along on your own device, simply pip install explabox-demo-drugreview and run the lines in the Jupyter notebook we have prepared for you!

Releases

The explabox is officially released through PyPI. The changelog includes a full overview of the changes for each version.

Contributing

The explabox is an open-source project developed and maintained primarily by the Netherlands National Police Lab AI (NPAI). However, your contributions and improvements are still very welcome! See contributing for a full contribution guide.

Citation

...