It would be great if we could also get full datasets from different SDC prototypes (including those from Udacity, Waterloo, MIT and other universities) driving in similar conditions. That would show whether current sensor technology is enough (I assume it is) to detect a moving target (a person with a bike) at 50 m while driving at 60 km/h, and then to notify the safety driver that an obstacle is in range (FCW), automatically switch to high beams so the long-range cameras can see better, and slow down while approaching the target to increase the chances of stopping or avoiding impact.
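To put the 50 m at 60 km/h scenario in numbers, here is a rough stopping-distance sketch. It assumes a straight, dry road, constant hard braking of 7 m/s², and two example reaction times (0.5 s for an automated system, 1.5 s for a human driver). These values are illustrative assumptions, not measurements from the accident, and the target is treated as stationary.

```python
KMH_TO_MS = 1000 / 3600  # km/h -> m/s


def stopping_distance(speed_kmh: float, reaction_s: float, decel_ms2: float) -> float:
    """Distance covered during the reaction time plus braking distance v^2 / (2a)."""
    v = speed_kmh * KMH_TO_MS
    return v * reaction_s + v * v / (2 * decel_ms2)


def time_to_reach(distance_m: float, speed_kmh: float) -> float:
    """Time to cover distance_m at constant speed (no braking)."""
    return distance_m / (speed_kmh * KMH_TO_MS)


if __name__ == "__main__":
    speed = 60.0      # km/h, from the scenario above
    detect_at = 50.0  # m, detection range from the scenario above
    decel = 7.0       # m/s^2, ASSUMED hard braking on a dry road
    for reaction in (0.5, 1.5):  # s, ASSUMED automated vs human reaction time
        d = stopping_distance(speed, reaction, decel)
        print(f"reaction {reaction} s: stops in {d:.1f} m, margin {detect_at - d:.1f} m")
    print(f"time to reach the target without braking: {time_to_reach(detect_at, speed):.1f} s")
```

Under these assumptions the car needs about 3 s to cover the 50 m if it does not brake. Both reaction times still allow a stop, but the 1.5 s case leaves only about 5 m of margin. Changing `decel` or the reaction times shows how quickly that margin disappears.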

We should also try to see how recent ADAS systems behave in those conditions, in Tesla, Volkswagen, etc.

If anyone has access to these kinds of cars (SDC or advanced ADAS), please share your investigations and tests.

This is the minimum that should have happened in the accident scenario. It would be good to see how as many cars as possible with this feature activated would have behaved:

Forward Collision Warning (FCW) Systems http://brainonboard.ca/safety_features/active_safety_features_fcw.php

"However, if an obstacle like a pedestrian or vehicle approaches quickly from the side, for example, the FCW system will have less time to assess the situation and therefore a driver relying exclusively on this safety feature to provide a warning of a collision will have less time to react."
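The limitation in this quote can be illustrated with a simple constant-velocity prediction: a check that only considers objects already inside the ego lane misses a crossing pedestrian who will be in the lane by the time the car arrives. The sketch below is a hypothetical illustration, not how any particular FCW product works; the `Track` structure, the 1.5 m half-lane width and the 2.5 s warning threshold are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

FCW_THRESHOLD_S = 2.5  # hypothetical warning threshold, not from any product spec


@dataclass
class Track:
    """A tracked object relative to the ego car (hypothetical structure)."""
    x: float   # longitudinal distance ahead of the ego car, m
    y: float   # lateral offset from the ego lane centre, m (positive = right)
    vy: float  # lateral velocity, m/s (constant-velocity assumption)


def predicted_ttc(track: Track, ego_speed_ms: float, half_lane_m: float = 1.5) -> Optional[float]:
    """Time for the ego car to reach the object's x position, if the object is
    predicted to be inside the ego lane at that moment; otherwise None."""
    if track.x <= 0 or ego_speed_ms <= 0:
        return None
    t = track.x / ego_speed_ms
    y_at_arrival = track.y + track.vy * t
    return t if abs(y_at_arrival) <= half_lane_m else None


def in_lane_only_ttc(track: Track, ego_speed_ms: float, half_lane_m: float = 1.5) -> Optional[float]:
    """Naive variant: only objects already inside the ego lane are considered."""
    if track.x <= 0 or ego_speed_ms <= 0 or abs(track.y) > half_lane_m:
        return None
    return track.x / ego_speed_ms


def should_warn(ttc: Optional[float], threshold_s: float = FCW_THRESHOLD_S) -> bool:
    """Warn when a predicted time-to-collision exists and is below the threshold."""
    return ttc is not None and ttc <= threshold_s


if __name__ == "__main__":
    ego = 60 / 3.6  # 60 km/h in m/s
    cyclist = Track(x=50.0, y=-5.0, vy=1.5)  # 5 m left of lane centre, crossing
    print("predicted TTC:", predicted_ttc(cyclist, ego))
    print("in-lane-only TTC:", in_lane_only_ttc(cyclist, ego))
```

For a person walking a bike 50 m ahead, 5 m to the left of the lane centre and crossing at 1.5 m/s, with the car at 60 km/h, `predicted_ttc` gives about 3 s while `in_lane_only_ttc` gives `None`. The naive check sees no threat yet, which is exactly the reduced reaction time the quote describes.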

Here is the satellite view; the accident happened slightly northeast of the Marquee Theatre sign:

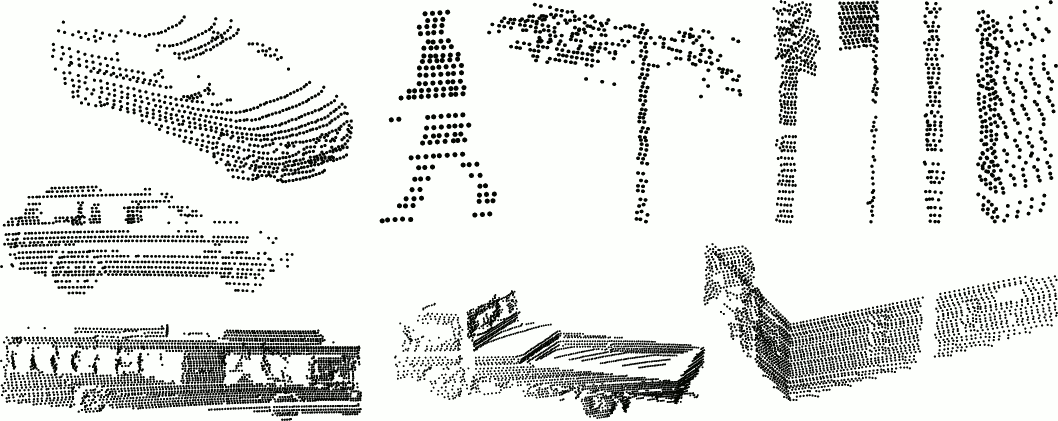

Collect datasets from similar situations and analyse them to help everyone understand what the car should have seen and what could have been done to avoid the accident.

Help us obtain the dataset from the Uber SDC involved in the accident, covering at least 3 min before and 1 min after the impact (this is a reply to the police tweet with the video of the accident): https://twitter.com/GTARobotics/status/976628350331518976

A few initial pointers to accident info:

The Google Maps StreetView link where the accident happened:

642 North Mill Avenue, Tempe, Arizona, USA https://goo.gl/maps/wTDdCvSzc522

Brad Templeton's analysis of the accident: https://twitter.com/GTARobotics/status/976726328488710150 https://twitter.com/bradtem/status/978013912359555072

Experts Break Down the Self-Driving Uber Crash https://twitter.com/GTARobotics/status/978025535807934470?s=09

Experts' views on why LIDAR should have detected the person from far away: https://twitter.com/GTARobotics/status/977764787328356352

This is the moment when we decide that human lives matter more than cars https://www.curbed.com/transportation/2018/3/20/17142090/uber-fatal-crash-driverless-pedestrian-safety

Uber self-driving system should have spotted woman, experts say http://www.cbc.ca/beta/news/world/uber-self-driving-accident-video-1.4587439

IIHS testing shows the Volvo XC90 headlights reaching just under 250 feet (76 meters) with low beams on! https://twitter.com/GTARobotics/status/977995274122682368
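The IIHS figure also allows a quick sanity check on speed. If the 76 m of low-beam range is treated as the usable sight distance (a simplification, since illumination is not uniform across the road), the highest speed at which a car can still stop within it is the positive root of v·t + v²/(2a) = d. The sketch assumes 7 m/s² braking and a 0.5 s or 1.5 s reaction time; these are illustrative values, not measurements.

```python
import math


def max_speed_for_sight_distance(sight_m: float, reaction_s: float, decel_ms2: float) -> float:
    """Largest speed v (m/s) for which reaction distance plus braking distance
    fits in sight_m, i.e. the positive root of v**2 / (2*a) + v*t - d = 0."""
    a_t = decel_ms2 * reaction_s
    return -a_t + math.sqrt(a_t * a_t + 2 * decel_ms2 * sight_m)


if __name__ == "__main__":
    sight = 76.0  # m, IIHS low-beam figure for the Volvo XC90
    decel = 7.0   # m/s^2, ASSUMED hard braking on a dry road
    for reaction in (0.5, 1.5):  # s, ASSUMED automated vs human reaction time
        v = max_speed_for_sight_distance(sight, reaction, decel)
        print(f"reaction {reaction} s: max {v * 3.6:.0f} km/h")
```

Under these assumptions both results come out above the 60 km/h used in the scenario (about 86 km/h even with a 1.5 s reaction time). On paper, then, low beams alone would leave room to stop at that speed, provided the obstacle is detected as soon as it is lit.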

Help us get the companies currently testing SDCs to provide datasets from their own cars in situations similar to the accident: https://twitter.com/GTARobotics/status/977773180344512512

Let's also capture current SDC sensor configurations/specs in: https://github.com/OSSDC/OSSDC-Hacking-Book/wiki

Join the discussions on OSSDC Slack at http://ossdc.org