SpideyX 🕸️

SpideyX is a web reconnaissance and penetration testing tool for penetration testers and ethical hackers. It is built around multiple modes and uses asynchronous, concurrent execution for performance. Each of its three modes takes a different approach: crawling, JS scraping, and parameter fuzzing. In practice, SpideyX is one tool that does the work of three.

Installation:

Install using pip:

pip install git+https://github.com/RevoltSecurities/SpideyX.git

Install using pipx:

pipx install spideyx

Install using git:

git clone https://github.com/RevoltSecurities/SpideyX

cd SpideyX

pip install .

Any of these methods installs SpideyX on your machine, ready to run. The sections below show how to use it.

SpideyX main mode usage:

spideyx -h

@RevoltSecurities

[DESCRIPTION]: spideyX - An asynchronous concurrent web penetration testing multipurpose tool

[MODES]:

- crawler : spideyX asynchronous crawler mode to crawl URLs with more user-controllable settings.

- paramfuzzer : spideyX paramfuzzer mode, a fast mode to fuzz hidden parameters.

- jsscrapy : spideyX jsscrapy mode, with asynchronous concurrency, to find hidden endpoints and secrets using YAML template-based regex.

- update : spideyX update mode to update and get latest update logs of spideyX

[FLAGS]:

-h, --help : Shows this help message and exits.

[Usage]:

spideyx [commands]

Available Commands:

- crawler : Executes web crawling mode of spideyX.

- paramfuzzer : Executes paramfuzzer bruteforcing mode of spideyX.

- jsscrapy : Executes jsscrapy mode of spideyX to crawl JS for hidden endpoints & credentials using template-based regex.

- update : Executes the update mode of spideyX

Help Commands:

- crawler : spideyx crawler -h

- paramfuzzer : spideyx paramfuzzer -h

- jsscrapy : spideyx jsscrapy -h

- update : spideyx update -h

SpideyX Modes:

SpideyX has three modes, each serving a different purpose:

- crawler

- jsscrapy

- paramfuzzer

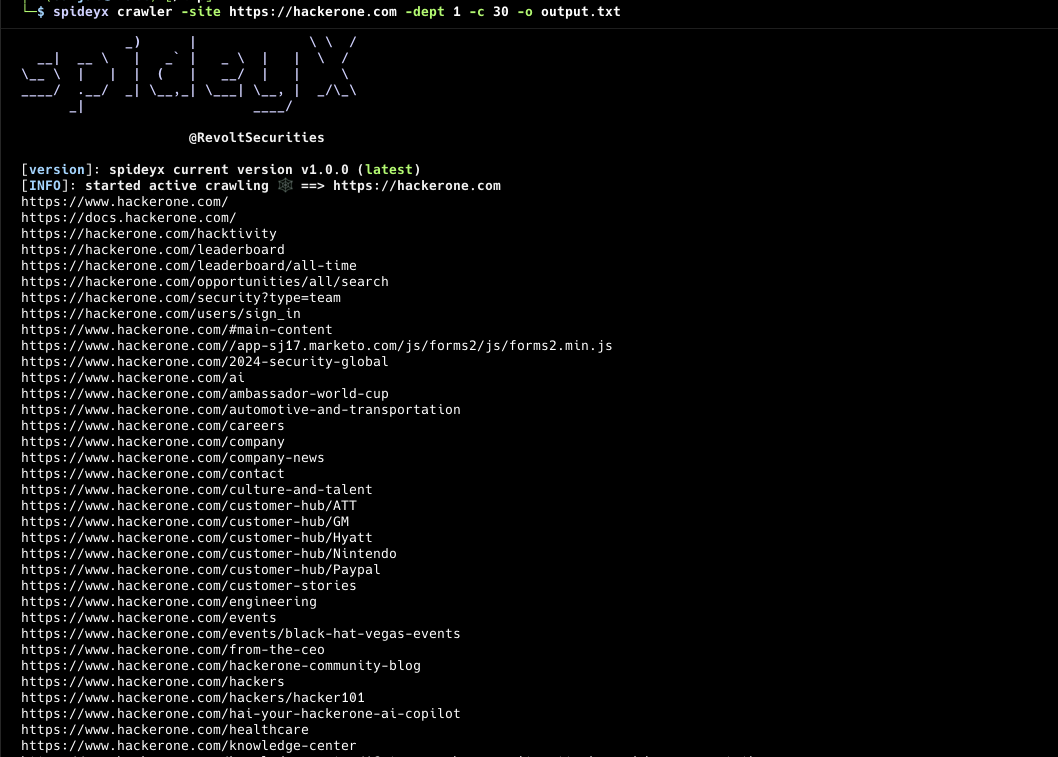

SpideyX Crawler Mode:

SpideyX Crawler Mode is more than just another web crawling tool. It provides advanced capabilities for both active and passive crawling of web applications. Whether you need to explore URLs recursively with a specified depth or perform comprehensive passive crawling using various external resources, SpideyX has you covered.

SpideyX crawler Features:

- Customizable crawl scope

- Customizable output filtering and matching (extensions, regex, hosts)

- Concurrent depth-recursive crawling

- Customizable HTML tags & attributes for crawling

- Authenticated active crawling

- HTTP/2 supported

- Active & Passive crawling supported

- Input: stdin, url, file

- Output: file, stdout

- Random User-Agent for each crawling request
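Concurrent crawling with a random User-Agent per request usually comes down to capping how many requests are in flight at once. The Python sketch below shows one common way to do that with asyncio.Semaphore; it illustrates the technique and is not SpideyX's source. The User-Agent strings, the fake_fetch stand-in (it sleeps instead of sending HTTP requests), and the choice to apply the delay while still holding a slot are all assumptions.

```python
import asyncio
import random

# Hypothetical User-Agent pool for illustration; SpideyX's own list is not shown here.
USER_AGENTS = [
    "Mozilla/5.0 (X11; Linux x86_64; rv:124.0) Gecko/20100101 Firefox/124.0",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/123.0 Safari/537.36",
]


async def fake_fetch(url: str, headers: dict) -> str:
    """Hypothetical stand-in for an HTTP GET: sleeps instead of using the network."""
    await asyncio.sleep(0.01)
    return f"{url} <- {headers['User-Agent']}"


async def crawl_all(urls, concurrency=50, delay=0.1):
    """Fetch all URLs with at most `concurrency` requests in flight at once."""
    semaphore = asyncio.Semaphore(concurrency)
    in_flight = 0
    peak = 0  # highest number of simultaneous fetches observed

    async def worker(url):
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            # Choose a new User-Agent for every request (random-agent style).
            headers = {"User-Agent": random.choice(USER_AGENTS)}
            try:
                return await fake_fetch(url, headers)
            finally:
                in_flight -= 1
                # Assumed delay semantics: pause while still holding the slot.
                await asyncio.sleep(delay)

    results = await asyncio.gather(*(worker(u) for u in urls))
    return results, peak


if __name__ == "__main__":
    targets = [f"https://example.com/page/{i}" for i in range(20)]
    results, peak = asyncio.run(crawl_all(targets, concurrency=5, delay=0.0))
    print(len(results), "pages, peak concurrency", peak)
```

Here concurrency stands in for the crawler's -c value and delay for -delay. This sketch does not cover how SpideyX combines them with -pl.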

SpideyX crawler mode usage:

spideyx crawler -h

@RevoltSecurities

[MODE]: spideyX crawler mode!

[Usage]:

spideyx crawler [options]

Options for crawler mode:

[input]:

-site, --site : target url for spideyX to crawl

-sites, --sites : target urls for spideyX to crawl

stdin/stdout : target urls for spideyX using stdin

[configurations]:

-tgs, --tags-attrs : configure which HTML tags and attributes spideyX crawls (ex: -tgs 'a:href,link:src,src:script')

-dept, --dept : depth to crawl for URLs (info: only in active crawling) (default: 1)

-X, --method : request method for crawling (default: get) (choices: "get", "post", "head", "put", "delete", "patch", "trace", "connect", "options")

-H, --headers : custom headers & cookies to send in http request for authenticated or custom header crawling

-ra, --random-agent : use random user agents for each request in crawling urls instead of using default user-agent of spideyx

-to , --timeout : timeout values for each request in crawling urls (default: 15)

-px, --proxy : http proxy url to send request via proxy

-ar, --allow-redirect : follow redirects for each http request

-mr, --max-redirect : values for max redirection to follow

-mxr, --max-redirection : specify maximum value for maximum redirection to be followed when making requests.

-cp, --config-path : configuration file path for spideyX

--http2, --http2 : use http2 protocol to give request and crawl urls, endpoints

[scope]:

-hic, --host-include : specify hosts whose URLs are included in results, as comma-separated values (ex: -hic api.google.com,admin.google.com)

-hex, --host-exclude : specify hosts whose URLs are excluded from results, as comma-separated values (ex: -hex class.google.com,nothing.google.com)

-cs, --crawl-scope : specify the inscope url to be crawled by spideyx (ex: -cs /api/products or -cs inscope.txt)

-cos, --crawl-out-scope : specify the outscope url to be not crawled by spideyx (ex: -cos /api/products or -cos outscope.txt)

[filters]:

-em, --extension-match : extensions to whitelist and include in output, as comma-separated values (ex: -em php,asp,jspx,html)

-ef, --extension-filter : extensions to blacklist and exclude from output, as comma-separated values (ex: -ef jpg,css,woff)

-mr, --match-regex : specify a regex or list of regex to match in output by spideyx

-fr, --filter-regex : specify a regex or list of regex to exclude in output by spideyx

[passive]:

-ps, --passive : use passive resources for crawling (alienvault, commoncrawl, virustotal)

-pss, --passive-source : passive sources to use for finding endpoints, as comma-separated values (ex: -pss alienvault,virustotal)

[rate-limits]:

-c, --concurrency : concurrency value for concurrent crawling (default: 50)

-pl, --parallelism : parallelism value for crawling URLs in parallel (default: 2)

-delay, --delay : specify a delay value between concurrent requests (default: 0.1)

[output]:

-o, --output : output file to store results of spideyX

[debug]:

-s, --silent : display only output to console

-vr, --verbose : increase the verbosity of the output

SpideyX crawler mode configurations:

File Input:

spideyx crawler -sites urls.txt

URL Input:

spideyx crawler -site https://hackerone.com

Stdin Input:

cat urls.txt | spideyx crawler -dept 5

subdominator -d hackerone.com | subprober -nc | spideyx crawler

Authenticated Crawling:

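Using the -H or --headers flag, SpideyX can crawl protected endpoints that require authentication. If you supply headers such as Authorization: Bearer <token>, plus any custom headers the target expects, the crawler can reach pages that are only served to an authenticated user.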
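Conceptually, authenticated crawling just means every request the crawler sends carries the same extra headers. Here is a minimal sketch using Python's standard urllib.request.Request. build_auth_request is a hypothetical helper. Treating each -H as a (name, value) pair is an assumption, since the exact argument format SpideyX parses is not spelled out here.

```python
from urllib.request import Request


def build_auth_request(url, header_pairs, method="GET"):
    """Build a request carrying every (name, value) header pair.

    Hypothetical helper: header_pairs plays the role of repeated -H flags.
    """
    request = Request(url, method=method)
    for name, value in header_pairs:
        # urllib stores names via str.capitalize(), e.g. "X-bugbounty-user";
        # HTTP header names are case-insensitive, so servers treat them the same.
        request.add_header(name, value)
    return request


if __name__ == "__main__":
    pairs = [
        ("Authorization", "Bearer <token>"),
        ("X-Bugbounty-User", "user@wearehackerone.com"),
    ]
    req = build_auth_request("https://target.com/account", pairs)
    print(req.get_method(), req.full_url, req.header_items())
    # Sending it would be: urllib.request.urlopen(req)
```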

echo https://target.com | spideyx crawler -dept 5 -H Authorization eyhweiowuaohbdabodua.cauogodaibeajbikrba.wuogasugiuagdi -H X-Bugbounty-User user@wearehackerone.com

Depth Crawling:

- Controlled exploration depth: The -dept flag defines how deep the crawler goes into a website's structure, measured in levels of linked pages from the starting URL, so you can limit or expand exploration.

- Efficient resource management: Setting a depth limit keeps the crawler from going too deep into the site. This reduces resource consumption and focuses the crawl on the more relevant sections of the web application.
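A depth limit is typically enforced with a breadth-first traversal that tracks each page's distance from the start URL. The sketch below assumes that depth 1 means "fetch the start page and report the links found on it, but do not follow them". That reading of -dept is an assumption. The links dictionary is a hypothetical stand-in for fetching and parsing real pages.

```python
from collections import deque


def crawl_with_depth(start, links, max_depth=1):
    """Breadth-first crawl that never expands pages deeper than max_depth.

    `links` maps each URL to the URLs found on that page; it is a hypothetical
    stand-in for fetching and parsing, so only the depth logic is shown.
    """
    seen = {start}
    queue = deque([(start, 0)])  # (url, distance from start)
    visited = []
    while queue:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # report this page, but do not follow its links
        for nxt in links.get(url, []):
            if nxt not in seen:  # skip pages already queued (handles cycles)
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return visited


if __name__ == "__main__":
    site = {
        "https://example.com/": ["https://example.com/a", "https://example.com/b"],
        "https://example.com/a": ["https://example.com/a/1"],
        "https://example.com/b": ["https://example.com/"],
    }
    print(crawl_with_depth("https://example.com/", site, max_depth=2))
```

The seen set also prevents pages that link back to each other from being queued twice.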

Filters & Matching:

SpideyX can filter and match its output by extension, regex, and host. To keep only URLs with the extensions you want, use -em, --extension-match:

spideyx crawler -site https://hackerone.com -em .js,.jsp,.asp,.aspx,.php

SpideyX has a predefined blacklist of extensions that it does not crawl or include in output. To filter out additional extensions, use -ef, --extension-filter:

spideyx crawler -site https://hackerone.com -ef .css,.woff,.woff2,.mp3,.mp4,.pdf
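Extension and regex filtering can be pictured as a chain of checks on each discovered URL. The sketch below is illustrative and is not SpideyX's implementation. It compares only the extension of the URL path, so query strings are ignored. It accepts extensions with or without a leading dot, because both forms appear in the examples above. The parameters map to -em, -ef, -mr (--match-regex) and -fr by analogy only.

```python
import re
from pathlib import PurePosixPath
from urllib.parse import urlparse


def parse_exts(value):
    """Turn '.js,.php' or 'js,php' into {'js', 'php'}; None if the flag is unset."""
    if not value:
        return None
    return {e.strip().lstrip(".").lower() for e in value.split(",") if e.strip()}


def url_extension(url):
    """Lowercase extension of the URL path without the dot ('' when there is none)."""
    return PurePosixPath(urlparse(url).path).suffix.lstrip(".").lower()


def filter_urls(urls, match_ext=None, filter_ext=None, match_regex=None, filter_regex=None):
    """Keep only URLs that pass every configured check, preserving input order."""
    keep_exts = parse_exts(match_ext)   # like -em: whitelist
    drop_exts = parse_exts(filter_ext)  # like -ef: blacklist
    kept = []
    for url in urls:
        ext = url_extension(url)
        if keep_exts is not None and ext not in keep_exts:
            continue
        if drop_exts is not None and ext in drop_exts:
            continue
        if match_regex and not re.search(match_regex, url):
            continue  # like -mr, --match-regex: URL must match
        if filter_regex and re.search(filter_regex, url):
            continue  # like -fr: URL must not match
        kept.append(url)
    return kept


if __name__ == "__main__":
    found = [
        "https://hackerone.com/app.js",
        "https://hackerone.com/style.css",
        "https://hackerone.com/index.php?id=1",
    ]
    print(filter_urls(found, match_ext=".js,.php"))
```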

Sometimes you don't want every subdomain of your target in the results. SpideyX can include or exclude hosts. Use -hic, --host-include to keep only URLs from the hosts you list.
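Host scoping reduces to comparing each URL's hostname against the include and exclude lists. This Python sketch assumes exact hostname matching. That assumption fits the example below, which lists www.hackerone.com and hackerone.com separately, but SpideyX may match differently. parse_hosts and in_host_scope are hypothetical names.

```python
from urllib.parse import urlparse


def parse_hosts(value):
    """Split a comma-separated flag value like 'www.hackerone.com,hackerone.com'."""
    return {h.strip().lower() for h in value.split(",") if h.strip()}


def in_host_scope(url, include=None, exclude=None):
    """True if the URL's host passes the include list and is not excluded.

    Assumes exact hostname matching: 'hackerone.com' does not cover
    'api.hackerone.com'.
    """
    host = (urlparse(url).hostname or "").lower()
    if include and host not in include:
        return False
    if exclude and host in exclude:
        return False
    return True


if __name__ == "__main__":
    keep = parse_hosts("developer.hackerone.com,www.hackerone.com,hackerone.com")
    found = ["https://developer.hackerone.com/api", "https://docs.hackerone.com/x"]
    print([u for u in found if in_host_scope(u, include=keep)])
```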

spideyx crawler -site https://hackerone.com -hic developer.hackerone.com,www.hackerone.com,hackerone.com

To keep specific hosts out of the crawl results, use -hex, --host-exclude:

spideyx crawler -site https://hackerone.com -hex www.hackerone.com,hackerone.com

Tag and Attribute Configuration:

-tgs, --tags-attrs

Configure which HTML tags and attributes SpideyX should crawl. This flag allows you to control the crawling behavior by specifying tags and attributes of interest.

Example: -tgs 'a:href,link:src,src:script'

Description: This option tells SpideyX to look for and extract URLs from href attributes in <a> tags, src attributes in <link> tags, and src attributes in <script> tags.
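The tag/attribute option can be sketched with Python's built-in html.parser. The approach is to split the flag value into (tag, attribute) pairs, collect attribute values only from matching start tags, and resolve them against the page URL. parse_tag_attrs, LinkExtractor and extract_urls are hypothetical names. Reading each pair as tag:attribute is an assumption based on the a:href form. This is not SpideyX's own parser.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


def parse_tag_attrs(spec):
    """Split 'a:href,script:src' into {('a', 'href'), ('script', 'src')}."""
    pairs = set()
    for item in spec.split(","):
        tag, _, attr = item.strip().partition(":")
        if tag and attr:
            pairs.add((tag.lower(), attr.lower()))
    return pairs


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from the configured (tag, attribute) pairs only."""

    def __init__(self, base_url, pairs):
        super().__init__()
        self.base_url = base_url
        self.pairs = pairs
        self.urls = []

    def handle_starttag(self, tag, attrs):
        # html.parser passes tag and attribute names already lowercased.
        for name, value in attrs:
            if (tag, name) in self.pairs and value:
                self.urls.append(urljoin(self.base_url, value))


def extract_urls(html, base_url, spec):
    """Return URLs found in `html` for the tag:attribute pairs in `spec`."""
    parser = LinkExtractor(base_url, parse_tag_attrs(spec))
    parser.feed(html)
    parser.close()
    return parser.urls


if __name__ == "__main__":
    page = (
        '<a href="/login">Login</a>'
        '<link rel="stylesheet" href="/style.css">'
        '<script src="https://cdn.example.com/app.js"></script>'
    )
    print(extract_urls(page, "https://hackerone.com/", "a:href,link:src,script:src"))
```

With this reading, a pair such as link:src only matches <link> elements that actually carry a src attribute. Stylesheets declared with href are skipped unless link:href is listed.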