SkillHack

We have adapted SkillHack to work for quest_{easy,medium,hard}. For reproducibility, we have built a Docker container. The Dockerfile is located in the root of the project.

Installation

This repository depends on the MiniHack repo (https://github.com/facebookresearch/minihack) and on TorchBeast. The instructions below are for a rootless Docker installation. The cluster does not work with Docker, only with Singularity; the Docker setup is provided just to show that the code runs and achieves similar results. To build the Docker container, run:

docker build -t hks -f Dockerfile .

Once the container is built, navigate to the directory where this repo is cloned and run:

docker run --mount type=bind,source="$PWD",target=/workspace \
    --rm \
    -v /tmp/.X11-unix:/tmp/.X11-unix \
    -e DISPLAY=$DISPLAY \
    -it \
    hks

This might not work, because it requires exposing your display, which is not recommended. Without exposing the display, however, you cannot see any GUI through the container. In that case you can instead run:

docker run --mount type=bind,source="$PWD",target=/workspace --rm -it hks

This will connect you to the running container.

Running experiments

To train an option, you may need to set the environment variable again:

export SKILL_TRANSFER_HOME=$PWD/skill_transfer_weights

To train REINFORCE with baseline (Advantage Actor-Critic), you can then run:

python -m agent.polybeast.skill_transfer_polyhydra model=baseline env=mini_skill_fight use_lstm=false total_steps=1e7

All the options are pretrained on the cluster and are present here. To train the Hierarchical Kickstarting models, you can use:

python -m agent.polybeast.skill_transfer_polyhydra model=hks env=quest_easy

To play using pretrained skills, you can run:

python -m scripts.play_pretrained

Understanding this repo

This repo is a tightly intertwined collection of classes. The driving "main" classes are

skill_transfer_learner.py

skill_transfer_env.py

The batching and queuing functions are handled by TorchBeast.

TorchBeast is a distributed, scalable learner and actor framework that handles the backbone of training. TorchBeast uses skill_transfer_env.py to create the environments. These environments are vectorized by a class defined in agent/common/envs/tasks.py. Once the environment server is started, the actors can connect to it and start the rollouts for each actor.

actor.run() performs the rollouts using the batching queue and the inference queue. skill_transfer_learner.py holds the HKS, FOC and baseline losses. The actual loss calculations are in agent/polybeast/models/losses.py.

The models are all descendants of a base MiniHackAgent class. The base models inherit from this class; HKS, baseline and FOC are then child classes that build upon the base class.

Once the options are trained, the hierarchical models find them using a tasks.json file. This allows us to add any skill as a subtask. The models then look up the paths of the pretrained skills.
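The lookup described above can be pictured with a minimal sketch. It is not the repository's implementation: the function name `resolve_skill_paths` and the JSON schema (a task name mapped to a list of skill names) are assumptions made for illustration. Only the `<skill>.tar` naming inside SKILL_TRANSFER_HOME is taken from this README.

```python
import json
from pathlib import Path


def resolve_skill_paths(tasks_file, skill_home, task_name):
    """Map each skill listed for `task_name` to its expected weights file.

    Assumes a hypothetical schema: {"<task>": ["<skill>", ...]}.
    Raises FileNotFoundError naming every skill whose weights are missing.
    """
    with open(tasks_file) as f:
        tasks = json.load(f)
    # Pretrained skills are expected at <skill_home>/<skill>.tar.
    paths = {skill: Path(skill_home) / f"{skill}.tar" for skill in tasks[task_name]}
    missing = sorted(skill for skill, path in paths.items() if not path.is_file())
    if missing:
        raise FileNotFoundError(f"missing pretrained skills: {', '.join(missing)}")
    return paths
```

Under this assumed schema, adding a new skill as a subtask would amount to appending its name to the task's list and placing the matching `.tar` file in SKILL_TRANSFER_HOME.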

Everything below this point is the original README from the UCL SkillHack repository.

SkillHack

Source code for Hierarchical Kickstarting for Skill Transfer in Reinforcement Learning (CoLLAs 2022).

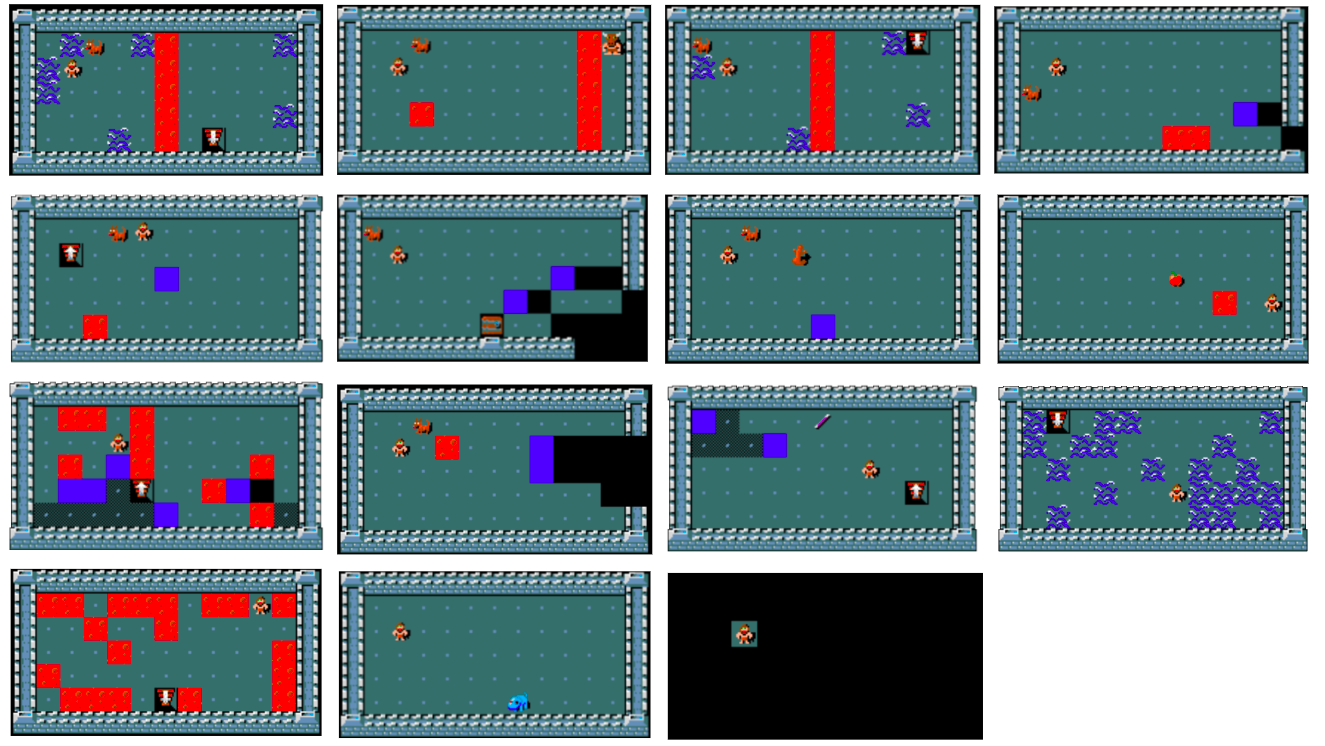

SkillHack is a repository for skill-based learning built on MiniHack and the NetHack Learning Environment (NLE). SkillHack consists of 16 simple skill acquisition environments and 8 complex task environments. The task environments are difficult to solve due to the large state-action space and sparsity of rewards, but can be made tractable by transferring knowledge gained from the simpler skill acquisition environments.

How to run a Skill Transfer experiment

First, create a directory to store pretrained skills in and set the environment variable SKILL_TRANSFER_HOME to point to this directory. This directory must also include a file called skill_config.yaml. A default setting for this file can be found in the same directory as this readme.
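Before launching a run, it can help to verify this setup. The following is a small sketch of such a check, not part of the repository; the helper name `check_skill_home` is hypothetical, and it only tests the two requirements stated above (the environment variable and the presence of skill_config.yaml).

```python
import os
from pathlib import Path


def check_skill_home(environ=os.environ):
    """Return a list of problems with the SKILL_TRANSFER_HOME setup (empty if none)."""
    home = environ.get("SKILL_TRANSFER_HOME")
    if not home:
        return ["SKILL_TRANSFER_HOME is not set"]
    problems = []
    root = Path(home)
    if not root.is_dir():
        problems.append(f"{root} is not a directory")
    elif not (root / "skill_config.yaml").is_file():
        # The README requires this file to live inside the skills directory.
        problems.append(f"{root / 'skill_config.yaml'} is missing")
    return problems
```

Calling `check_skill_home()` with no arguments inspects the current process environment; passing a plain dict makes the check easy to try out without changing any real variables.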

Next, this directory must be populated with pretrained skill experts. These are obtained by running skill_transfer_polyhydra.py on a skill-specific environment.

Full Skill Expert Training Walkthrough

In this example we train the fight skill.

First, the agent is trained with the following command

python -m agent.polybeast.skill_transfer_polyhydra model=baseline env=mini_skill_fight use_lstm=false total_steps=1e7

Once the agent is trained, the final weights are automatically copied to SKILL_TRANSFER_HOME and renamed to the name of the skill environment. So in this example, the skill would be saved at

${SKILL_TRANSFER_HOME}/mini_skill_fight.tar

If a file with this name already exists in SKILL_TRANSFER_HOME (i.e. if you've already trained an agent on this skill), then the new skill expert is saved as

${SKILL_TRANSFER_HOME}/${ENV_NAME}_${CURRENT_TIME_SECONDS}.tar

Tasks that make use of skills will use the path given in the first example (i.e. without the current time). If you want to use your newer agent, you need to delete the old file and rename the new file to remove the time from it.
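The naming rule and the manual "delete and rename" step can be sketched as follows. This is an illustration of the behaviour described above, not the repository's code; both function names are hypothetical, and the timestamp is assumed to be whole seconds since the epoch.

```python
import time
from pathlib import Path


def skill_save_path(skill_home, env_name, now=None):
    """Return where a newly trained skill expert would be stored."""
    home = Path(skill_home)
    default = home / f"{env_name}.tar"
    if not default.exists():
        return default
    # A file for this skill already exists, so fall back to a timestamped name.
    stamp = int(time.time() if now is None else now)
    return home / f"{env_name}_{stamp}.tar"


def promote_newer_expert(skill_home, env_name, timestamped_name):
    """Make a timestamped expert the one that tasks will load."""
    home = Path(skill_home)
    # Path.replace overwrites the old default file in a single step.
    return (home / timestamped_name).replace(home / f"{env_name}.tar")
```

Using `Path.replace` combines the delete and the rename, so there is no moment at which the default file for the skill is absent.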

Training other skills

Repeat this for all skills.

Remember that all skills need to be trained with use_lstm=false.

The full list of skills to be trained is:

- mini_skill_apply_frost_horn

- mini_skill_eat

- mini_skill_fight

- mini_skill_nav_blind

- mini_skill_nav_lava

- mini_skill_nav_lava_to_amulet

- mini_skill_nav_water

- mini_skill_pick_up

- mini_skill_put_on

- mini_skill_take_off

- mini_skill_throw

- mini_skill_unlock

- mini_skill_wear

- mini_skill_wield

- mini_skill_zap_cold

- mini_skill_zap_death
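To avoid typing the command sixteen times, the training invocations can be generated from the list above. This is a minimal sketch: the helper name `skill_training_command` is hypothetical, and reusing total_steps=1e7 from the fight walkthrough for every skill is an assumption, not something this README states.

```python
# The 16 skill environments listed in this README.
SKILLS = [
    "mini_skill_apply_frost_horn", "mini_skill_eat", "mini_skill_fight",
    "mini_skill_nav_blind", "mini_skill_nav_lava", "mini_skill_nav_lava_to_amulet",
    "mini_skill_nav_water", "mini_skill_pick_up", "mini_skill_put_on",
    "mini_skill_take_off", "mini_skill_throw", "mini_skill_unlock",
    "mini_skill_wear", "mini_skill_wield", "mini_skill_zap_cold",
    "mini_skill_zap_death",
]


def skill_training_command(env_name, total_steps="1e7"):
    """Build the argument list for training one skill expert without an LSTM."""
    return [
        "python", "-m", "agent.polybeast.skill_transfer_polyhydra",
        "model=baseline", f"env={env_name}",
        "use_lstm=false",  # required for all skills
        f"total_steps={total_steps}",  # assumed to match the fight walkthrough
    ]


# Print one command per skill; each could also be passed to subprocess.run.
for skill in SKILLS:
    print(" ".join(skill_training_command(skill)))
```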

Training on Tasks

If the relevant skills for the environment are not present in SKILL_TRANSFER_HOME an error will be shown indicating which skill is missing. The skill transfer specific models are

- foc: Options Framework

- ks: Kickstarting

- hks: Hierarchical Kickstarting

The tasks created for skill transfer are

- mini_simple_seq: Battle

- mini_simple_union: Over or Around

- mini_simple_intersection: Prepare for Battle

- mini_simple_random: Target Practice

- mini_lc_freeze: Frozen Lava Cross

- mini_medusa: Medusa

- mini_mimic: Identify Mimic

- mini_seamonsters: Sea Monsters

So, for example, to run hierarchical kickstarting on the Target Practice environment, one would call

python -m agent.polybeast.skill_transfer_polyhydra model=hks env=mini_simple_random

All other parameters can be set in the same way as with polyhydra.py.

Repeating the Paper's Experiments

The runs from the paper can be repeated with the following command. If you don't want to run with wandb, set wandb=false.

python -m agent.polybeast.skill_transfer_polyhydra --multirun model=ks,foc,hks,baseline env=mini_simple_seq,mini_simple_intersection,mini_simple_union,mini_simple_random,mini_lc_freeze,mini_medusa,mini_mimic,mini_seamonsters name=1,2,3,4,5,6,7,8,9,10,11,12 total_steps=2.5e8 group=<YOUR_WANDB_GROUP> hks_max_uniform_weight=20 hks_min_uniform_prop=0 train_with_all_skills=false ks_min_lambda_prop=0.05 hks_max_uniform_time=2e7 entity=<YOUR_WANDB_ENTITY> project=<YOUR_WANDB_PROJECT>

Final Notes

- If you want to train with the fixed version of nav_blind, go to data/tasks/tasks.json and replace mini_skill_nav_blind with mini_skill_nav_blind_fixed
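The replacement in the note above can also be scripted. The sketch below is one possible way to do it and is not part of the repository; the function names are hypothetical. It treats tasks.json as plain text and uses a negative lookahead so that running it twice does not produce `mini_skill_nav_blind_fixed_fixed`.

```python
import re
from pathlib import Path

# Match the skill name only when it is not already followed by "_fixed".
_NAV_BLIND = re.compile(r"mini_skill_nav_blind(?!_fixed)")


def use_fixed_nav_blind(text):
    """Swap mini_skill_nav_blind for mini_skill_nav_blind_fixed in `text`."""
    return _NAV_BLIND.sub("mini_skill_nav_blind_fixed", text)


def patch_tasks_file(path="data/tasks/tasks.json"):
    """Rewrite the tasks file in place with the fixed skill name."""
    tasks_path = Path(path)
    tasks_path.write_text(use_fixed_nav_blind(tasks_path.read_text()))
```

Keeping a copy of the original tasks.json (or relying on version control) makes it easy to switch back to the unfixed skill later.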