Judging from the file name, I suppose you took this model, face_detection_yunet_2022mar, from the OpenCV Zoo. The one in this repo is the latest version of YuNet; the one in the OpenCV Zoo is an older version, but we will update it soon.

I suggest you take a look at https://github.com/ShiqiYu/libfacedetection.train/blob/master/onnx/yunet_yunet_final_dynamic_simplify.onnx. It is the latest YuNet and supports dynamic input shapes.

Hello, I'm Kijoong Lee, a software developer at LG Electronics.

We are developing a TFLite-based hardware-accelerated AI inference framework on webOS.

Recently, our benchmarks showed YuNet to be the most suitable face detection model for our needs. By converting the face_detection_yunet_2022mar.onnx model included in OpenCV DNN into a TFLite model, we obtained a face detector with good performance. For reference, we used XNNPACK acceleration.

However, we need a model with an input size larger than 160x120 that can be accelerated by a GPU (or NPU), so we tried to convert and use the models in https://github.com/ShiqiYu/libfacedetection.train/tree/master/onnx, but it didn't work.

Our analysis of the cause is as follows.

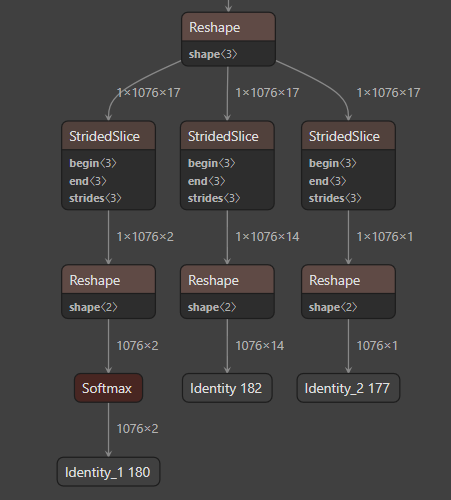

(face_detection_yunet_2022mar_float32.tflite)

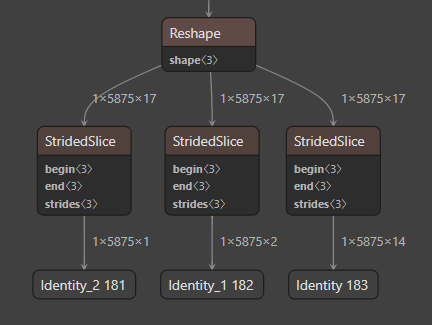

(yunet_yunet_final_320_320_simplify_float32.tflite)

As you can see in the two figures above, the output shapes of the two models are different. The model that works correctly (face_detection_yunet_2022mar) includes a reshape into a two-dimensional tensor and a Softmax operation.
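To make the step in question concrete, here is a minimal sketch in plain Python of what a reshape into a two-dimensional tensor followed by Softmax computes. The function names, the flat input layout, and the choice of two values per row are assumptions made for illustration; they are not taken from either model file.

```python
import math


def reshape_2d(flat, cols):
    """Split a flat list into rows of `cols` values (like Reshape to [-1, cols])."""
    if len(flat) % cols != 0:
        raise ValueError("length of input is not a multiple of cols")
    return [flat[i:i + cols] for i in range(0, len(flat), cols)]


def softmax(row):
    """Numerically stable softmax over a single row."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def class_probabilities(raw_scores, num_classes=2):
    """Hypothetical helper: reshape raw scores to 2-D, then apply Softmax per row.

    Assumption: `raw_scores` holds `num_classes` consecutive values per row.
    """
    return [softmax(row) for row in reshape_2d(raw_scores, num_classes)]


# Two rows of made-up raw scores, two values each.
raw = [0.0, 0.0, 2.0, 0.0]
probs = class_probabilities(raw)
```

Each row of `probs` sums to 1, so a model that ends with this step emits per-row probabilities, while one without it emits raw scores in a different shape.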

How can we make a model with an input size larger than that of face_detection_yunet_2022mar.onnx? Or could you please fix this problem?