ModelForge, a derivative of Ultralytics' YOLOv5, is a family of object detection architectures and models pretrained on the COCO dataset. It combines the work of Sprayer Mods et al. on state-of-the-art computer vision methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development. Sprayer Mods is adding new features and model types to an already amazing repository and model structure. Alongside this, we are implementing several AWS features (SageMaker, S3, EC2).

See the YOLOv5 Docs for full documentation on training, testing and deployment with YOLOv5.

Quick Start Examples

Install

Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.7.0**](https://www.python.org/) environment, including [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/).

```bash
git clone https://github.com/ultralytics/yolov5  # clone
cd yolov5
pip install -r requirements.txt  # install
```

Inference

YOLOv5 [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```python
import torch

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')  # or yolov5n - yolov5x6, custom

# Images
img = 'https://ultralytics.com/images/zidane.jpg'  # or file, Path, PIL, OpenCV, numpy, list

# Inference
results = model(img)

# Results
results.print()  # or .show(), .save(), .crop(), .pandas(), etc.
```

Inference with detect.py

`detect.py` runs inference on a variety of sources, downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

```bash
python detect.py --source 0  # webcam
                          img.jpg  # image
                          vid.mp4  # video
                          path/  # directory
                          'path/*.jpg'  # glob
                          'https://youtu.be/Zgi9g1ksQHc'  # YouTube
                          'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream
```

Training

The commands below reproduce YOLOv5 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) results. [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are 1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://github.com/ultralytics/yolov5/issues/475) times faster). Use the largest `--batch-size` possible, or pass `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.

```bash
python train.py --data coco.yaml --cfg yolov5n.yaml --weights '' --batch-size 128
                                       yolov5s                                64
                                       yolov5m                                40
                                       yolov5l                                24
                                       yolov5x                                16
```
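The compact listing above stacks five model and batch-size pairs under a single command; written out, each pair is a separate `train.py` invocation. The sketch below only assembles the argument lists from the values quoted in this section and does not start any training. `BATCH_SIZES` and `build_train_command` are hypothetical names introduced for illustration and are not part of YOLOv5.

```python
import shlex
from typing import List

# Batch sizes quoted above for a V100-16GB (model name -> --batch-size).
BATCH_SIZES = {
    "yolov5n": 128,
    "yolov5s": 64,
    "yolov5m": 40,
    "yolov5l": 24,
    "yolov5x": 16,
}


def build_train_command(model: str) -> List[str]:
    """Return the train.py argument list for one model (hypothetical helper)."""
    return [
        "python", "train.py",
        "--data", "coco.yaml",
        "--cfg", f"{model}.yaml",
        "--weights", "",  # empty value, exactly as in the command above
        "--batch-size", str(BATCH_SIZES[model]),
    ]


if __name__ == "__main__":
    for name in BATCH_SIZES:
        # shlex.quote keeps the empty --weights value visible as '' when printed.
        print(" ".join(shlex.quote(arg) for arg in build_train_command(name)))
```

Each printed line can be pasted into a shell from the repository root. On a GPU with less memory than a V100-16GB, the batch sizes would likely need to be reduced, or `--batch-size -1` used as described above.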

Tutorials

- [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 RECOMMENDED
- [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️ RECOMMENDED
- [Multi-GPU Training](https://github.com/ultralytics/yolov5/issues/475)
- [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) 🌟 NEW
- [TFLite, ONNX, CoreML, TensorRT Export](https://github.com/ultralytics/yolov5/issues/251) 🚀
- [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)
- [Model Ensembling](https://github.com/ultralytics/yolov5/issues/318)
- [Model Pruning/Sparsity](https://github.com/ultralytics/yolov5/issues/304)
- [Hyperparameter Evolution](https://github.com/ultralytics/yolov5/issues/607)
- [Transfer Learning with Frozen Layers](https://github.com/ultralytics/yolov5/issues/1314)
- [Architecture Summary](https://github.com/ultralytics/yolov5/issues/6998) 🌟 NEW
- [Weights & Biases Logging](https://github.com/ultralytics/yolov5/issues/1289)
- [Roboflow for Datasets, Labeling, and Active Learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 NEW
- [ClearML Logging](https://github.com/ultralytics/yolov5/tree/master/utils/loggers/clearml) 🌟 NEW
- [Deci Platform](https://github.com/ultralytics/yolov5/wiki/Deci-Platform) 🌟 NEW

Integrations

| Weights and Biases | Roboflow ⭐ NEW |
|---|---|
| Automatically track and visualize all your YOLOv5 training runs in the cloud with Weights & Biases | Label and export your custom datasets directly to YOLOv5 for training with Roboflow |

YOLOv7

New SOTA in Object Detection!

YOLOX

Anchorless object detection!

YOLOv5

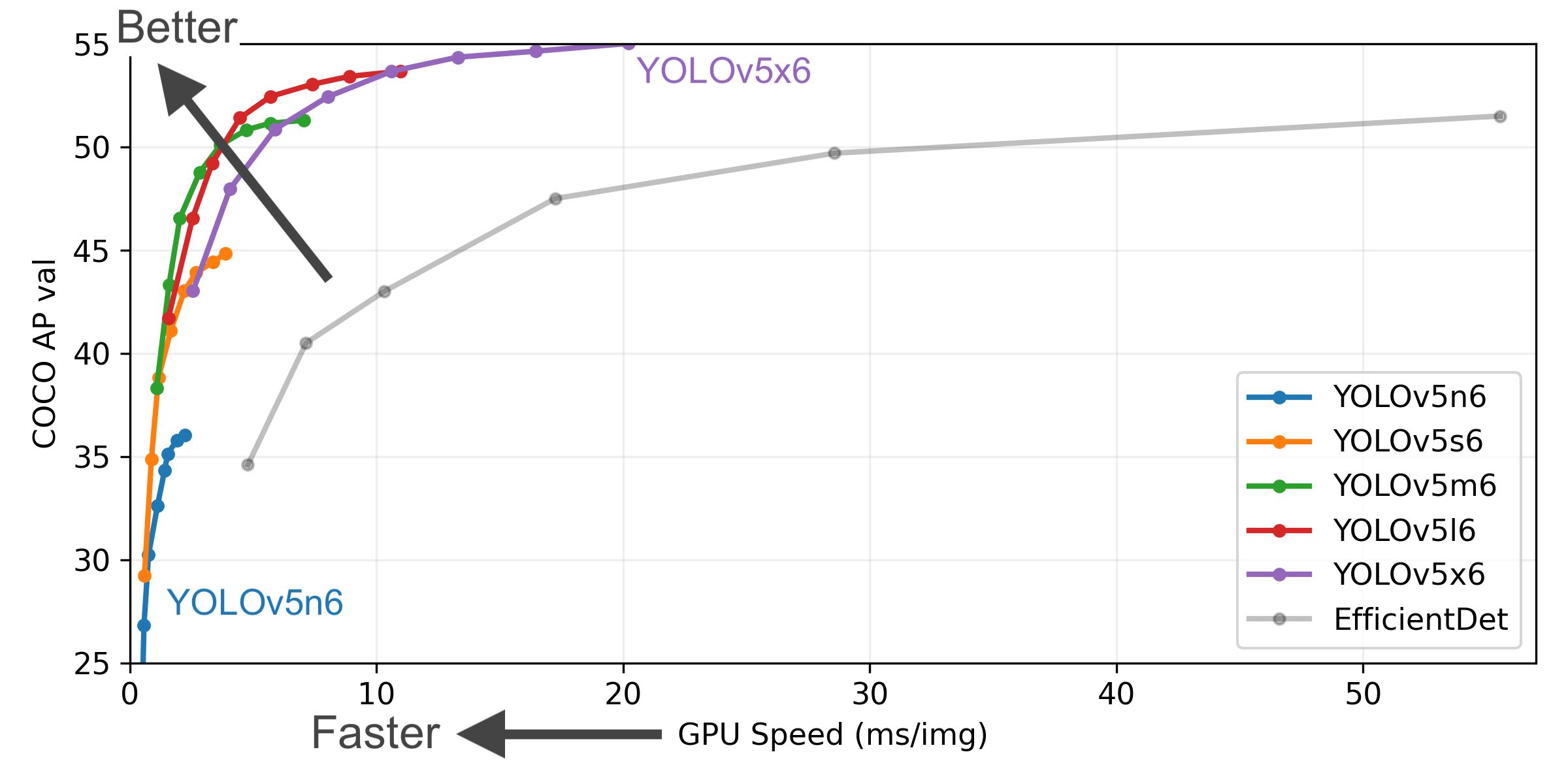

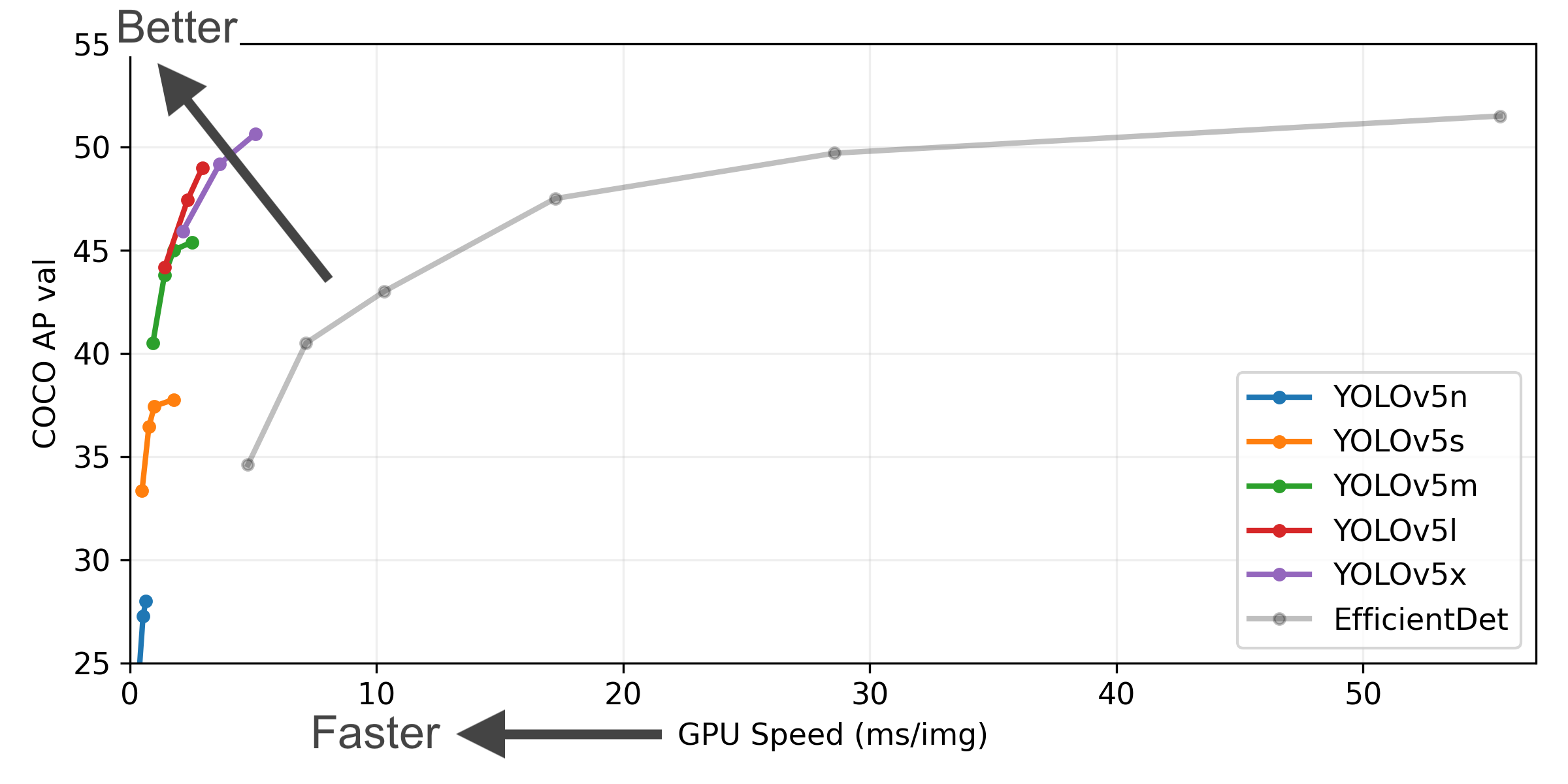

YOLOv5-P5 640 Figure

Figure Notes

- **COCO AP val** denotes the mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.
- **GPU Speed** measures average inference time per image on the [COCO val2017](http://cocodataset.org) dataset using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.
- **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.
- **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

Pretrained Checkpoints

| Model | size (pixels) | mAPval 0.5:0.95 | mAPval 0.5 | Speed CPU b1 (ms) | Speed V100 b1 (ms) | Speed V100 b32 (ms) | params (M) | FLOPs @640 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 640 | 28.0 | 45.7 | 45 | 6.3 | 0.6 | 1.9 | 4.5 |
| YOLOv5s | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |
| YOLOv5m | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |
| YOLOv5l | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |
| YOLOv5x | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |
| YOLOv5n6 | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |
| YOLOv5s6 | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |
| YOLOv5m6 | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |
| YOLOv5l6 | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |
| YOLOv5x6 | 1280 | 55.0 | 72.7 | 3136 | 26.2 | 19.4 | 140.7 | 209.8 |
| YOLOv5x6 + TTA | 1536 | 55.8 | 72.7 | - | - | - | - | - |
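One way to read the checkpoint table is as an accuracy versus latency trade-off. The sketch below copies the 640-pixel rows and picks the highest-mAP model that fits a per-image latency budget on a V100 at batch size 1. `CHECKPOINTS` and `pick_checkpoint` are hypothetical names used only for illustration, and the budget values are arbitrary; the speeds are the table's own figures, so they would not carry over to other hardware.

```python
from typing import List, Optional, Tuple

# (model, mAPval 0.5:0.95, Speed V100 b1 in ms), copied from the 640-pixel rows above.
CHECKPOINTS: List[Tuple[str, float, float]] = [
    ("YOLOv5n", 28.0, 6.3),
    ("YOLOv5s", 37.4, 6.4),
    ("YOLOv5m", 45.4, 8.2),
    ("YOLOv5l", 49.0, 10.1),
    ("YOLOv5x", 50.7, 12.1),
]


def pick_checkpoint(max_latency_ms: float) -> Optional[str]:
    """Return the highest-mAP model whose V100 b1 latency fits the budget."""
    candidates = [
        (m_ap, name)
        for name, m_ap, latency_ms in CHECKPOINTS
        if latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None  # nothing in the table is fast enough
    return max(candidates)[1]


if __name__ == "__main__":
    print(pick_checkpoint(9.0))  # YOLOv5m
```

The same lookup could be extended with the 1280-pixel rows or the CPU column if those match the deployment target more closely.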

Added by Sprayer Mods*

| Model | size (pixels) | mAPval 0.5:0.95 | mAPval 0.5 | Speed CPU b1 (ms) | Speed V100 b1 (ms) | Speed V100 b32 (ms) | params (M) | FLOPs @640 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv7 tiny | 640 | 38.7 | 56.7 | --- | 3.4 | --- | 6.2 | 13.8 |
| YOLOv7 | 640 | 51.2 | 69.7 | --- | 6.2 | --- | 36.9 | 104.7 |
| YOLOX-S | 640 | 39.6 | --- | --- | 9.8 | --- | 9.0 | 26.8 |
| YOLOX-M | 640 | 46.4 | 65.4 | --- | 12.3 | --- | 25.3 | 73.8 |
| YOLOX-L | 640 | 50.0 | 68.5 | --- | 14.5 | --- | 54.2 | 155.6 |
| YOLOX-X | 640 | 51.2 | 69.6 | --- | 17.3 | --- | 99.6 | 281.4 |

*See CITATIONS. Implemented from one of the included repositories/papers listed.

Table Notes

- All checkpoints are trained to 300 epochs with default settings. Nano and Small models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).
- **mAPval** values are for single-model single-scale on the [COCO val2017](http://cocodataset.org) dataset. Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
- **Speed** is averaged over COCO val images using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) instance. NMS times (~1 ms/img) are not included. Reproduce by `python val.py --data coco.yaml --img 640 --task speed --batch 1`
- **TTA** [Test Time Augmentation](https://github.com/ultralytics/yolov5/issues/303) includes reflection and scale augmentations. Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`
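As a reading aid for the **mAPval 0.5:0.95** column: under the COCO convention, AP is averaged over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, so a detection has to overlap its ground-truth box tightly to count at the stricter thresholds. The sketch below is not taken from `val.py`. `box_iou`, `coco_iou_thresholds` and `matched_thresholds` are hypothetical helper names, and matching is simplified to one predicted box against one ground-truth box.

```python
from typing import List, Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def coco_iou_thresholds() -> List[float]:
    """The ten thresholds 0.50, 0.55, ..., 0.95 averaged over in mAP@0.5:0.95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred: Sequence[float], truth: Sequence[float]) -> List[float]:
    """Thresholds at which `pred` would count as a match for `truth`."""
    iou = box_iou(pred, truth)
    return [t for t in coco_iou_thresholds() if iou >= t]


if __name__ == "__main__":
    # A 10x10 prediction shifted 2 pixels down from a 10x10 ground-truth box.
    print(matched_thresholds((0, 0, 10, 10), (0, 2, 10, 12)))
```

In this example the shifted box has an IoU of 80/120, so it counts as a match only at the four loosest thresholds. That is why the 0.5:0.95 column is always lower than the **mAPval 0.5** column.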

Contribute

We love your input! We want to make contributing to ModelForge as easy and transparent as possible. Please see the Contributing Guide to get started. Thank you to all of the YOLOv5 contributors and deep learning researchers!

Contact

For ModelForge bugs and feature requests, please visit GitHub Issues. For business inquiries or professional support requests, please visit https://sprayermods.com/contact.