Trans-Encoder

[arxiv] · [amazon.science blog] · [5min-video] · [talk@RIKEN] · [openreview]

Code repo for the ICLR 2022 paper Trans-Encoder: Unsupervised sentence-pair modelling through self- and mutual-distillations

by Fangyu Liu, Yunlong Jiao, Jordan Massiah, Emine Yilmaz, Serhii Havrylov.

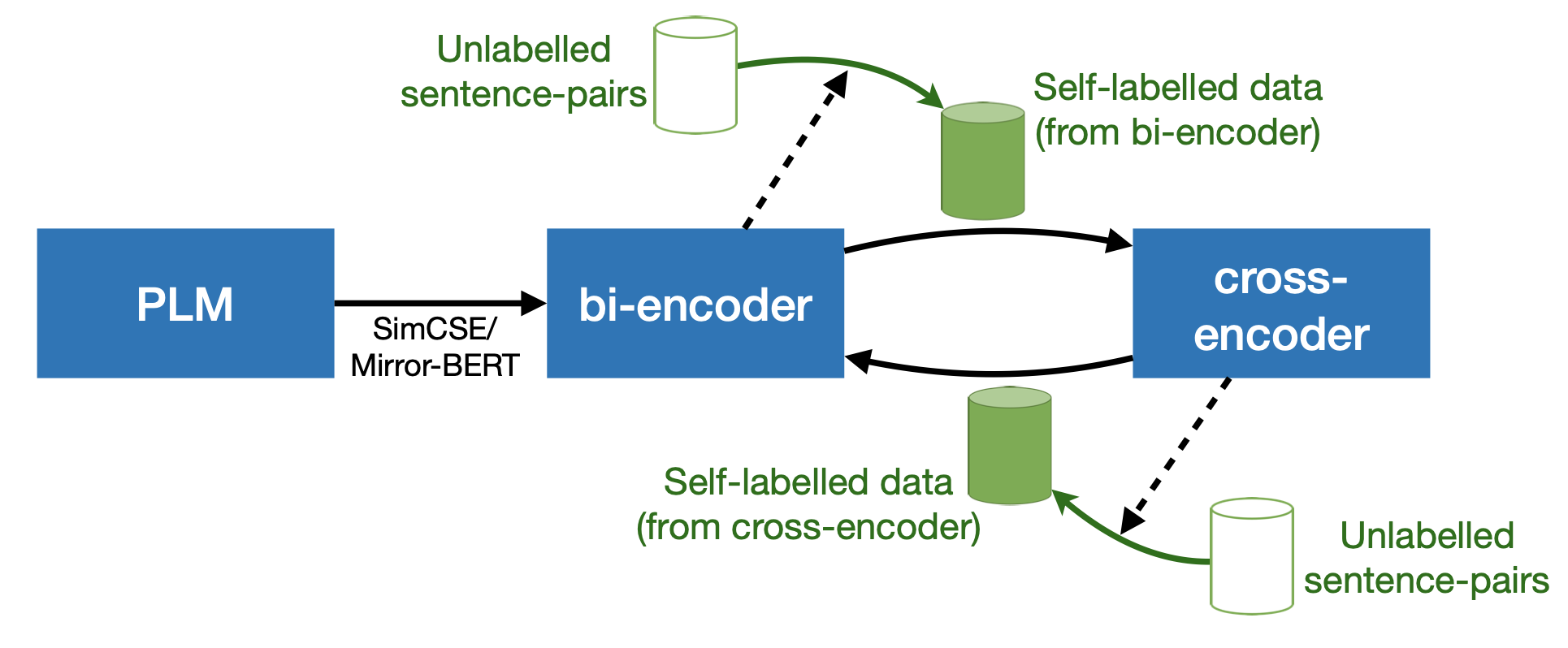

Trans-Encoder is a state-of-the-art unsupervised sentence similarity model. It conducts self-knowledge-distillation on top of pretrained language models by alternating between their bi- and cross-encoder forms.
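The alternation described above can be pictured as a short loop. The following is a minimal, schematic sketch and not the code in this repo: `self_distill`, `memorising_trainer`, and the `Scorer`/`Trainer` types are hypothetical names introduced for illustration, and the "training" step is a stand-in that simply memorises the pseudo-labels it is given.

```python
from typing import Callable, List, Sequence, Tuple

Pair = Tuple[str, str]
# A scorer maps sentence pairs to similarity scores (hypothetical interface).
Scorer = Callable[[Sequence[Pair]], List[float]]
# A trainer fits a model on (pairs, pseudo-labels) and returns its scorer.
Trainer = Callable[[Sequence[Pair], Sequence[float]], Scorer]


def self_distill(
    pairs: Sequence[Pair],
    bi_scorer: Scorer,
    train_cross: Trainer,
    train_bi: Trainer,
    cycles: int = 3,
) -> Tuple[Scorer, Scorer]:
    """Alternate bi-encoder -> cross-encoder -> bi-encoder for `cycles` rounds."""
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    cross_scorer = None
    for _ in range(cycles):
        # 1) The bi-encoder labels the unlabelled pairs.
        pseudo_labels = bi_scorer(pairs)
        # 2) The cross-encoder is trained on those pseudo-labels.
        cross_scorer = train_cross(pairs, pseudo_labels)
        # 3) The cross-encoder relabels the same pairs.
        pseudo_labels = cross_scorer(pairs)
        # 4) The bi-encoder is trained on the cross-encoder's labels.
        bi_scorer = train_bi(pairs, pseudo_labels)
    return bi_scorer, cross_scorer


def memorising_trainer(name: str, log: List[str]) -> Trainer:
    """Toy trainer: records that it ran and memorises the labels it was given."""
    def train(pairs: Sequence[Pair], labels: Sequence[float]) -> Scorer:
        log.append(name)
        table = dict(zip(pairs, labels))
        return lambda batch: [table[p] for p in batch]
    return train
```

In a real run, each trainer would fine-tune a pretrained language model in the corresponding form, and the initial `bi_scorer` would be an unsupervised model such as SimCSE.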

Huggingface pretrained models for STS

Base models:

| model | STS avg. |
|--------|--------|
| baseline: [unsup-simcse-bert-base](https://huggingface.co/princeton-nlp/unsup-simcse-bert-base-uncased) | 76.21 |
| [trans-encoder-bi-simcse-bert-base](https://huggingface.co/cambridgeltl/trans-encoder-bi-simcse-bert-base) | 80.41 |
| [trans-encoder-cross-simcse-bert-base](https://huggingface.co/cambridgeltl/trans-encoder-cross-simcse-bert-base) | 79.90 |
| baseline: [unsup-simcse-roberta-base](https://huggingface.co/princeton-nlp/unsup-simcse-roberta-base) | 76.10 |
| [trans-encoder-bi-simcse-roberta-base](https://huggingface.co/cambridgeltl/trans-encoder-bi-simcse-roberta-base) | 80.47 |
| [trans-encoder-cross-simcse-roberta-base](https://huggingface.co/cambridgeltl/trans-encoder-cross-simcse-roberta-base) | **81.15** |

Large models:

| model | STS avg. |
|--------|--------|
| baseline: [unsup-simcse-bert-large](https://huggingface.co/princeton-nlp/unsup-simcse-bert-large-uncased) | 78.42 |
| [trans-encoder-bi-simcse-bert-large](https://huggingface.co/cambridgeltl/trans-encoder-bi-simcse-bert-large) | 82.65 |
| [trans-encoder-cross-simcse-bert-large](https://huggingface.co/cambridgeltl/trans-encoder-cross-simcse-bert-large) | 82.52 |
| baseline: [unsup-simcse-roberta-large](https://huggingface.co/princeton-nlp/unsup-simcse-roberta-large) | 78.92 |
| [trans-encoder-bi-simcse-roberta-large](https://huggingface.co/cambridgeltl/trans-encoder-bi-simcse-roberta-large) | **82.93** |
| [trans-encoder-cross-simcse-roberta-large](https://huggingface.co/cambridgeltl/trans-encoder-cross-simcse-roberta-large) | **82.93** |

Dependencies

torch==1.8.1

transformers==4.9.0

sentence-transformers==2.0.0

Please view requirements.txt for more details.

Data

All training and evaluation data will be automatically downloaded when running the scripts. See src/data.py for details.

Train

--task options: sts (STS2012-2016 and STS-b), sickr, sts_sickr (STS2012-2016, STS-b, and SICK-R), qqp, qnli, mrpc, snli, custom. See src/data.py for details of each task's data. The default is sts_sickr, which uses all STS data.
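For reference, the option set above could be expressed with `argparse` roughly as follows. This is a hypothetical sketch, not the parser used by the training scripts: `build_parser` and `parse_task_args` are illustrative names, and the rule that `--task custom` requires `--custom_corpus_path` is an assumption based on the custom-corpus command in this README.

```python
import argparse
from typing import List, Optional

# Task names as listed in this README.
TASKS = ["sts", "sickr", "sts_sickr", "qqp", "qnli", "mrpc", "snli", "custom"]


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Illustrative --task parser")
    parser.add_argument("--task", choices=TASKS, default="sts_sickr",
                        help="training/evaluation data to use")
    parser.add_argument("--custom_corpus_path", default=None,
                        help="path to a sent1||sent2 corpus (for --task custom)")
    return parser


def parse_task_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    # Assumption: a custom task is only meaningful with a corpus path.
    if args.task == "custom" and not args.custom_corpus_path:
        parser.error("--task custom requires --custom_corpus_path")
    return args
```

Calling `parse_task_args([])` yields the default task, `sts_sickr`.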

Self-distillation

>> bash train_self_distill.sh 0

0 denotes the GPU device index.

Mutual-distillation

>> bash train_mutual_distill.sh 0,1

Two GPUs are needed. By default, the SimCSE BERT and RoBERTa base models are used for ensembling; add --use_large to switch to the large models.
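To make "ensembling" concrete: one plausible reading, assumed here rather than taken from the training script, is that the similarity scores predicted by the two models for the same sentence pairs are averaged and the averages serve as shared pseudo-labels. `ensemble_pseudo_labels` is a hypothetical helper written for this illustration.

```python
from typing import List, Sequence


def ensemble_pseudo_labels(*model_scores: Sequence[float]) -> List[float]:
    """Average per-pair scores from several models into one pseudo-label list."""
    if not model_scores:
        raise ValueError("need scores from at least one model")
    if len({len(scores) for scores in model_scores}) != 1:
        raise ValueError("all models must score the same number of pairs")
    # zip(*...) groups the scores that different models gave to the same pair.
    return [sum(per_pair) / len(per_pair) for per_pair in zip(*model_scores)]


# Example: a BERT-based and a RoBERTa-based model score the same two pairs.
bert_scores = [1.0, 0.0]
roberta_scores = [0.5, 0.5]
pseudo_labels = ensemble_pseudo_labels(bert_scores, roberta_scores)
```

Averaging lets each model learn from a signal that also reflects the other model's view of the pair.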

Train with your custom corpus

>> CUDA_VISIBLE_DEVICES=0,1 python src/mutual_distill_parallel.py \
    --batch_size_bi_encoder 128 \
    --batch_size_cross_encoder 64 \
    --num_epochs_bi_encoder 10 \
    --num_epochs_cross_encoder 1 \
    --cycle 3 \
    --bi_encoder1_pooling_mode cls \
    --bi_encoder2_pooling_mode cls \
    --init_with_new_models \
    --task custom \
    --random_seed 2021 \
    --custom_corpus_path CORPUS_PATH

CORPUS_PATH should point to your custom corpus, in which every line is a sentence pair in the form sent1||sent2.
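Before training on a custom corpus it can help to check that every line follows the sent1||sent2 format. The snippet below is a small validation sketch, independent of the loader in src/data.py; `parse_corpus_lines` and `load_corpus` are hypothetical helpers, and skipping blank lines is an assumption made here for convenience.

```python
from typing import Iterable, List, Tuple

SEPARATOR = "||"


def parse_corpus_lines(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Parse 'sent1||sent2' lines into (sent1, sent2) tuples, failing loudly on bad lines."""
    pairs = []
    for lineno, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line:
            continue  # assumption: blank lines are ignored
        parts = line.split(SEPARATOR)
        if len(parts) != 2 or not all(part.strip() for part in parts):
            raise ValueError(f"line {lineno}: expected 'sent1||sent2', got {line!r}")
        pairs.append((parts[0].strip(), parts[1].strip()))
    return pairs


def load_corpus(path: str) -> List[Tuple[str, str]]:
    """Read and validate a corpus file."""
    with open(path, encoding="utf-8") as handle:
        return parse_corpus_lines(handle)
```

For example, `parse_corpus_lines(["A man plays a guitar.||Someone is playing music."])` returns a single pair, while a line without the `||` separator raises a `ValueError` that names the offending line.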

Evaluate

Evaluate a single model

Bi-encoder:

>> python src/eval.py \
    --model_name_or_path "cambridgeltl/trans-encoder-bi-simcse-roberta-large" \
    --mode bi \
    --task sts_sickr

Cross-encoder:

>> python src/eval.py \
    --model_name_or_path "cambridgeltl/trans-encoder-cross-simcse-roberta-large" \
    --mode cross \
    --task sts_sickr

Evaluate ensemble

Bi-encoder:

>> python src/eval.py \
    --model_name_or_path1 "cambridgeltl/trans-encoder-bi-simcse-bert-large" \
    --model_name_or_path2 "cambridgeltl/trans-encoder-bi-simcse-roberta-large" \
    --mode bi \
    --ensemble \
    --task sts_sickr

Cross-encoder:

>> python src/eval.py \
    --model_name_or_path1 "cambridgeltl/trans-encoder-cross-simcse-bert-large" \
    --model_name_or_path2 "cambridgeltl/trans-encoder-cross-simcse-roberta-large" \
    --mode cross \
    --ensemble \
    --task sts_sickr

Authors

- Fangyu Liu: Main contributor

Security

See CONTRIBUTING for more information.

License

This project is licensed under the Apache-2.0 License.