CoGAN-tensorflow

A TensorFlow implementation of Coupled Generative Adversarial Networks (NIPS 2016).

This implementation differs slightly from the original Caffe code: the model architecture follows the design of DCGAN.

What's CoGAN?

CoGAN can learn a joint distribution from samples drawn only from the marginal distributions. It does this by enforcing a weight-sharing constraint that limits the network capacity and favors a joint-distribution solution over a product-of-marginals solution.
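The weight-sharing idea can be sketched in a few lines of NumPy. This is only an illustration, not the code in this repo: the layer sizes, the names `shared`, `head_a`, `head_b`, and `generate_pair`, and the use of a single shared layer are all assumptions. Both generators reuse the same first layer and differ only in their last layer, so one noise vector produces a corresponding pair of samples.

```python
import numpy as np

rng = np.random.RandomState(0)

Z_DIM, HIDDEN, OUT = 16, 32, 64  # illustrative sizes, not the repo's

# Shared first layer: its parameters are tied across both generators.
shared = {"W": rng.normal(0, 0.1, (Z_DIM, HIDDEN)), "b": np.zeros(HIDDEN)}

# Domain-specific last layers: each renders the shared features in its own domain.
head_a = {"W": rng.normal(0, 0.1, (HIDDEN, OUT)), "b": np.zeros(OUT)}
head_b = {"W": rng.normal(0, 0.1, (HIDDEN, OUT)), "b": np.zeros(OUT)}


def shared_features(z):
    """Apply the tied layer (linear map followed by ReLU)."""
    return np.maximum(np.dot(z, shared["W"]) + shared["b"], 0.0)


def generate_pair(z):
    """Map one noise batch to a pair of samples, one per domain."""
    h = shared_features(z)
    x_a = np.tanh(np.dot(h, head_a["W"]) + head_a["b"])
    x_b = np.tanh(np.dot(h, head_b["W"]) + head_b["b"])
    return x_a, x_b


z = rng.randn(4, Z_DIM)
x_a, x_b = generate_pair(z)  # two batches of shape (4, OUT)
```

In the paper the discriminators are tied in the opposite direction (their last layers are shared), which this sketch leaves out.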

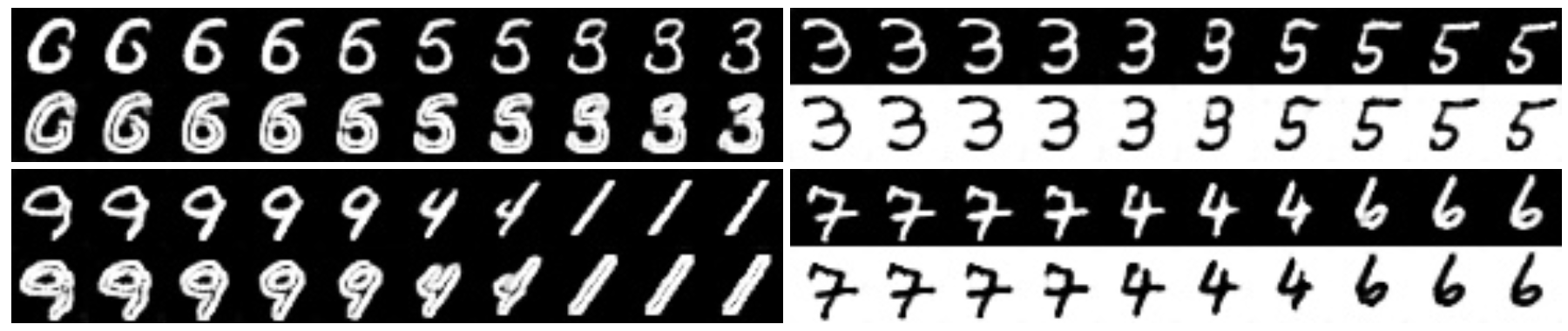

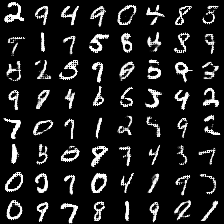

The following figure shows the results reported in the paper:

- Note that all the natural images here are unpaired. In a nutshell, the inputs to the discriminators are never aligned across domains during training.

- The results on the unsupervised domain adaptation (UDA) problem are very impressive, which inspired me to implement this in TensorFlow.
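To make "unpaired" concrete, here is a minimal sketch of how the two real minibatches could be drawn. It is an assumption about the sampling scheme, not a copy of this repo's data loader; `sample_unpaired` and the stand-in arrays are hypothetical names. The point is that the indices for the two domains are drawn independently, so element `i` of one batch has no relation to element `i` of the other.

```python
import numpy as np


def sample_unpaired(domain_a, domain_b, batch_size, rng):
    """Draw one minibatch per domain using independent random indices."""
    idx_a = rng.randint(0, len(domain_a), size=batch_size)
    idx_b = rng.randint(0, len(domain_b), size=batch_size)  # independent of idx_a
    return domain_a[idx_a], domain_b[idx_b]


rng = np.random.RandomState(0)

# Stand-in arrays; in practice these would be the two image sets.
domain_a = rng.rand(1000, 28, 28)
domain_b = rng.rand(800, 28, 28)  # the two sets need not have the same size

batch_a, batch_b = sample_unpaired(domain_a, domain_b, 64, rng)
```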

The following image shows the model architecture described in the paper:

Again: this repo does not currently follow the model architecture in the paper.

Requirements

- Python 2.7

- TensorFlow 0.12

Kick off

First you have to clone this repo:

$ git clone https://github.com/andrewliao11/CoGAN-tensorflow.git

Download the data:

This step automatically downloads the data into the current folder.

$ python download.py mnist

Preprocess (invert) the data:

$ python invert.py

Train your CoGAN:

$ python main.py --is_train True

During training, the average losses of the generators and the discriminators are reported, which can help with debugging. After training, samples for the two domains are saved to ./samples/top and ./samples/bot, respectively.
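For reference, the invert preprocessing step can be pictured as taking the photographic negative of each image, which gives the second domain. The sketch below assumes 8-bit grayscale arrays and a plain `255 - pixel` flip; `invert_images` is a hypothetical helper, and the actual `invert.py` in this repo may work differently.

```python
import numpy as np


def invert_images(images):
    """Return the negative of uint8 images, so 0 becomes 255 and vice versa."""
    images = np.asarray(images, dtype=np.uint8)
    return 255 - images


# Tiny stand-in for a row of pixels: black, mid-gray, white.
digits = np.array([[0, 128, 255]], dtype=np.uint8)
inverted = invert_images(digits)  # [[255, 127, 0]]
```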

To visualize the whole training process, you can use TensorBoard:

$ tensorboard --logdir=logs

Results

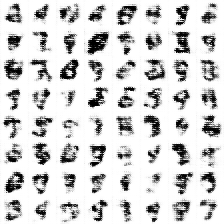

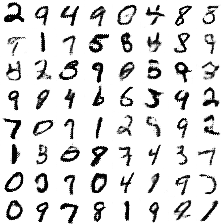

- model in 1st epoch

- model in 5th epoch

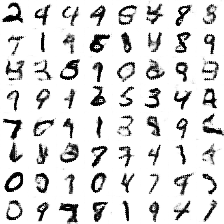

- model in 24th epoch

- Even without paired information, the network generates pairs of images that differ in appearance but share the same high-level concepts.

- Note: To keep the D (discriminator) network from converging too quickly, the G (generator) network is updated twice for each D network update, which differs from the original paper.
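The 2:1 update schedule from the note can be sketched as a plain loop. The names `train_schedule`, `d_step`, `g_step`, and `g_updates_per_d` are hypothetical and stand in for whatever runs the optimizers in the real training code; only the ordering of updates is illustrated here.

```python
def train_schedule(num_iterations, d_step, g_step, g_updates_per_d=2):
    """Run one discriminator update followed by several generator updates."""
    for it in range(num_iterations):
        d_step(it)  # one D update per iteration
        for _ in range(g_updates_per_d):
            g_step(it)  # G is updated more often so D does not win too early


# Record the order of updates instead of running real optimizers.
calls = []
train_schedule(3, lambda it: calls.append("D"), lambda it: calls.append("G"))
# calls is now D, G, G repeated three times
```

Setting `g_updates_per_d=1` gives the usual alternating schedule, which makes it easy to compare the two settings.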

TODOs

- Modify the network structure to get better results

- Try other datasets (WIP)

Reference

This code builds heavily on these repos:

- DCGAN-tensorflow from @carpedm20

- CoGAN from @mingyuliutw