I think my data wasn't being normalized. I tried applying this function before sending the data into the convolutions, and I think it fixed the problem:

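```c
dl_matrix3d_t *normalizeData(dl_matrix3du_t *in, int w, int h)
{
    dl_matrix3d_t *out = dl_matrix3d_alloc(1, w, h, in->c);
    int n = h * w * in->c;  // cover every channel, not just h*w
    for (int i = 0; i < n; i++) {
        // float division; integer (x - 128) / 128 only yields -1 or 0
        out->item[i] = (in->item[i] - 128.0f) / 128.0f;
    }
    return out;
}
```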
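The scaling can be checked off-device on a plain byte buffer. Below is a minimal sketch that does not use the ESP types; the helper name `normalize_u8` is hypothetical. It maps every element of an H×W×C buffer from 0..255 to roughly [-1, 1), and it does the division in floating point, because with integer arithmetic `(x - 128) / 128` truncates to -1 (for x = 0) or 0 (for everything else).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (not part of the ESP library): map 8-bit pixels
 * to floats in [-1, 1) using (x - 128) / 128 on all w*h*c elements. */
void normalize_u8(const uint8_t *in, float *out, size_t w, size_t h, size_t c)
{
    assert(in != NULL && out != NULL);
    size_t count = w * h * c;            /* all channels, not just w*h */
    for (size_t i = 0; i < count; i++) {
        /* 128.0f forces float math instead of truncating integer division */
        out[i] = ((float)in[i] - 128.0f) / 128.0f;
    }
}
```

For example, the pixel values 0, 64, 128, 192 and 255 map to -1, -0.5, 0, 0.5 and 0.9921875.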

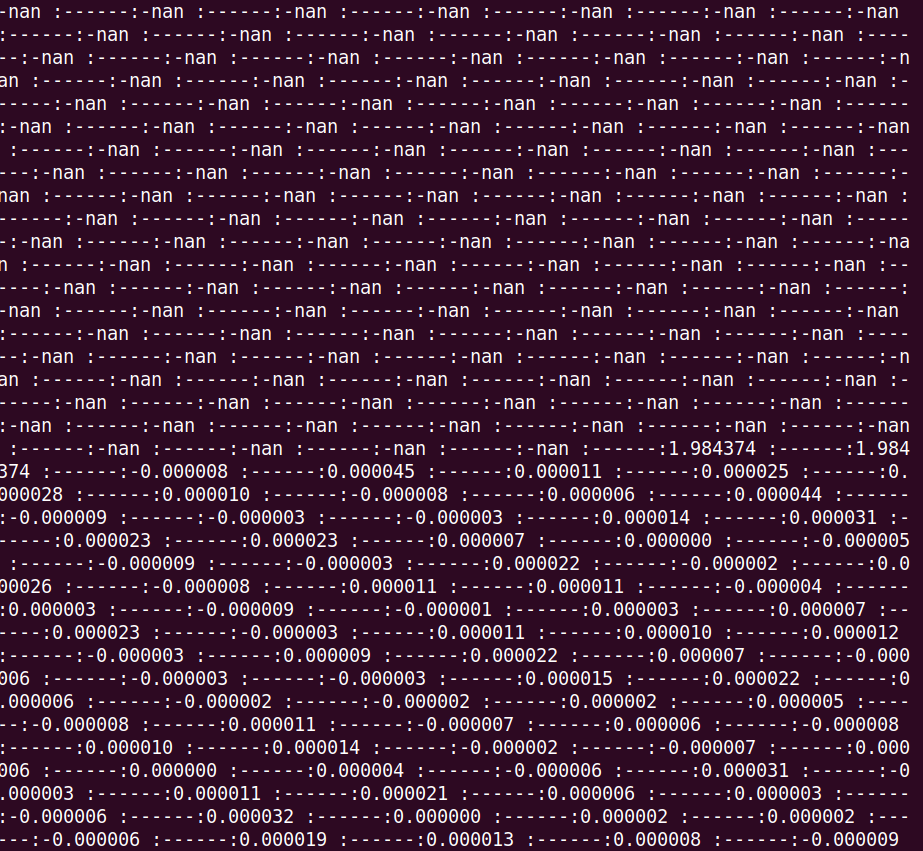

Hello! I would like to know how numbers are handled in the deep-learning softmax function. After performing three convolutions I have output values for the first 1000 positions (printed out). The weights of this convolution have shape (32, 3, 3, 16), followed by PReLU and max-pooling. The next operation is another convolution, of shape (2, 1, 1, 32), to obtain the scores. The output of this matrix is mostly NaN, plus some numbers that make general sense as scores (between 0 and 1). So my questions are:

- Where are these NaNs coming from? Are they expected?
- Should I write a small function to obtain the scores at each stride, instead of all scores for all convolutions?
- Could it be that my data is not normalized?

As a side note, I have a hunch that it could have something to do with weight ordering: the ESP lib uses (N H W C), while Caffe's output is (N C H W).

Input: (N C H W)

I converted the weights between these formats with NumPy:

1. First step: `np.moveaxis(in, 1, 3)`, giving (N W H C).
2. Second step: `np.moveaxis(in, 1, 2)`, giving (N H W C).
3. Flatten the array with `np.reshape(in, -1)`.
4. Load the flat array into a `dl_matrix3d_t` with a `for` loop.

Using `out = in.transpose(0, 2, 3, 1)` instead gives the same result.

As an example (this is from the input to the bounding-box layer): the original shape from Caffe is (4, 32, 1, 1), the new shape for the ESP lib is (4, 1, 1, 32), and I then flatten and load it.
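The reordering described here is what NumPy's `transpose(0, 2, 3, 1)` does; note that on an (N, C, H, W) array, `np.moveaxis(x, 1, 3)` on its own already yields (N, H, W, C). A minimal C sketch of the same mapping on flat buffers follows, assuming both buffers are contiguous and row-major; the function name `nchw_to_nhwc` is hypothetical. The element at (n, c, h, w) in Caffe order moves to index ((n·H + h)·W + w)·C + c.

```c
#include <assert.h>
#include <stddef.h>

/* Copy a contiguous (N, C, H, W) float array into (N, H, W, C) order,
 * i.e. the equivalent of numpy's x.transpose(0, 2, 3, 1).ravel(). */
void nchw_to_nhwc(const float *src, float *dst,
                  size_t N, size_t C, size_t H, size_t W)
{
    assert(src != dst);  /* out-of-place only */
    for (size_t n = 0; n < N; n++)
        for (size_t c = 0; c < C; c++)
            for (size_t h = 0; h < H; h++)
                for (size_t w = 0; w < W; w++) {
                    size_t from = ((n * C + c) * H + h) * W + w;
                    size_t to   = ((n * H + h) * W + w) * C + c;
                    dst[to] = src[from];
                }
}
```

For the (4, 32, 1, 1) bounding-box weights, H = W = 1, so both indices reduce to n·32 + c and the flat data is unchanged; an ordering mistake would only show up in the 3×3 kernels.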

OUTPUT FROM MODEL:

Here is the code for the network's layers. (I have removed the free calls and some print-out code to save space, but I can attach the whole file if more context is needed.)

```c
getpnet_conv1_0(filt_c1);   // pnetVals.h
getpnet_conv1_1(bias1);     // pnetvals.h
getpnet_prelu1_0(prelu1);   // pnetvals.h

// 3x3 convolution 1
out1 = dl_matrix3dff_conv_3x3(in, filt_c1, bias1, 1, 1, PADDING_SAME);

// ... (remaining layers that produce out3 omitted)

// score branch: score_filter is (2,1,1,32), a 1x1 kernel, yet a 3x3 routine is called
score_out = dl_matrix3dff_conv_3x3(out3, score_filter, score_bias, 1, 1, PADDING_SAME);

// bbox branch: bbox_filter is (4,1,1,32), also a 1x1 kernel
bbox_out = dl_matrix3dff_conv_3x3(out3, bbox_filter, bbox_bias, 1, 1, PADDING_SAME);
dl_matrix3d_free(out3);
dl_matrix3d_free(bbox_filter);
dl_matrix3d_free(bbox_bias);
printf("\n\nALLOCATING OUTPUT...\n\n\n");

//=========================================
// SET MEMORY //

// dl_matrix3d_free(score_out);

printf("\n\n");
// print at most 1500 scores, but never read past the end of score_out
int total = score_out->n * score_out->h * score_out->w * score_out->c;
int i;
printf("\n\nFROM PNET LOOP:\nscore: ");
for (i = 0; i < 1500 && i < total; i++) {
    printf("---:%f :---", score_out->item[i]);
}
printf("\n\n");

xSemaphoreGive(skeletonKey);
vTaskDelay(1000 / portTICK_PERIOD_MS);
vTaskDelete(NULL);
```
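To find the first layer that produces NaN, one option is to scan each intermediate output right after it is computed. Below is a minimal sketch over a plain float buffer; the helper name `count_nonfinite` is hypothetical. With the ESP types it would be called on `out->item` with `out->n * out->h * out->w * out->c` elements, assuming the matrix struct exposes those dimension fields.

```c
#include <assert.h>
#include <math.h>
#include <stddef.h>

/* Return how many of the first `count` floats are NaN or +/-inf.
 * If first_bad is non-NULL, it receives the index of the first such
 * value (or count when every value is finite). */
size_t count_nonfinite(const float *data, size_t count, size_t *first_bad)
{
    assert(data != NULL || count == 0);
    size_t bad = 0;
    size_t first = count;
    for (size_t i = 0; i < count; i++) {
        if (!isfinite(data[i])) {
            if (bad == 0) first = i;   /* remember the first offender */
            bad++;
        }
    }
    if (first_bad != NULL) *first_bad = first;
    return bad;
}
```

Calling it on `out1`, then on each later intermediate output, and finally on `score_out` would narrow down whether the NaNs appear in the earlier layers or only in the score convolution.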