Let me play this back to make sure I have understood correctly. I have written eq. 1.18 entirely in terms of N and u, with u capped at 4, as follows:

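Ht118f(N,t) = {

my(u, mulfact, A);

\\ u = |t|/N^2 (written as 4*Pi*|t|/(4*Pi*N^2)), capped at 4
u = if(4*Pi*abs(t)/(4*Pi*N^2)<4,4*Pi*abs(t)/(4*Pi*N^2), 4);

\\ prefactor in front of the sum (M0 must be defined elsewhere)
mulfact = exp(Pi^2*(-u*N^2)/64)*(M0((1+I*(4*Pi*N^2))/2))*N^(-(1+I*(4*Pi*N^2))/2)/(8*sqrt(1-I*u/(8*Pi)));

\\ sum over n, truncated at n = 1000
A=mulfact*sum(n=1,1000,exp(-u*(n-N)^2/(4*(1-I*u/(8*Pi)))-2*Pi*I*N^2*log(n/N)));

return(A);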

}

When I then run the root finding for N in [26, 34] and t in [-3000, -2980], I do get roots at approximately half-integer values of N, like these (columns: t, N):

-3000.00000000, 26.50506056

-3000.00000000, 27.50506039

-3000.00000000, 28.50646986

-3000.00000000, 29.50802722

-3000.00000000, 30.50974867

-3000.00000000, 31.51165483

-3000.00000000, 32.51377237

-3000.00000000, 33.51613622

-3000.00000000, 34.51879268

-2990.00000000, 26.50506056

-2990.00000000, 27.50513046

-2990.00000000, 28.50655129

-2990.00000000, 29.50812153

-2990.00000000, 30.50985767

-2990.00000000, 31.51178081

-2990.00000000, 32.51391822

-2990.00000000, 33.51630570

-2990.00000000, 34.51899072

-2980.00000000, 26.50506056

-2980.00000000, 27.50520138

-2980.00000000, 28.50663372

-2980.00000000, 29.50821699

-2980.00000000, 30.50996805

-2980.00000000, 31.51190842

-2980.00000000, 32.51406604

-2980.00000000, 33.51647757

-2980.00000000, 34.51919170

The same, but now for N in [100, 110], t in [-3000, -2980]:

-3000.00000000, 100.42704250

-3000.00000000, 101.42812900

-3000.00000000, 102.42922063

-3000.00000000, 103.43031384

-3000.00000000, 104.43140573

-3000.00000000, 105.49725631

-3000.00000000, 106.43357639

-3000.00000000, 107.99954423

-3000.00000000, 109.17267055

-3000.00000000, 110.17303455

-2990.00000000, 100.42706678

-2990.00000000, 101.42815533

-2990.00000000, 102.42924850

-2990.00000000, 103.43034285

-2990.00000000, 104.43143553

-2990.00000000, 105.49729523

-2990.00000000, 106.43360700

-2990.00000000, 107.99958021

-2990.00000000, 109.17270673

-2990.00000000, 110.17306971

-2980.00000000, 100.42709133

-2980.00000000, 101.42818186

-2980.00000000, 102.42927653

-2980.00000000, 103.43037196

-2980.00000000, 104.43146540

-2980.00000000, 105.49733403

-2980.00000000, 106.43363763

-2980.00000000, 107.99961606

-2980.00000000, 109.17274278

-2980.00000000, 110.17310474

The roots are no longer at half-integers, but the vertical line pattern clearly remains.

EDIT: And for N in [10000, 10010], t in [-3000, -2980]. It seems that roots near rational values of N are preferred.

-3000.00000000, 10001.74999702

-3000.00000000, 10004.99999730

-3000.00000000, 10005.12499807

-3000.00000000, 10009.40234201

-3000.00000000, 10010.99999869

-2990.00000000, 10001.74999702

-2990.00000000, 10004.99999731

-2990.00000000, 10005.12499808

-2990.00000000, 10009.40234201

-2990.00000000, 10010.99999867

-2980.00000000, 10001.74999703

-2980.00000000, 10004.99999733

-2980.00000000, 10005.12499808

-2980.00000000, 10009.40234200

-2980.00000000, 10010.99999865

Before I run any plots, is this what you are after?
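
For reference, here is the `Ht118f` code above transcribed term by term into a formula. This is a reading aid only: $M_0$ stands for whatever the `M0` routine computes, and the sum is truncated at $n = 1000$ exactly as in the code.

$$
u = \min\!\left(\frac{|t|}{N^2},\, 4\right), \qquad
A(N,t) = \frac{e^{-\pi^2 u N^2/64}\, M_0\!\left(\frac{1+4\pi i N^2}{2}\right) N^{-\frac{1+4\pi i N^2}{2}}}{8\sqrt{1-\frac{iu}{8\pi}}}
\sum_{n=1}^{1000} \exp\!\left(-\frac{u\,(n-N)^2}{4\left(1-\frac{iu}{8\pi}\right)} - 2\pi i N^2 \log\frac{n}{N}\right)
$$

Since $4\pi|t|/(4\pi N^2) = |t|/N^2$, the cap $u = 4$ is active exactly when $N \le \sqrt{|t|}/2$, which is about 27.4 for $t = -3000$. That would be consistent with the first root, 26.50506056, being identical for all three values of $t$ in the first list, while the roots from 27.5 upward shift slightly with $t$.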

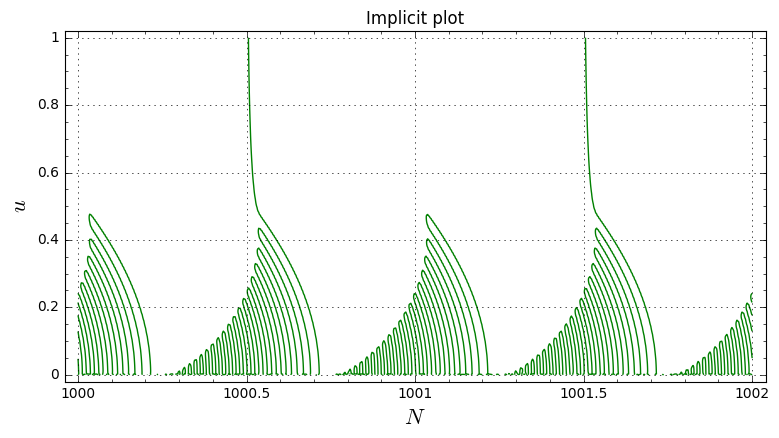

A multiplier has been normalized for this plot.


Grateful for any help to properly evaluate the sequence of equations on this Wiki page: http://michaelnielsen.org/polymath1/index.php?title=Polymath15_test_problem#Large_negative_values_of_.5Bmath.5Dt.5B.2Fmath.5D

Below is the pari/gp code I have used so far. At the end of the code is a simple rootfinder that should (approximately) find this sequence of roots:

However, from eq 318 onwards it finds many more roots (also at other values of t, e.g. -100). I have experimented with the integration limits, the integral's fixed parameter, pari's real precision setting and the size of the sums, but without success so far.

I have re-injected some of the non-zero multiplication factors; these can be switched on/off if needed.

One thing I noticed is that after eq 307 the function doesn't seem to be real anymore (see data at the end). I am not sure whether this matters, though, since I use the real part of Ht for root finding.
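
To make the root-finding step concrete, here is a minimal sketch of a sign-change scan in pari/gp. It is not the rootfinder from my code: `ftest` and `scanroots` are hypothetical names, `ftest` is only a stand-in with roots at the half-integers (swap in something like `real(Ht118f(N,t))`), and the step size 0.13 is an arbitrary choice that keeps grid points off the roots.

```gp
\\ Hypothetical stand-in for real(Ht(N,t)); it vanishes at half-integer N.
ftest(N, t) = cos(Pi*N);

\\ Scan [a,b] in steps of h, bracket each sign change of f(.,t),
\\ and refine the bracket with the built-in solve().
scanroots(f, t, a, b, h) =
{
  my(res = List(), x0 = a, y0 = f(a, t), x1, y1);
  while(x0 < b,
    x1 = min(x0 + h, b);
    y1 = f(x1, t);
    if(y0 * y1 < 0,
      listput(res, solve(X = x0, x1, f(X, t))));
    x0 = x1; y0 = y1);
  return(Vec(res));
}

\\ Print the roots as "t, N" pairs, as in the listings above.
r = scanroots(ftest, -3000, 26, 30, 0.13);
for(i = 1, #r, printf("%.8f, %.8f\n", -3000, r[i]));
```

A scan like this only sees roots where the real part changes sign between two grid points, so two roots closer together than the step h (or a root of even multiplicity) would be missed, and a sign change of the real part alone does not guarantee a zero of a function that has become complex.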