ConfigILM

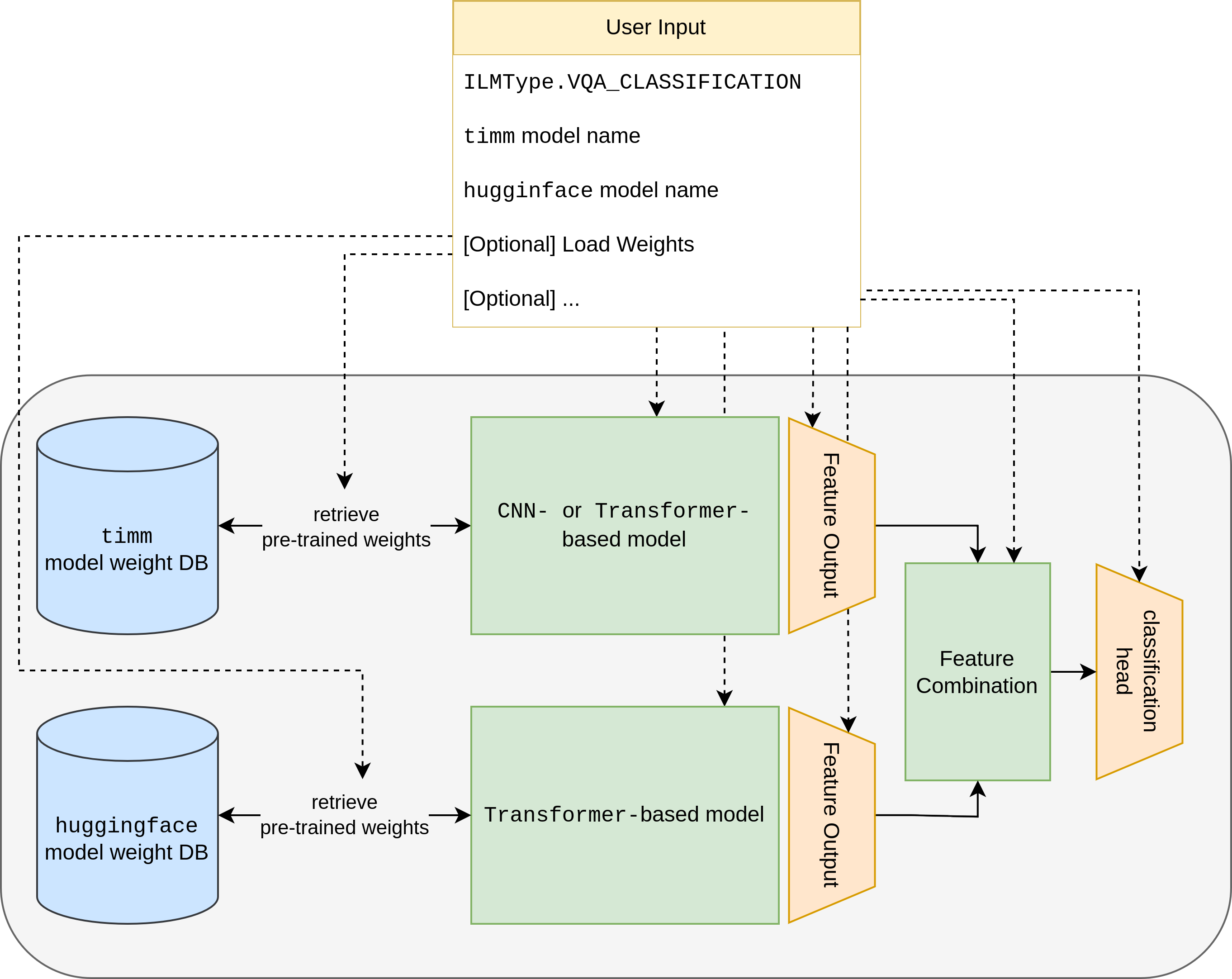

ConfigILM is a state-of-the-art Python library for rapidly and iteratively developing image and language models within the PyTorch framework.

This open-source library provides a convenient implementation for seamlessly combining models from two of the most popular PyTorch libraries, the highly regarded timm and huggingface🤗.

With an extensive collection of nearly 1,000 image models and over 100 language models, plus an additional 120,000 community-uploaded models in the huggingface🤗 model collection, ConfigILM offers a diverse range of model combinations that require minimal implementation effort.

Its vast array of models makes it an unparalleled resource for developers seeking to create innovative and sophisticated image-language models with ease.

Furthermore, ConfigILM offers a user-friendly interface that makes it easy to exchange model components, opening up a wide range of possibilities for creating novel models.
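To make the idea of exchanging model components concrete, the following plain-Python sketch shows a model whose image encoder, text encoder, and number of output classes are chosen through a configuration object. It is not ConfigILM's API: `ModelConfig`, `ImageLanguageModel`, and the encoder registries are hypothetical names, and the toy encoders merely stand in for timm image backbones and huggingface🤗 text encoders. Fusing the two feature vectors by element-wise multiplication is an assumption made for simplicity; it is one common fusion choice for visual question answering.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical registries of interchangeable components. In a real setup these
# would wrap timm image backbones and huggingface text encoders; here they are
# toy functions that map raw inputs to fixed-size (length 4) feature vectors.
IMAGE_ENCODERS: Dict[str, Callable[[List[float]], Vector]] = {
    "mean_pool": lambda pixels: [sum(pixels) / len(pixels)] * 4,
    "max_pool": lambda pixels: [max(pixels)] * 4,
}
TEXT_ENCODERS: Dict[str, Callable[[str], Vector]] = {
    "length": lambda text: [float(len(text))] * 4,
    "word_count": lambda text: [float(len(text.split()))] * 4,
}


@dataclass
class ModelConfig:
    """Hypothetical config naming which components to combine."""
    image_encoder: str
    text_encoder: str
    num_classes: int


class ImageLanguageModel:
    """Encodes image and question separately, fuses the features by
    element-wise multiplication, and scores each class with a fixed head."""

    def __init__(self, config: ModelConfig):
        # Components are looked up by name, so swapping them is a config change.
        self.encode_image = IMAGE_ENCODERS[config.image_encoder]
        self.encode_text = TEXT_ENCODERS[config.text_encoder]
        # Toy linear head: class k gets weight (k + 1) on every feature.
        self.head = [[float(k + 1)] * 4 for k in range(config.num_classes)]

    def __call__(self, pixels: List[float], question: str) -> Vector:
        img = self.encode_image(pixels)
        txt = self.encode_text(question)
        fused = [a * b for a, b in zip(img, txt)]
        # One score (logit) per class.
        return [sum(w * f for w, f in zip(row, fused)) for row in self.head]


if __name__ == "__main__":
    pixels = [0.0, 1.0, 2.0, 3.0]
    question = "is there water"
    model = ImageLanguageModel(ModelConfig("mean_pool", "word_count", num_classes=3))
    print(model(pixels, question))  # [18.0, 36.0, 54.0]
    # Exchange the image encoder by changing only the configuration.
    model = ImageLanguageModel(ModelConfig("max_pool", "word_count", num_classes=3))
    print(model(pixels, question))  # [36.0, 72.0, 108.0]
```

Switching from `"mean_pool"` to `"max_pool"` touches only the configuration, not the model class; keeping the choice of components in data rather than code is what makes trying many combinations cheap.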

Additionally, the package offers pre-built and throughput-optimized PyTorch dataloaders and Lightning datamodules, which enable developers to seamlessly test their models in diverse application areas, such as Remote Sensing (RS).

Moreover, the comprehensive documentation of ConfigILM includes installation instructions, tutorial examples, and a detailed overview of the framework's interface, ensuring a smooth and hassle-free development experience.

For detailed information, please see the ConfigILM publication and the documentation.

ConfigILM is released under the MIT Software License.

Contributing

As an open-source project in a developing field, we are open to contributions. They can be in the form of a new or improved feature or better documentation.

For detailed information on how to contribute, see here.

Citation

If you use this work, please cite:

@article{hackel2024configilm,
  title={ConfigILM: A general purpose configurable library for combining image and language models for visual question answering},
  author={Hackel, Leonard and Clasen, Kai Norman and Demir, Beg{\"u}m},
  journal={SoftwareX},
  volume={26},
  pages={101731},
  year={2024},
  publisher={Elsevier}
}

and the version of the software you used, e.g., the current version with:

@software{lhackel_tub_2024_13269357,
  author = {lhackel-tub and Kai Norman Clasen},
  title = {lhackel-tub/ConfigILM: v0.6.9},
  month = aug,
  year = 2024,
  publisher = {Zenodo},
  version = {v0.6.9},
  doi = {10.5281/zenodo.13269357},
  url = {https://doi.org/10.5281/zenodo.13269357}
}

Acknowledgement

This work is supported by the European Research Council (ERC) through the ERC-2017-STG BigEarth Project under Grant 759764, by the European Space Agency through the DA4DTE (Demonstrator precursor Digital Assistant interface for Digital Twin Earth) project, and by the German Ministry for Economic Affairs and Climate Action through the AI-Cube Project under Grant 50EE2012B. Furthermore, we gratefully acknowledge funding from the German Federal Ministry of Education and Research under the grant BIFOLD24B. We also thank EO-Lab for giving us access to their GPUs.