I think I may have figured it out.

If I don't convert the coordinate system to the drone body frame $b$ (the second image above), I get the correct result. It looked incomplete in the picture above only because the stone on the right is very close to the camera (its corresponding RGB image is below), so it occupies the region of the point cloud very close to the origin. The point cloud is actually correct and complete.

So why does the FAQ on the site say that we need to use this transform to convert to the drone body frame? It seems like a redundant operation (perhaps my ignorance is leading me to the wrong conclusion; let me know if so).

These coordinates can be converted to the drone body frame $b$ simply by the following transformation: $$(x_b,y_b,z_b)=(z_c,y_c,x_c)$$
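One way to sanity-check this formula is to write it as a 3×3 permutation matrix and look at its determinant. Below is a minimal NumPy sketch (the function and variable names are hypothetical) that applies $(x_b,y_b,z_b)=(z_c,y_c,x_c)$ exactly as written. Its determinant is $-1$, so it is a reflection rather than a rotation and would mirror the point cloud. For comparison, *assuming* a camera frame with x right, y down, z forward and a body frame with x forward, y right, z down, the rotation between them would be $(x_b,y_b,z_b)=(z_c,x_c,y_c)$, with determinant $+1$.

```python
import numpy as np

# Permutation matrix for (x_b, y_b, z_b) = (z_c, y_c, x_c), as written above.
P_QUOTED = np.array([[0, 0, 1],
                     [0, 1, 0],
                     [1, 0, 0]], dtype=float)

# Hypothetical alternative, assuming camera axes x-right, y-down, z-forward
# and body axes x-forward, y-right, z-down: (x_b, y_b, z_b) = (z_c, x_c, y_c).
P_ROTATION = np.array([[0, 0, 1],
                       [1, 0, 0],
                       [0, 1, 0]], dtype=float)

def camera_to_body(points_c: np.ndarray, perm: np.ndarray) -> np.ndarray:
    """Apply a 3x3 axis permutation to an (N, 3) array of camera-frame points."""
    return points_c @ perm.T

p_c = np.array([[1.0, 2.0, 3.0]])           # x_c = 1, y_c = 2, z_c = 3
print(camera_to_body(p_c, P_QUOTED))        # [[3. 2. 1.]]
print(round(np.linalg.det(P_QUOTED)))       # -1 (reflection)
print(round(np.linalg.det(P_ROTATION)))     # 1 (proper rotation)
```

A point cloud viewer shows the same shape under any proper rotation of the axes, so a mirrored-looking result after the conversion is consistent with a determinant of $-1$.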

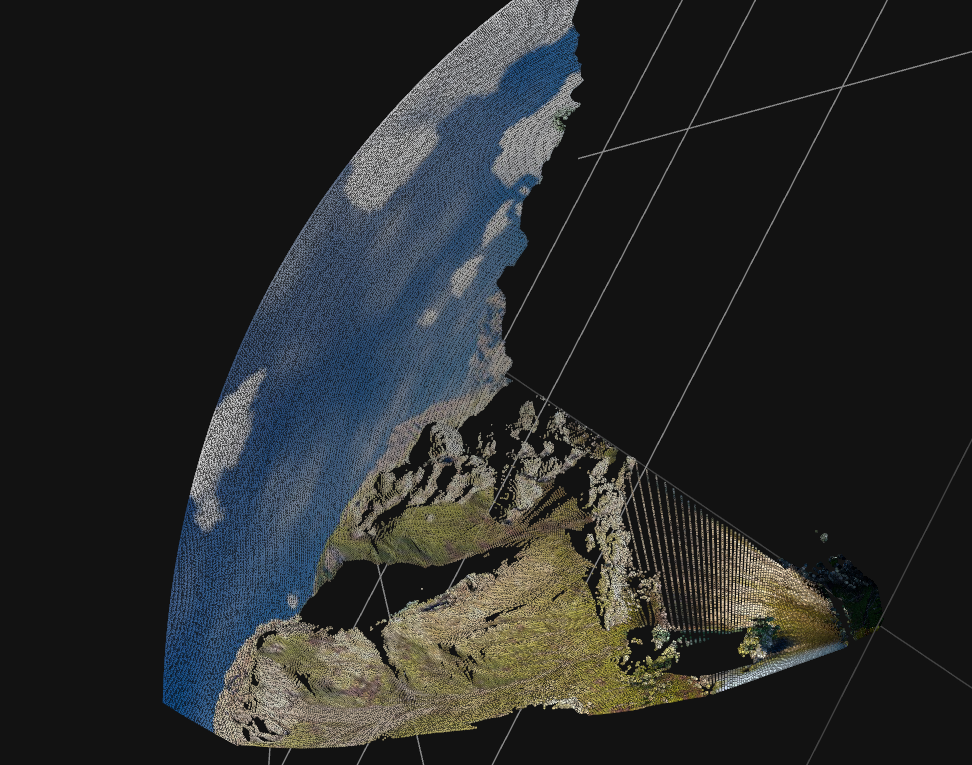

I also converted the point cloud from the camera coordinate system to the world coordinate system. The results are the same as in the two screenshots above: the result after converting to the drone body frame is very strange.
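Going from the body frame to the world frame is a rigid transform $p_w = R_{wb}\,p_b + t_{wb}$. Below is a minimal NumPy sketch, assuming the drone pose is already available as a 3×3 rotation matrix `R_wb` (body to world) and a position `t_wb`. These names are hypothetical, and reading them from the MidAir trajectory data (for example, converting a stored quaternion) is not shown. If the body-frame step is skipped, `R_wb` has to be replaced by the full camera-to-world rotation.

```python
import numpy as np

def body_to_world(points_b: np.ndarray, R_wb: np.ndarray, t_wb: np.ndarray) -> np.ndarray:
    """Map (N, 3) body-frame points to the world frame: p_w = R_wb @ p_b + t_wb.

    R_wb and t_wb are hypothetical inputs; obtaining them from the dataset's
    pose data is outside this sketch.
    """
    return points_b @ R_wb.T + t_wb

# Example: drone yawed 90 degrees about z and positioned at (10, 0, 0).
R_wb = np.array([[0.0, -1.0, 0.0],
                 [1.0,  0.0, 0.0],
                 [0.0,  0.0, 1.0]])
t_wb = np.array([10.0, 0.0, 0.0])
print(body_to_world(np.array([[1.0, 0.0, 0.0]]), R_wb, t_wb))  # [[10.  1.  0.]]
```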

I tried another example, Kite_training/sunset/color_left/trajectory_1001/000000.JPEG. Here is the original image:

And here are the results with (top) and without (bottom) the conversion to the drone body frame:

Thank you for creating and releasing this dataset to the public, great work!

I'm confused about projecting pixels into the world coordinate system using depth. For simplicity, I will first project the points into the camera (drone) coordinate system. In general, if depth is encoded the standard way (as the z-coordinate of each point, rather than as the "euclidean distance of the objects to the focal point" used in MidAir), going from uv to camera coordinates is simple:
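For reference, this is the usual pinhole back-projection when depth stores the z-coordinate: $x = (u - c_x)\,z/f$, $y = (v - c_y)\,z/f$. A minimal NumPy sketch; the intrinsics `f`, `cx`, `cy` are assumed symbols, not values taken from the post.

```python
import numpy as np

def backproject_zdepth(depth_z: np.ndarray, f: float, cx: float, cy: float) -> np.ndarray:
    """Back-project a z-depth map (H, W) to an (H*W, 3) camera-frame point cloud."""
    h, w = depth_z.shape
    # u varies along columns, v along rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_z / f
    y = (v - cy) * depth_z / f
    return np.stack([x, y, depth_z], axis=-1).reshape(-1, 3)
```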

But what should I do in MidAir? Following the instructions in the FAQ on the website, I implemented it like this, but it doesn't work:
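When the depth map stores the Euclidean distance $d$ to the focal point, each pixel's ray has to be normalized before scaling, which is equivalent to converting to z-depth first via $z = d\,f/\sqrt{(u-c_x)^2+(v-c_y)^2+f^2}$. Below is a minimal NumPy sketch under stated assumptions: the camera frame is x right, y down, z forward, and the default intrinsics assume a 1024×1024 image with a 90° field of view, giving $f = c_x = c_y = 512$. Please check these values against the dataset documentation. The function name is hypothetical.

```python
import numpy as np

def backproject_euclidean(dist: np.ndarray, f: float = 512.0,
                          cx: float = 512.0, cy: float = 512.0) -> np.ndarray:
    """Back-project a map of Euclidean distances to the focal point.

    Returns an (H*W, 3) camera-frame point cloud (x right, y down, z forward
    assumed). Default intrinsics assume a 1024x1024 image with a 90-degree FOV.
    """
    h, w = dist.shape
    u, v = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float))
    # Unnormalized ray through each pixel; its z component is 1.
    rays = np.stack([(u - cx) / f, (v - cy) / f, np.ones_like(u)], axis=-1)
    # Normalize to unit length, then scale by the stored distance.
    rays /= np.linalg.norm(rays, axis=-1, keepdims=True)
    return (rays * dist[..., None]).reshape(-1, 3)
```

The normalization is what distinguishes this from the z-depth case: multiplying `(u - cx) / f` directly by the stored distance, as one would with z-depth, pushes off-center points too far away, and the error grows toward the image edges.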

The projected points look like this, which doesn't look right:

If I don't convert the coordinate system to the drone body frame $b$, it seems a little better, but it still looks weird and incomplete:

Am I missing something, or is there something wrong with my implementation? You have detailed the projection to the world coordinate system in the FAQ; could you provide a verified implementation of it? I think it would be very helpful. Thanks a lot!