Φ-SfT: Shape-from-Template with a Physics-Based Deformation Model


Navami Kairanda, Edith Tretschk, Mohamed Elgharib, Christian Theobalt, Vladislav Golyanik

Max Planck Institute for Informatics

in CVPR 2022

This is the official implementation of the paper "Φ-SfT: Shape-from-Template with a Physics-Based Deformation Model".

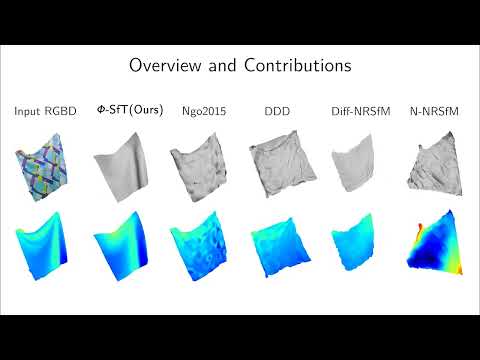

What is Φ-SfT?

Φ-SfT is an analysis-by-synthesis method that reconstructs a temporally coherent sequence of 3D shapes from a monocular RGB video, given a single 3D template of the surface in the initial frame. The method models deformations as the response of an elastic surface to the external and internal forces acting on it. Starting from the 3D template, it uses a physics simulator to generate the deformed future states, which are treated as the reconstructed surfaces. The set of physical parameters Φ that describes these challenging surface deformations is optimised by minimising a dense per-pixel photometric energy: we backpropagate through the differentiable renderer and the differentiable physics simulator to obtain the optimal set of physical parameters Φ.
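To make the analysis-by-synthesis idea concrete, here is a deliberately tiny sketch in plain Python. It is not the Φ-SfT implementation: a single point falling under an unknown gravity g stands in for the elastic surface, a pinhole projection stands in for the differentiable renderer, a squared image-space error stands in for the photometric energy, and central finite differences stand in for backpropagation. All function names are hypothetical.

```python
# Toy analysis-by-synthesis loop (illustrative only, not the Φ-SfT code):
# simulate -> render -> compare with observations -> update the physical parameter.

def simulate(gravity, n_steps=50, dt=0.05):
    """Semi-implicit Euler for a point starting at rest; returns its height per frame."""
    height, velocity = 0.0, 0.0
    trajectory = []
    for _ in range(n_steps):
        velocity -= dt * gravity  # update velocity first (semi-implicit Euler)
        height += dt * velocity
        trajectory.append(height)
    return trajectory


def render(trajectory, focal=2.0, depth=5.0):
    """Pinhole projection of each position (at a fixed depth) to an image coordinate."""
    return [focal * y / depth for y in trajectory]


def energy(gravity, observed):
    """Mean squared difference between rendered and observed image coordinates."""
    predicted = render(simulate(gravity))
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed)


def fit_gravity(observed, init=1.0, lr=1.0, iters=200, eps=1e-4):
    """Gradient descent on the physical parameter; central differences replace autodiff."""
    g = init
    for _ in range(iters):
        grad = (energy(g + eps, observed) - energy(g - eps, observed)) / (2 * eps)
        g -= lr * grad
    return g


if __name__ == "__main__":
    observed = render(simulate(9.81))  # synthetic "video" generated from a known parameter
    print(f"recovered gravity: {fit_gravity(observed):.4f}")
```

Because the simulated heights are linear in g, the energy here is a quadratic in g and plain gradient descent recovers the generating value (about 9.81); the real problem, with a cloth simulator and a photometric energy, is far less benign.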

Installation

Clone this repository to ${code_root}. The following command sets up a conda environment with all Φ-SfT dependencies:

conda env create -f ${code_root}/phi_sft/environment.yml

conda activate phi_sft

Φ-SfT uses a physics simulator as a deformation model prior; this repository packages and redistributes such a differentiable physics simulator. First, build the arcsim dependencies (ALGLIB, JsonCpp, and TAUCS) with

cd ${code_root}/arcsim/dependencies; make

This assumes that the following libraries are installed. On Linux, you should be able to get all of them through your distribution's package manager.

- BLAS

- Boost

- freeglut

- gfortran

- LAPACK

- libpng
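A quick, heuristic way to check for these prerequisites from Python is sketched below. The mapping from package to library name is an assumption (Boost is largely header-only, so probing boost_system is only a hint), and finding a shared library does not guarantee that the development headers needed by make are installed.

```python
import ctypes.util
import shutil

# Hypothetical mapping from the prerequisites above to the names that
# ctypes.util.find_library() searches for (libblas.so, liblapack.so, ...).
SHARED_LIBRARIES = {
    "BLAS": "blas",
    "Boost": "boost_system",  # heuristic: much of Boost is header-only
    "freeglut": "glut",
    "LAPACK": "lapack",
    "libpng": "png",
}


def check_prerequisites():
    """Return {name: bool} telling whether each prerequisite could be located."""
    found = {name: ctypes.util.find_library(lib) is not None
             for name, lib in SHARED_LIBRARIES.items()}
    # gfortran is a compiler, so look for the executable on PATH instead.
    found["gfortran"] = shutil.which("gfortran") is not None
    return found


if __name__ == "__main__":
    for name, ok in sorted(check_prerequisites().items()):
        print(f"{name:10s} {'found' if ok else 'MISSING'}")
```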

After setting up arcsim, compile the differentiable physics simulator by going back to the parent directory, updating the paths in setup.py, and running 'make':

cd ${code_root}; make

To verify that the simulator was installed successfully, check that both modules import in Python without errors:

import torch

import arcsim

Running code

Reconstructing 3D shapes from monocular sequences

Download the Φ-SfT dataset and extract it to ${data_root}. To reconstruct the real sequence S3, update the paths (and other parameters) in the experiment configuration file ${code_root}/phi_sft/config/expt_real_s3.ini, the data sequence configuration file ${data_root}/real/S3/preprocess.ini, and the simulator configuration file ${data_root}/real/S3/sim_conf.json. Then run:

sequence_type=real

sequence_name=s3

cd ${code_root}/phi_sft

conda activate phi_sft

python -u reconstruct_surfaces_from_sequence.py ${data_root}/${sequence_type} ${code_root}/phi_sft/config/expt_${sequence_type}_${sequence_name}.ini

In most cases, reconstruction requires several hundred iterations, which take 16 to 24 hours on an Nvidia RTX 8000 GPU for roughly 50 frames of 1920x1080 images and a template mesh with ~300 vertices. Every i_save iterations, the code saves a checkpoint, evaluates the current reconstruction quantitatively against the ground truth, and stores the result in log.txt.
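The checkpointing cadence described above can be pictured with the following hypothetical loop. It is a sketch, not the structure of reconstruct_surfaces_from_sequence.py: step, save_checkpoint, and evaluate are placeholder callbacks, the log-line format is invented, and start_iter mimics resuming from a saved iteration.

```python
import os
import tempfile


def run_reconstruction(n_iters, i_save, step, save_checkpoint, evaluate,
                       log_path, start_iter=0):
    """Hypothetical optimisation loop: checkpoint and evaluate every i_save iterations.

    step(it) performs one optimisation step, save_checkpoint(it) persists the
    current physical parameters, and evaluate(it) returns an error against the
    ground truth. Returns the iterations at which checkpoints were written.
    """
    saved = []
    with open(log_path, "a") as log:
        for it in range(start_iter + 1, n_iters + 1):
            step(it)
            if it % i_save == 0:
                save_checkpoint(it)
                error = evaluate(it)
                log.write(f"iteration {it}: error {error:.6f}\n")
                saved.append(it)
    return saved


def no_op(it):
    """Placeholder callback used in the demo below."""
    return None


if __name__ == "__main__":
    log_file = os.path.join(tempfile.mkdtemp(), "log.txt")
    print(run_reconstruction(300, 100, no_op, no_op, lambda it: 1.0 / it, log_file))
```

Passing start_iter=100 runs only iterations 101 onward, which is how a resumed run picks up from a checkpoint at iteration 100 in this sketch.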

To resume training from the last checkpoint (e.g. iteration 100), set reload=True and i_reload=100 in the experiment configuration file ${code_root}/phi_sft/config/expt_real_s3.ini and rerun the reconstruct_surfaces_from_sequence.py command above.
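If you prefer to edit the experiment configuration from a script, Python's configparser can overwrite existing keys such as i_reload. This sketch assumes the .ini file uses standard section headers (the section name "experiment" in the demo is made up, as is the function name), and configparser drops comments when it writes the file back.

```python
import configparser
import os
import tempfile


def update_experiment_config(config_path, updates):
    """Overwrite existing keys (e.g. i_reload) in every INI section that defines them.

    Raises KeyError for a key that no section defines, so typos are not silently added.
    """
    cfg = configparser.ConfigParser()
    if not cfg.read(config_path):
        raise FileNotFoundError(config_path)
    for key, value in updates.items():
        sections = [s for s in cfg.sections() if cfg.has_option(s, key)]
        if not sections:
            raise KeyError(f"{key!r} not found in {config_path}")
        for section in sections:
            cfg.set(section, key, str(value))
    with open(config_path, "w") as f:
        cfg.write(f)


if __name__ == "__main__":
    # Demo on a throwaway file; the section name "experiment" is hypothetical.
    path = os.path.join(tempfile.mkdtemp(), "expt_demo.ini")
    with open(path, "w") as f:
        f.write("[experiment]\nreload = False\ni_reload = 0\n")
    update_experiment_config(path, {"i_reload": 100})
    with open(path) as f:
        print(f.read())
```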

Preparing real sequences

If you would like to try Φ-SfT on your own dataset, create a folder ${sequence_name} in ${data_root}/real with the following structure:

- rgbs: monocular RGB images of a deforming surface

- point_clouds: point cloud for the 3D template of the surface corresponding to the first frame

- masks: segmentation masks

- preprocess.ini: configuration parameters for the sequence

- camera.json: camera intrinsics, assuming the camera coordinate system of the Kinect SDK

- sim_conf.json: simulation configuration (can be kept unchanged)
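Before running the preprocessing scripts, it can help to check that a new sequence folder contains every entry listed above. The following is a small hypothetical helper; it only checks presence, not the contents or naming of the images inside the folders.

```python
import tempfile
from pathlib import Path

# Entries listed above for ${data_root}/real/${sequence_name}.
REQUIRED_DIRS = ("rgbs", "point_clouds", "masks")
REQUIRED_FILES = ("preprocess.ini", "camera.json", "sim_conf.json")


def missing_entries(sequence_dir):
    """Return the required folders and files that are absent from a real sequence folder."""
    root = Path(sequence_dir)
    missing = [d for d in REQUIRED_DIRS if not (root / d).is_dir()]
    missing += [f for f in REQUIRED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    # Demo: an incomplete, throwaway sequence folder.
    demo = Path(tempfile.mkdtemp())
    (demo / "rgbs").mkdir()
    (demo / "masks").mkdir()
    (demo / "preprocess.ini").write_text("")
    print(missing_entries(demo))
```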

Φ-SfT uses blurred versions of the ground-truth segmentation masks for its silhouette loss; they can be generated with

python preprocess_real_sequence.py ${data_root}/real ${sequence_name} blur_masked_images

Given the Kinect RGB image and the point cloud of the template, the generate_template_surface step below creates a 3D template mesh with Poisson surface reconstruction. The corresponding texture map for the template is obtained by projecting the vertices of the template mesh onto the image plane of the first frame using the known camera intrinsics.
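The vertex-to-texture projection can be sketched with a pinhole camera model. This is an illustration, not the code in preprocess_real_sequence.py: it assumes an x-right, y-down, z-forward camera frame (the Azure Kinect SDK convention), intrinsics fx, fy, cx, cy in pixels, ignores lens distortion and half-pixel offsets, and flips v so that UVs start at the bottom-left as OBJ texture coordinates do. The function name is hypothetical.

```python
def project_to_uv(vertices, fx, fy, cx, cy, width, height):
    """Project camera-space vertices with a pinhole model and normalise to [0, 1] UVs.

    Assumes x right, y down, z forward; v is flipped so (0, 0) is the bottom-left
    corner of the image.
    """
    uvs = []
    for x, y, z in vertices:
        if z <= 0:
            raise ValueError("vertex lies behind the camera")
        u_px = fx * x / z + cx  # pixel column
        v_px = fy * y / z + cy  # pixel row (grows downwards)
        uvs.append((u_px / width, 1.0 - v_px / height))
    return uvs


if __name__ == "__main__":
    # A vertex on the optical axis maps to the image centre.
    print(project_to_uv([(0.0, 0.0, 1.0)], 1000.0, 1000.0, 960.0, 540.0, 1920, 1080))
```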

python preprocess_real_sequence.py ${data_root}/real ${sequence_name} generate_template_surface

The generated template can be cleaned with a mesh editing tool such as Blender and saved as ${data_root}/real/${sequence_name}/templates/template_mesh_final.obj. The following command prepares the template in the format expected by the physics simulator used in Φ-SfT. It uses iterative closest point (ICP) to determine the initial rigid pose of the template relative to a flat sheet in the XY plane (as required by arcsim). If the estimated pose is inaccurate, adjust the pose estimation parameters in ${data_root}/real/${sequence_name}/preprocess.ini.

python preprocess_real_sequence.py ${data_root}/real ${sequence_name} clean_template_surface

Now your data sequence is ready for running Φ-SfT! Create an experiment configuration file in ${code_root}/phi_sft/config and follow the instructions in the previous section. If the generated template is not positively oriented, reconstruct_surfaces_from_sequence.py may fail with the error 'Error: TAUCS failed with return value -1'. This can be addressed by setting invert_faces_orientation=True in preprocess.ini and rerunning the last template-processing step (clean_template_surface).
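For intuition about what inverting face orientation means, the sketch below flips the winding order of triangles, which negates their normals. It is an assumption that invert_faces_orientation does exactly this, and the helper names, including the +Z check for a sheet lying in the XY plane, are hypothetical.

```python
def face_normal(v0, v1, v2):
    """Unnormalised normal (v1 - v0) x (v2 - v0); its sign follows the winding order."""
    ax, ay, az = (v1[i] - v0[i] for i in range(3))
    bx, by, bz = (v2[i] - v0[i] for i in range(3))
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def invert_faces(faces):
    """Reverse the winding order of every triangle, which negates every face normal."""
    return [(a, c, b) for a, b, c in faces]


def faces_point_up(vertices, faces):
    """Hypothetical check for a sheet in the XY plane: do normals point along +Z overall?"""
    total_z = sum(face_normal(*(vertices[i] for i in face))[2] for face in faces)
    return total_z > 0


if __name__ == "__main__":
    square = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
    tris = [(0, 1, 2), (0, 2, 3)]
    print(faces_point_up(square, tris), faces_point_up(square, invert_faces(tris)))
```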

Generating synthetic sequences

If you would like to generate synthetic datasets with the physics simulator, create a folder ${sequence_name} in ${data_root}/synthetic with the following structure:

- preprocess.ini: configuration parameters for the sequence

- camera.json: camera intrinsics, assuming the camera coordinate system of PyTorch3D

- sim_conf.json: simulation configuration; modify the forces (gravity, wind) and the material here to generate interesting surface deformations
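sim_conf.json can also be edited programmatically, for example to sweep external forces. The sketch below assumes ARCSim-style keys, a top-level "gravity" 3-vector and a "wind" object holding a "velocity" 3-vector; verify these against your own file, since the key names and the function name are assumptions.

```python
import json
import os
import tempfile


def set_external_forces(conf_path, gravity, wind_velocity):
    """Overwrite gravity and wind velocity in a simulator configuration file.

    Assumes ARCSim-style keys ("gravity", "wind" -> "velocity"); all other
    keys, including other wind parameters, are left untouched.
    """
    with open(conf_path) as f:
        conf = json.load(f)
    conf["gravity"] = list(gravity)
    conf.setdefault("wind", {})["velocity"] = list(wind_velocity)
    with open(conf_path, "w") as f:
        json.dump(conf, f, indent=4)
    return conf


if __name__ == "__main__":
    # Demo on a throwaway file with made-up starting values.
    path = os.path.join(tempfile.mkdtemp(), "sim_conf.json")
    with open(path, "w") as f:
        json.dump({"gravity": [0, 0, -9.8], "wind": {"velocity": [0, 0, 0]}}, f)
    print(set_external_forces(path, gravity=(0, 0, -9.8), wind_velocity=(2, 0, 0)))
```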

The following commands generate a challenging synthetic surface sequence and render the corresponding image sequence:

python generate_synthetic_sequence.py ${data_root}/synthetic ${sequence_name} generate_surfaces

python generate_synthetic_sequence.py ${data_root}/synthetic ${sequence_name} render_surfaces

Now that your data sequence is ready for running Φ-SfT, create an experiment configuration file in ${code_root}/phi_sft/config and follow the instructions in Reconstructing 3D shapes from monocular sequences.
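Real sequences describe the camera in the Kinect SDK coordinate system, whereas synthetic ones use PyTorch3D's. If you move camera-space geometry between the two, the sketch below shows the conversion under the assumption that the Kinect frame is the Azure Kinect one (x right, y down, z forward). PyTorch3D's view frame has x left, y up, z forward, so the two differ by a 180 degree rotation about the optical axis. The function name is hypothetical; check your camera.json before relying on it.

```python
def kinect_to_pytorch3d(points):
    """Convert camera-space points from an x-right, y-down, z-forward frame (assumed
    Azure Kinect convention) to PyTorch3D's x-left, y-up, z-forward view frame.

    This is a 180 degree rotation about the z axis: x and y flip sign, z is kept.
    """
    return [(-x, -y, z) for x, y, z in points]


if __name__ == "__main__":
    print(kinect_to_pytorch3d([(0.1, -0.2, 1.5)]))
```

Since the rotation is its own inverse, the same function also converts from PyTorch3D back to the Kinect frame.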

Evaluation

evaluate_reconstructed_surfaces.py evaluates the surfaces reconstructed by Φ-SfT against the ground truth. It computes the Chamfer distance for real sequences and the angular and 3D errors for synthetic sequences, and it also produces mesh and depth visualisations of the reconstructed surfaces as in the paper. It is invoked with the commands below.
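For reference, brute-force versions of the three metrics are sketched below in plain Python. Conventions vary (squared vs. unsquared distances, sum vs. mean, normalisation by object size), and the exact definitions used by evaluate_reconstructed_surfaces.py are not stated here, so treat these as illustrations with hypothetical names rather than a reimplementation of the script.

```python
import math


def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance: average of the mean nearest-neighbour distances
    from A to B and from B to A (unsquared Euclidean, brute force O(|A| * |B|))."""
    def mean_nn(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return 0.5 * (mean_nn(points_a, points_b) + mean_nn(points_b, points_a))


def mean_angular_error(normals_pred, normals_gt):
    """Mean angle in degrees between corresponding (not necessarily unit) normals."""
    total = 0.0
    for n_p, n_g in zip(normals_pred, normals_gt):
        cos = sum(a * b for a, b in zip(n_p, n_g)) / (math.hypot(*n_p) * math.hypot(*n_g))
        total += math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding error
    return total / len(normals_gt)


def mean_3d_error(vertices_pred, vertices_gt):
    """Mean Euclidean distance between corresponding vertices."""
    return sum(math.dist(p, q) for p, q in zip(vertices_pred, vertices_gt)) / len(vertices_gt)


if __name__ == "__main__":
    print(chamfer_distance([(0, 0, 0)], [(1, 0, 0)]))
    print(mean_angular_error([(0, 0, 1)], [(1, 0, 0)]))
    print(mean_3d_error([(0, 0, 0)], [(3, 4, 0)]))
```

Chamfer distance needs no correspondences, which suits real captures; the angular and 3D errors assume vertex-to-vertex correspondence, which synthetic sequences provide.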

sequence_type=real

sequence_name=s3

converged_iteration=300

python evaluate_reconstructed_surfaces.py ${data_root}/${sequence_type} config/expt_${sequence_type}_${sequence_name}.ini $converged_iteration

Citation

If you use this code for your research, please cite:

@inproceedings{kair2022sft,

title={$\phi$-SfT: Shape-from-Template with a Physics-Based Deformation Model},

author={Navami Kairanda and Edith Tretschk and Mohamed Elgharib and Christian Theobalt and Vladislav Golyanik},

booktitle={Computer Vision and Pattern Recognition (CVPR)},

year={2022}

}

@inproceedings{liang2019differentiable,

title={Differentiable Cloth Simulation for Inverse Problems},

author={Junbang Liang and Ming C. Lin and Vladlen Koltun},

booktitle={Conference on Neural Information Processing Systems (NeurIPS)},

year={2019}

}

License

This software is provided freely for non-commercial use. The code builds on the differentiable version (https://github.com/williamljb/DifferentiableCloth) of the ARCSim cloth simulator (http://graphics.berkeley.edu/resources/ARCSim/). We thank the authors of both projects for releasing their code.

We release this code under the MIT license. You can find all licenses in the file LICENSE.