TensorflowJS EfficientNet

This repository contains a TensorFlow.js implementation of EfficientNet, an image classification model trained on ImageNet that can recognize 1000 different object classes.

EfficientNet is a lightweight convolutional neural network architecture that achieves state-of-the-art accuracy with an order of magnitude fewer parameters and FLOPS, on both ImageNet and five other commonly used transfer learning datasets.

The codebase is heavily inspired by the TensorFlow implementation.



Multilingual status

| locale | status | translated by 👑 |
|---|---|---|
| en | ✅ | |
| zh | ✅ | @luoye-fe |
| es | ✅ | @h383r |
| ar | ✅ | @lamamyf |
| ru | ✅ | @Abhighyaa |
| he | ✅ | @jhonDoe15 |
| fr | ✅ | @burmanp |
| other | ⏩ (need help, PR welcome) | |

Table of Contents

- Just Want to Play With The Model

- Installation

- API

- Examples

- Usage

- About EfficientNet Models

- Models

- Multilingual status

How Do I Run This Project Locally?

- Clone this repository.

- Just want to play?

  - From the project root, go to the playground directory and run `docker-compose up`

  - Navigate to http://localhost:8080

Usage:

EfficientNet has 8 different model checkpoints (B0 to B7). Each checkpoint has a different input layer resolution: the larger the input resolution, the higher the accuracy and the slower the inference.

For example, take these images: [sample image table]
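To make the resolution trade-off described above concrete, the sketch below lists the input resolution that the reference EfficientNet models use for each checkpoint and picks the largest checkpoint that fits a resolution budget. The `INPUT_RESOLUTION` table and the `largestCheckpointWithin` helper are illustrative names and are not part of the node-efficientnet API.

```javascript
// Input image side length (pixels) for each checkpoint, as published with
// the reference EfficientNet models. Illustrative table, not a library export.
const INPUT_RESOLUTION = {
  B0: 224, B1: 240, B2: 260, B3: 300,
  B4: 380, B5: 456, B6: 528, B7: 600,
};

// Hypothetical helper: return the name of the largest checkpoint whose input
// resolution does not exceed maxResolution, or null if none fits.
function largestCheckpointWithin(maxResolution) {
  let best = null;
  for (const [name, resolution] of Object.entries(INPUT_RESOLUTION)) {
    const fits = resolution <= maxResolution;
    if (fits && (best === null || resolution > INPUT_RESOLUTION[best])) {
      best = name;
    }
  }
  return best;
}

console.log(largestCheckpointWithin(300)); // B3
console.log(largestCheckpointWithin(200)); // null
```

A larger checkpoint is usually only worth its slower inference when the source images carry enough detail to benefit from the higher resolution.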


Installation

    npm i --save node-efficientnet

API

EfficientNetCheckPointFactory.create(checkPoint: EfficientNetCheckPoint, options?: EfficientNetCheckPointFactoryOptions): Promise<EfficientNetModel>

Example: to create an EfficientNet model, pass an EfficientNetCheckPoint value (available checkpoints: B0 to B7). Each checkpoint represents a different model.

    const {
      EfficientNetCheckPointFactory,
      EfficientNetCheckPoint,
    } = require("node-efficientnet");

    // await is only valid inside an async function in CommonJS modules.
    async function main() {
      const model = await EfficientNetCheckPointFactory.create(
        EfficientNetCheckPoint.B7
      );

      const path2image = "...";
      const topResults = 5;

      const result = await model.inference(path2image, {
        topK: topResults,
        locale: "zh",
      });
    }

    main().catch(console.error);

Of course, you can also use local model files to speed up loading.

You can download the model files from efficientnet-tensorflowjs-binaries. Keep the directory structure consistent, like this:

    local_model
    └── B0
        ├── group1-shard1of6.bin
        ├── group1-shard2of6.bin
        ├── group1-shard3of6.bin
        ├── group1-shard4of6.bin
        ├── group1-shard5of6.bin
        ├── group1-shard6of6.bin
        └── model.json

Then pass the root directory to the factory:

    const path = require("path");
    const {
      EfficientNetCheckPointFactory,
      EfficientNetCheckPoint,
    } = require("node-efficientnet");

    // await is only valid inside an async function in CommonJS modules.
    async function main() {
      // B0 matches the local_model/B0 directory shown above.
      const model = await EfficientNetCheckPointFactory.create(
        EfficientNetCheckPoint.B0,
        {
          localModelRootDirectory: path.join(__dirname, "local_model"),
        }
      );

      const path2image = "...";
      const topResults = 5;

      const result = await model.inference(path2image, {
        topK: topResults,
        locale: "zh",
      });
    }

    main().catch(console.error);
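Before pointing the factory at a local directory, it can help to confirm that the download is complete. The sketch below is one possible check, assuming the standard TensorFlow.js `model.json` layout, where a top-level `weightsManifest` array lists the shard file names. The `missingShards` helper is hypothetical and not part of node-efficientnet.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical helper: list the weight shard files that model.json references
// but that are missing from <root>/<checkpoint>/. Assumes the standard
// TensorFlow.js model.json layout with a top-level weightsManifest array.
function missingShards(localModelRootDirectory, checkpointName) {
  const modelDir = path.join(localModelRootDirectory, checkpointName);
  const modelJsonPath = path.join(modelDir, "model.json");
  if (!fs.existsSync(modelJsonPath)) {
    return ["model.json"];
  }
  const modelJson = JSON.parse(fs.readFileSync(modelJsonPath, "utf8"));
  const manifest = modelJson.weightsManifest || [];
  return manifest
    .flatMap((group) => group.paths || [])
    .filter((shard) => !fs.existsSync(path.join(modelDir, shard)));
}

// An empty array means every referenced file was found.
console.log(missingShards("local_model", "B0"));
```

Running the check once at startup gives a clearer error than a failed model load halfway through initialization.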

Examples

Download files from a remote source and run predictions with the model:

    const fs = require("fs");
    const nodeFetch = require("node-fetch"); // node-fetch v2 (CommonJS)
    const {
      EfficientNetCheckPointFactory,
      EfficientNetCheckPoint,
    } = require("node-efficientnet");

    const images = ["car.jpg", "panda.jpg"];
    const imageDir = "./samples";
    const imageDirRemoteUri =
      "https://raw.githubusercontent.com/ntedgi/node-EfficientNet/main/samples";

    if (!fs.existsSync(imageDir)) {
      fs.mkdirSync(imageDir);
    }

    // Download one sample image into imageDir.
    async function download(image) {
      const response = await nodeFetch.default(`${imageDirRemoteUri}/${image}`);
      const buffer = await response.buffer();
      await fs.promises.writeFile(`${imageDir}/${image}`, buffer);
    }

    EfficientNetCheckPointFactory.create(EfficientNetCheckPoint.B2)
      .then(async (model) => {
        // Process images one at a time so download and inference errors
        // reach the catch handler below.
        for (const image of images) {
          await download(image);
          const result = await model.inference(`${imageDir}/${image}`);
          console.log(result.result);
        }
      })
      .catch((e) => {
        console.error(e);
      });

Output:

    [
      { label: "sports car, sport car", precision: 88.02440940394301 },
      { label: "racer, race car, racing car", precision: 6.647441678387659 },
      { label: "car wheel", precision: 5.3281489176693295 },
    ]
    [
      {
        label: "giant panda, panda, panda bear, coon bear, Ailuropoda melanoleuca",
        precision: 83.60747593436018,
      },
      { label: "skunk, poleca", precision: 11.61300759424677 },
      { label: "hog, pig, grunter, squealer, Sus scrofa", precision: 4.779516471393051 },
    ]
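The predictions above are plain objects with a `label` and a `precision` field, so they are easy to post-process. The sketch below filters out low-scoring entries and shortens each label to its first synonym. The `summarize` helper is a hypothetical illustration, not a library function, and the sample data is copied from the car output above.

```javascript
// Sample predictions copied from the car output above (result.result).
const predictions = [
  { label: "sports car, sport car", precision: 88.02440940394301 },
  { label: "racer, race car, racing car", precision: 6.647441678387659 },
  { label: "car wheel", precision: 5.3281489176693295 },
];

// Hypothetical helper: keep predictions at or above minPrecision (in percent)
// and render each one as "<first synonym> (<precision>%)".
function summarize(items, minPrecision = 0) {
  return items
    .filter(({ precision }) => precision >= minPrecision)
    .map(
      ({ label, precision }) =>
        `${label.split(",")[0].trim()} (${precision.toFixed(1)}%)`
    );
}

console.log(summarize(predictions, 6));
// [ 'sports car (88.0%)', 'racer (6.6%)' ]
```

In both sample outputs the three `precision` values add up to roughly 100, which suggests the scores are normalized over the returned top results rather than over all 1000 classes.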

About EfficientNet Models

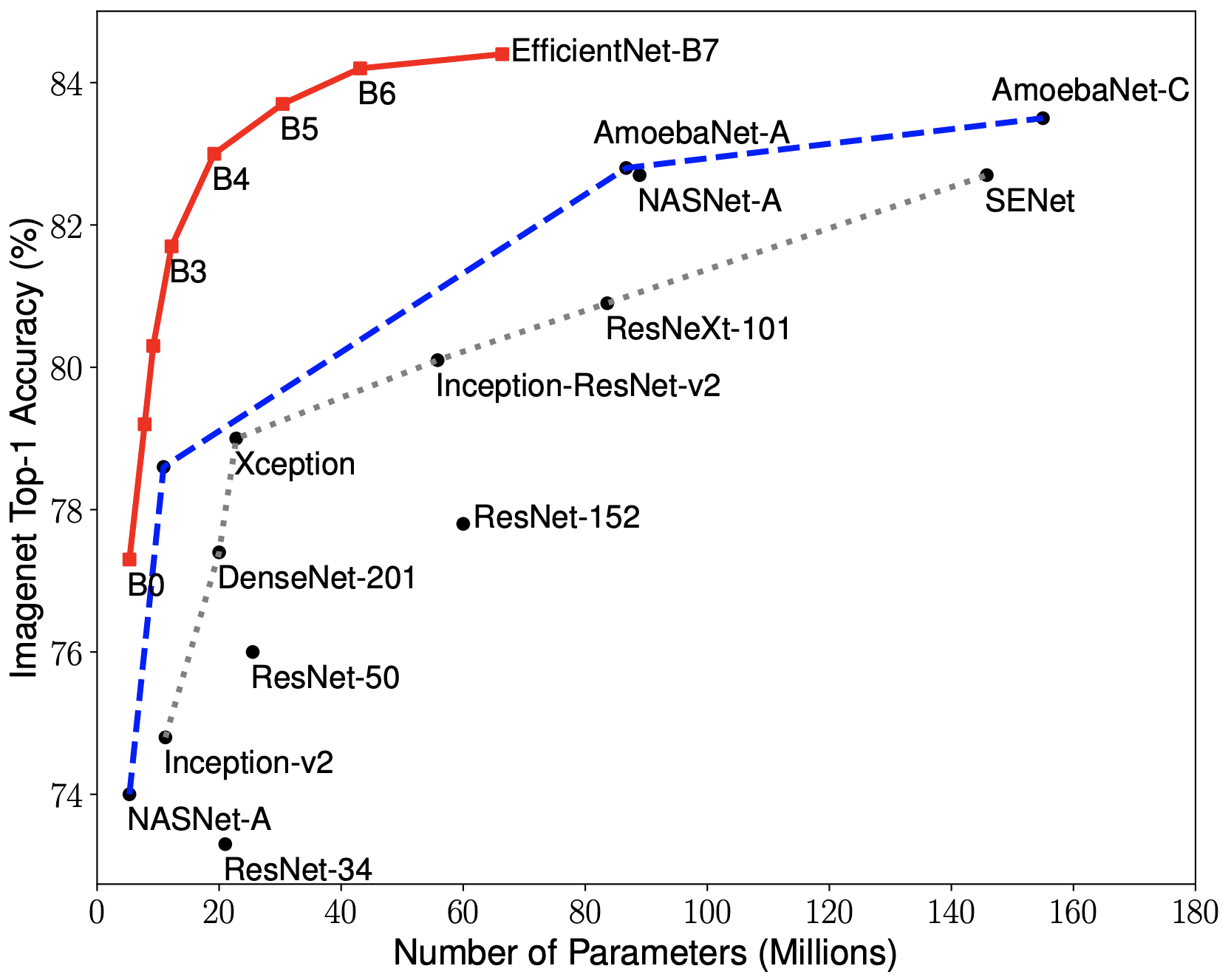

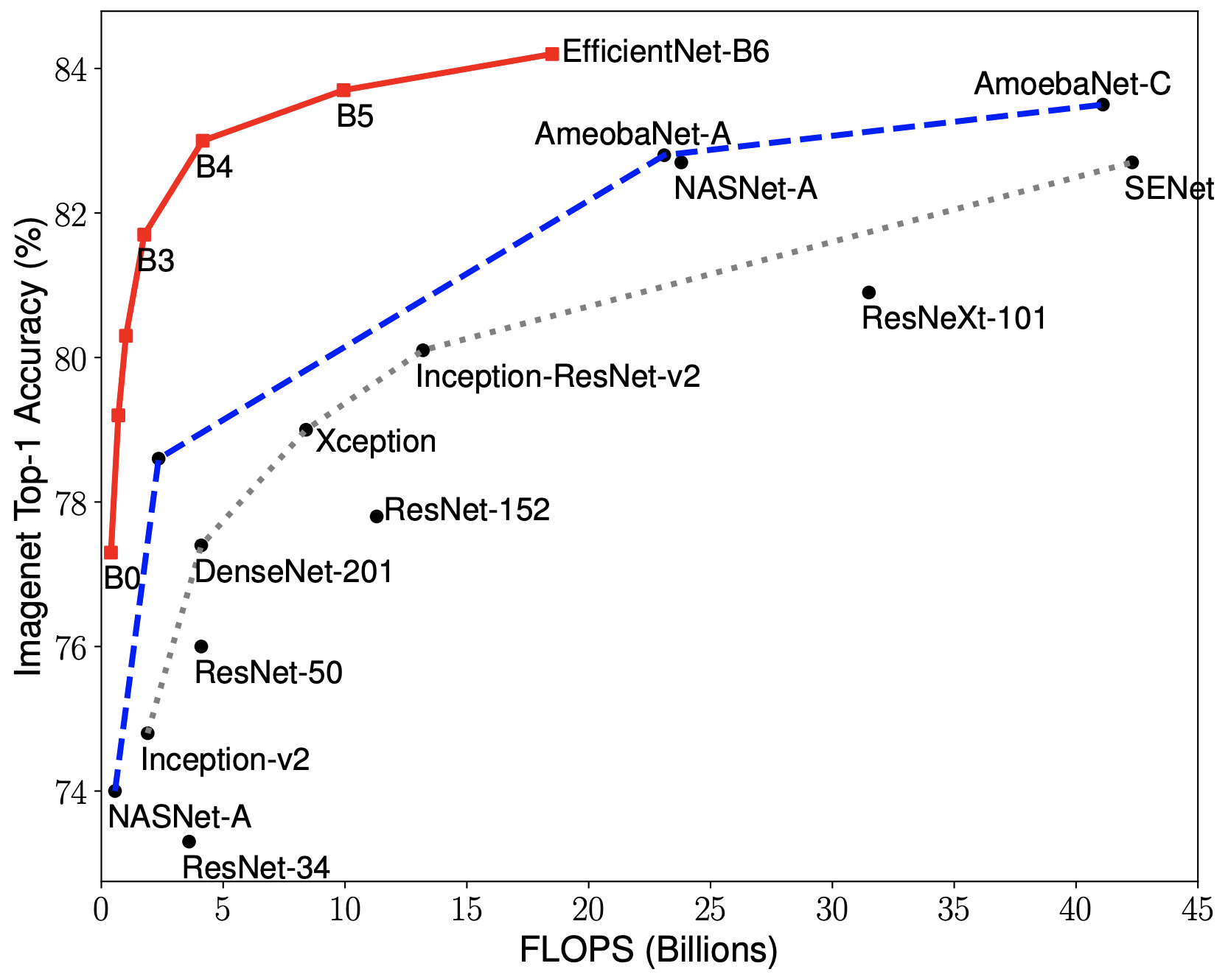

EfficientNets rely on AutoML and compound scaling to achieve superior performance without compromising resource efficiency. The AutoML Mobile framework has helped develop a mobile-size baseline network, EfficientNet-B0, which is then improved by the compound scaling method to obtain EfficientNet-B1 to B7.
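As a rough illustration of compound scaling, the sketch below uses the constants reported in the EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution), which are not stated in this README. The function names are illustrative, and the released B1 to B7 checkpoints use rounded coefficients that may differ from these exact powers.

```javascript
// Compound scaling constants reported in the EfficientNet paper:
// depth = ALPHA^phi, width = BETA^phi, resolution = GAMMA^phi.
const ALPHA = 1.2;
const BETA = 1.1;
const GAMMA = 1.15;

// Multipliers relative to the B0 baseline for a compound coefficient phi.
function compoundScale(phi) {
  return {
    depth: Math.pow(ALPHA, phi),
    width: Math.pow(BETA, phi),
    resolution: Math.pow(GAMMA, phi),
  };
}

// FLOPS grow roughly with depth * width^2 * resolution^2, so each unit of
// phi costs about ALPHA * BETA^2 * GAMMA^2 times more computation.
function flopsMultiplier(phi) {
  const { depth, width, resolution } = compoundScale(phi);
  return depth * width ** 2 * resolution ** 2;
}

console.log(flopsMultiplier(1).toFixed(2)); // 1.92
```

Because the per-step multiplier is close to 2, each increment of the compound coefficient roughly doubles the computation budget.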


EfficientNets achieve state-of-the-art accuracy on ImageNet with an order of magnitude better efficiency:

- In the high-accuracy regime, EfficientNet-B7 achieves state-of-the-art 84.4% top-1 / 97.1% top-5 accuracy on ImageNet with 66M parameters and 37B FLOPS. At the same time, the model is 8.4x smaller and 6.1x faster on CPU inference than the former leader, GPipe.

- In the middle-accuracy regime, EfficientNet-B1 is 7.6x smaller and 5.7x faster on CPU inference than ResNet-152, with similar ImageNet accuracy.

- Compared to the widely used ResNet-50, EfficientNet-B4 improves top-1 accuracy from 76.3% to 82.6% (+6.3%) under similar FLOPS constraints.


Models

The performance of each model variant using the pre-trained weights converted from checkpoints provided by the authors is as follows:

| Architecture | @top1* Imagenet | @top1* Noisy-Student |

|---|---|---|

| EfficientNetB0 | 0.772 | 0.788 |

| EfficientNetB1 | 0.791 | 0.815 |

| EfficientNetB2 | 0.802 | 0.824 |

| EfficientNetB3 | 0.816 | 0.841 |

| EfficientNetB4 | 0.830 | 0.853 |

| EfficientNetB5 | 0.837 | 0.861 |

| EfficientNetB6 | 0.841 | 0.864 |

| EfficientNetB7 | 0.844 | 0.869 |

* - topK accuracy score for converted models (imagenet val set)
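One way to use the table is to pick the smallest architecture that reaches a target accuracy. The sketch below copies the table values into an array. The `TOP1` constant and the `smallestModelReaching` helper are hypothetical names introduced for illustration.

```javascript
// Top-1 accuracy per architecture, copied from the table above.
const TOP1 = [
  { name: "EfficientNetB0", imagenet: 0.772, noisyStudent: 0.788 },
  { name: "EfficientNetB1", imagenet: 0.791, noisyStudent: 0.815 },
  { name: "EfficientNetB2", imagenet: 0.802, noisyStudent: 0.824 },
  { name: "EfficientNetB3", imagenet: 0.816, noisyStudent: 0.841 },
  { name: "EfficientNetB4", imagenet: 0.830, noisyStudent: 0.853 },
  { name: "EfficientNetB5", imagenet: 0.837, noisyStudent: 0.861 },
  { name: "EfficientNetB6", imagenet: 0.841, noisyStudent: 0.864 },
  { name: "EfficientNetB7", imagenet: 0.844, noisyStudent: 0.869 },
];

// Hypothetical helper: first (smallest) architecture whose accuracy in the
// chosen column reaches target, or undefined if none does.
function smallestModelReaching(target, column = "imagenet") {
  return TOP1.find((row) => row[column] >= target);
}

console.log(smallestModelReaching(0.83).name); // EfficientNetB4
console.log(smallestModelReaching(0.83, "noisyStudent").name); // EfficientNetB3
```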

if (this.repo.isAwesome || this.repo.isHelpful) {

Star(this.repo);

}