SL-animals-DVS training with STBP (improved)

This repository contains an STBP (Spatio-Temporal Backpropagation) implementation for the SL-Animals-DVS dataset using PyTorch. The first results reported in this repository were an initial attempt to reproduce the published results. The original implementation was then improved by optimizing the input data and the spiking neural network to enhance gesture recognition performance. Details of the techniques applied can be found in the conference paper published at LASCAS 2024 (see References).

A BRIEF INTRODUCTION:

STBP is an offline training method that trains a Spiking Neural Network (SNN) directly, extending the classic backpropagation algorithm to the time domain so that training occurs in both space and time. This makes it a suitable method for training SNNs, which are, in short, biologically plausible networks.

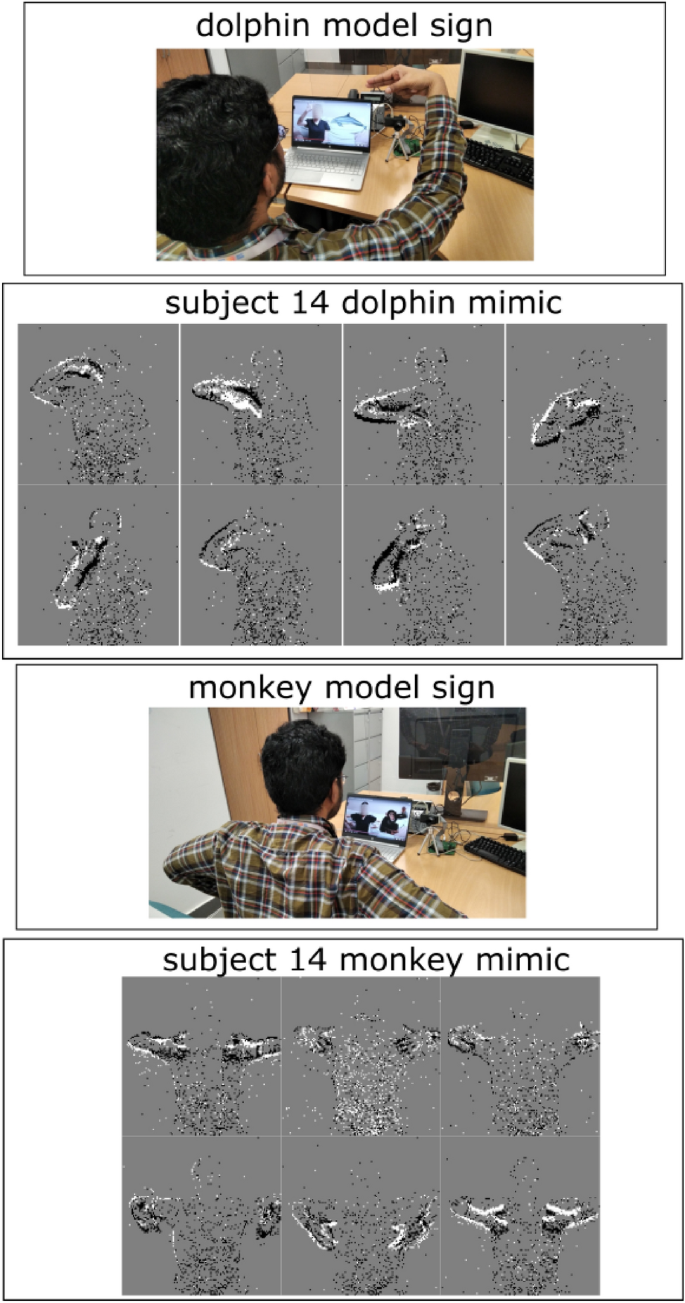
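As a rough illustration of the idea, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron over discrete time steps and defines a rectangular surrogate derivative, the kind of approximation STBP uses to pass gradients through the non-differentiable spike. The function names and the values of `decay`, `v_th` and `width` are assumptions made for this example; they are not taken from layers.py.

```python
def lif_forward(inputs, decay=0.5, v_th=1.0):
    """Simulate one LIF neuron; return (membrane trace, spike train)."""
    mem, spike = 0.0, 0
    mems, spikes = [], []
    for x in inputs:
        # Leak the previous potential, reset it if the neuron just fired,
        # then integrate the new input.
        mem = decay * mem * (1 - spike) + x
        spike = 1 if mem >= v_th else 0
        mems.append(mem)
        spikes.append(spike)
    return mems, spikes


def surrogate_grad(mem, v_th=1.0, width=1.0):
    """Rectangular stand-in for d(spike)/d(mem), nonzero only near v_th."""
    return 1.0 / width if abs(mem - v_th) < width / 2 else 0.0


# Constant input drives the potential up until the neuron fires and resets.
mems, spikes = lif_forward([0.6, 0.6, 0.6, 0.0, 0.6])
print(spikes)
```

During training, the backward pass would multiply incoming gradients by `surrogate_grad(mem)` at every time step, which is what lets the error flow through both layers (space) and time steps (time).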

The SL-Animals-DVS is a dataset of sign language (SL) gestures performed by different people representing animals, recorded with a Dynamic Vision Sensor (DVS).

The results reported in the SL-animals paper are split in two: results with the full dataset and results with a reduced dataset that excludes group S3. The results achieved with the implementation in this repository exceed the published results on the full dataset by about 9 percentage points and are equivalent on the reduced dataset. Considering that no code is available to reproduce the published results, it's quite an achievement.

The implementation published in this repository is the first publicly available STBP implementation on the SL-animals dataset (and the only one as of May 2023, as far as I know). The results are summarized below:

| | Full Dataset | Reduced Dataset |
|---|---|---|
| Reported Results | 56.20 ± 1.52% | 71.45 ± 1.74% |
| This Implementation | 64.97 ± 4.81% | 71.47 ± 1.54% |
| Optimized Version | 75.78 ± 2.73% | N/A |

Requirements:

The list below may not reflect the true minimum versions, but the code is known to run with the following:

- Python 3.0+

- PyTorch 1.11+

- CUDA 11.3+

- python libraries: os, numpy, matplotlib, pandas, sklearn, datetime, tonic, tensorboardX
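A quick way to see which of these libraries are missing from the current environment is sketched below. It is a hypothetical helper that is not part of the repository, and it only checks that each package can be found, not its version. Note that `torch` is the import name of PyTorch and `sklearn` is provided by the scikit-learn package; `os` and `datetime` ship with Python and need no installation.

```python
import importlib.util

# Import names of the third-party libraries listed above.
REQUIRED = ["torch", "numpy", "matplotlib", "pandas",
            "sklearn", "tonic", "tensorboardX"]


def missing_packages(names=REQUIRED):
    """Return the subset of `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
print("Missing packages:", ", ".join(missing) if missing else "none")
```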

README FIRST

This package contains the necessary python files to train a Spiking Neural Network with the STBP method on the Sign Language Animals DVS dataset.

IMPLEMENTATION

Package Contents:

- dataset.py

- layers.py

- model.py

- network.yaml

- sl_animals_stbp.py

- slice_data.py

- stbp_tools.py

- train_test_only.py

The SL-Animals-DVS dataset implementation is in dataset.py; it is essentially a PyTorch Dataset object. The Tonic library was used to read and process the DVS recordings.

A script, slice_data.py, slices the SL-Animals-DVS recordings into actual samples for training and saves the slices to disk. This is necessary because the raw dataset, as downloaded, contains only 59 files (DVS recordings) rather than 1121 samples. Each recording contains one individual performing the 19 gestures in sequence, so these 19 slices must be cut from each recording before the dataset can be used.
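The slicing step can be pictured with the simplified sketch below. It assumes events are `(t, x, y, p)` tuples sorted by timestamp and that each tag is a `(label, t_start, t_end)` tuple. Both layouts are assumptions made for illustration; the actual slice_data.py works on the recordings read by Tonic and on the tag files, whose formats are not shown here.

```python
from bisect import bisect_left


def slice_recording(events, tags):
    """Cut one recording into labelled samples.

    events: list of (t, x, y, p) tuples sorted by timestamp t.
    tags:   list of (label, t_start, t_end) tuples, one per gesture.
    """
    timestamps = [ev[0] for ev in events]
    samples = []
    for label, t_start, t_end in tags:
        lo = bisect_left(timestamps, t_start)  # first event with t >= t_start
        hi = bisect_left(timestamps, t_end)    # first event with t >= t_end
        samples.append((label, events[lo:hi]))
    return samples


# Toy recording: one event every 10 time units, two tagged gestures.
events = [(t, 0, 0, 1) for t in range(0, 100, 10)]
tags = [("cat", 0, 30), ("dog", 50, 90)]
samples = slice_recording(events, tags)

# 59 recordings with 19 gestures each give the full set of samples.
n_samples = 59 * 19
print(n_samples)
```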

The core of the STBP method implementation is in layers.py: the base code is from thiswinex/STBP-simple, to which I added a few fixes, changes and adaptations, inspired also by this other STBP implementation.

The Spiking Neural Network model is in model.py (SLANIMALSNet), and reproduces the architecture described in the SL-animals paper. The main training tools and functions used in the package are in stbp_tools.py. The customizable network and simulation parameters are in network.yaml; this is the place to edit parameters like 'batch size', 'data path', 'seed' and many others.

A new optional feature allows the use of random sample crops for training instead of the fixed crops starting at the beginning of the sample, as in the original paper implementation. This allows further exploration of the available data in the dataset, and that's how I got the best results in the table above. Check my published paper for implementation details.
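The difference between the two cropping modes can be sketched as follows. The function name, its arguments and the example lengths are hypothetical and only illustrate the idea; the actual option and its parameters live in the repository code and in network.yaml.

```python
import random


def crop_start(sample_len, crop_len, random_crop, rng=random):
    """Return the start time of a crop of length crop_len inside a sample."""
    if crop_len >= sample_len:
        return 0  # sample is shorter than the crop: use it from the start
    if random_crop:
        # Any start that keeps the whole crop inside the sample is valid.
        return rng.randint(0, sample_len - crop_len)
    return 0  # fixed crop: always start at the beginning of the sample


rng = random.Random(0)  # seeded generator so the example is repeatable
fixed = crop_start(4000, 1500, random_crop=False)
rand = crop_start(4000, 1500, random_crop=True, rng=rng)
print(fixed, rand)
```

With random crops, the same sample can contribute a different window in each epoch, so more of the recorded gesture is seen during training.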

The main program is in sl_animals_stbp.py, which follows the correct experimental procedure for training a network: cross validation after dividing the dataset into train, validation and test sets. A simpler version of the main program is in train_test_only.py, which is essentially the same except that it divides the dataset only into train and test sets, in an effort to replicate the published results. Apparently, the benchmark results were reported with this simpler dataset split.
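The two split strategies can be illustrated with the sketch below. The number of folds, the validation fraction and the function names are assumptions for this example; the values and the exact splitting procedure used in the repository are not stated here.

```python
import random


def kfold_splits(items, k=4, val_fraction=0.1, seed=0):
    """Yield (train, val, test) lists for each of k folds."""
    items = list(items)
    random.Random(seed).shuffle(items)
    folds = [items[i::k] for i in range(k)]  # k nearly equal folds
    for i, test in enumerate(folds):
        # Everything outside the test fold is shared by train and validation.
        rest = [x for j, fold in enumerate(folds) if j != i for x in fold]
        n_val = max(1, int(len(rest) * val_fraction))
        yield rest[n_val:], rest[:n_val], test


def train_test_splits(items, k=4, seed=0):
    """Simpler variant: no validation set, only (train, test) per fold."""
    for train, val, test in kfold_splits(items, k, 0.0, seed):
        yield train + val, test


# Split the indices of the 1121 samples.
splits = list(kfold_splits(range(1121)))
simple = list(train_test_splits(range(1121)))
```

In the first variant the validation set can be used for model selection while the test fold stays untouched; the second variant trains on everything outside the test fold.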

Use

- Clone this repository:

      git clone https://github.com/ronichester/SL-animals-DVS-stbp

- Download the dataset in this link;

- Save the DVS recordings in the data/recordings folder and the file tags in the data/tags folder;

- Run slice_data.py to slice the 59 raw recordings into 1121 samples.

      python slice_data.py

- Edit the custom parameters according to your preferences. The default parameter settings are functional and were tailored according to the information provided in the relevant papers, the reference codes used as a basis, and mostly by trial and error (lots of it!). You are encouraged to edit the parameters in network.yaml; please let me know if you get better results.

- Run sl_animals_stbp.py (or train_test_only.py) to start the SNN training:

      python sl_animals_stbp.py

  or

      python train_test_only.py

- The Tensorboard logs will be saved in src/logs and the network weights will be saved in src/weights. To visualize the logs with tensorboard:

  - open a terminal (I use Anaconda Prompt), go to the src directory and type:

        tensorboard --logdir=logs

  - open your browser and type in the address bar http://localhost:6006/ or any other address shown in the terminal screen.

References

- C. R. Schechter and J. G. R. C. Gomes, "Enhancing Gesture Recognition Performance Using Optimized Event-Based Data Sample Lengths and Crops", 2024 IEEE 15th Latin America Symposium on Circuits and Systems (LASCAS), Punta del Este, Uruguay, pp. 1-5, (2024).

- Vasudevan, A., Negri, P., Di Ielsi, C. et al. "SL-Animals-DVS: event-driven sign language animals dataset". Pattern Analysis and Applications 25, 505–520 (2021).

- Yujie Wu, Lei Deng, Guoqi Li, Jun Zhu and Luping Shi: "Spatio-Temporal Backpropagation for Training High-Performance Spiking Neural Networks". Frontiers in Neuroscience 12:331 (2018).

- The original dataset can be downloaded here

- Other basic STBP implementations that served as a base for this project: thiswinex/STBP-simple and yjwu17/STBP-for-training-SpikingNN

Copyright

Copyright 2023 Schechter Roni. This software is free to use, copy, modify and distribute for personal, academic, or research use. Its terms are described under the GNU General Public License v3.0.