Wolf

Trade foreign exchange with ease.

Introduction

Wolf Trading Platform is an interactive platform that supports real-time financial data visualization and historical data lookup. It executes simple trading rules in real time and is simple to integrate with brokerage services. It is currently not deployed publicly. Here and here are screencasts of Wolf in operation.

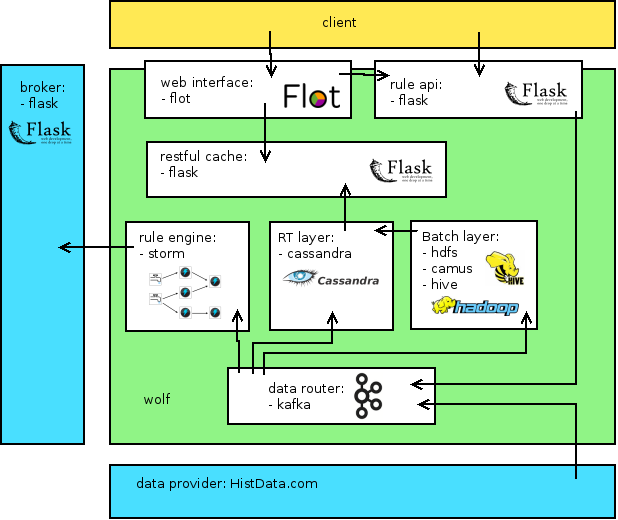

Below is an overview of the architecture of Wolf:

Wolf uses the following modules:

- Data Provider (bottom box on the diagram above) is responsible for feeding real-time Forex data to the system. To do that free of charge, it uses data aggregated by Hist Data every month. The data feed is therefore one month old, e.g., a tick that happened in June at exactly '2014-06-03 15:32:21.451 EST' is fed to the system in July at exactly '2014-07-03 15:32:21.451 EST' (1 millisecond resolution). This approach helps generate traffic similar to what expensive data providers would deliver. The downside, apart from the data being one month old, is that a regular weekday in July might have been a weekend in June, which causes an outage in the feed.

- Rule Submission (top right box on the diagram above) is responsible for accepting user-defined orders from investors. Orders are expressed as IF THEN statements, e.g. IF price of X is less than Y THEN buy X. Rules are delivered to the Rule Engine and executed if the condition specified in the order is met. All rules enter the system via this API. The Web Interface calls this API when a user clicks to submit a rule.

- Data Router (the second box from the bottom on the diagram above) is responsible for collecting events generated by producers ("ticks" from Data Provider and orders from Rule Submission) and delivering them to the appropriate consumers. It is built on top of the Kafka distributed queue.

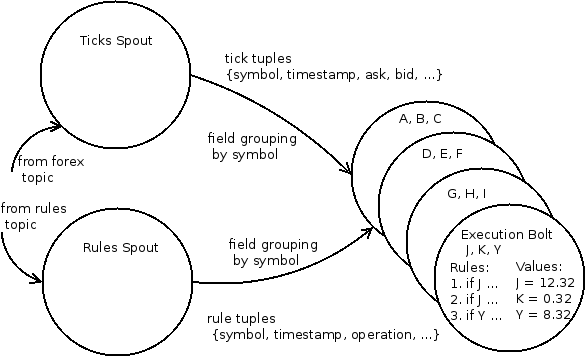
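The one-month replay described for Data Provider can be sketched in a few lines of Python. This is only an illustration, not the project's code: the function names `shift_months` and `source_day_is_weekend` are hypothetical, clamping the day when the target month is shorter is an assumption, and the Saturday/Sunday check is a simplification of real Forex trading hours.

```python
import calendar
from datetime import datetime


def shift_months(ts: datetime, months: int) -> datetime:
    """Move ts by whole calendar months, keeping the time of day.

    Assumption: if the target month is shorter, the day is clamped to
    its last day (e.g. May 31 -> June 30).
    """
    month_index = ts.year * 12 + (ts.month - 1) + months
    year, month_zero_based = divmod(month_index, 12)
    month = month_zero_based + 1
    last_day = calendar.monthrange(year, month)[1]
    return ts.replace(year=year, month=month, day=min(ts.day, last_day))


def source_day_is_weekend(replay_ts: datetime) -> bool:
    """True if the recorded day one month earlier was a Saturday or Sunday.

    Simplification: real Forex sessions do not follow calendar days exactly.
    """
    return shift_months(replay_ts, -1).weekday() >= 5


# The example from the text: a June tick is replayed one month later.
recorded = datetime(2014, 6, 3, 15, 32, 21, 451000)
replayed = shift_months(recorded, 1)
```

With this sketch, `source_day_is_weekend(datetime(2014, 7, 7, 12, 0))` is true: 7 July 2014 was a Monday, but its source day, 7 June 2014, was a Saturday, which is the kind of feed outage mentioned above.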

- Rule Engine (the second left-most box on the diagram above) is one of the consumers of events provided by Data Router. It matches rules against the current state of the market and executes trades when the conditions specified in the rules are met. It is built on top of the Storm event processor. The design of rule.engine is shown below:

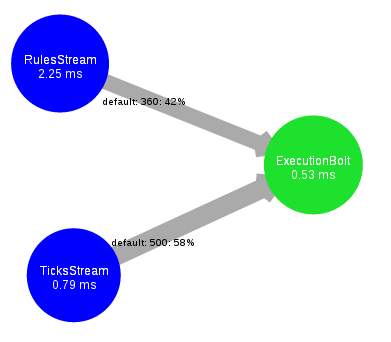

There are two spouts: one provides ticks, the other provides rules. It is a simple design that matches rules with ticks by the "symbol" field, i.e. it uses Storm fields grouping. The execution bolt stores only part of the latest market state, i.e. each task "knows" only about the state from its own input ticks. Below is a run-time visualization of the topology running on a Storm cluster:
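Independently of Storm, the symbol-keyed matching described in this section can be illustrated with a plain Python sketch. Everything here is hypothetical: the `Rule` shape, the `ExecutionTask` class, and the `task_for` routing function are stand-ins, and two behaviours are assumptions rather than facts about rule.engine: a rule fires at most once, and a newly submitted rule is checked against the last known price right away.

```python
import zlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF price of `symbol` is less than `threshold` THEN `action`."""
    symbol: str
    threshold: float
    action: str  # e.g. "buy"


class ExecutionTask:
    """Stands in for one task of the execution bolt (partial market state)."""

    def __init__(self):
        self.last_price = {}              # symbol -> latest price seen by this task
        self.pending = defaultdict(list)  # symbol -> rules not yet triggered
        self.executed = []                # (rule, price) pairs that fired

    def on_rule(self, rule):
        self.pending[rule.symbol].append(rule)
        self._evaluate(rule.symbol)

    def on_tick(self, symbol, price):
        self.last_price[symbol] = price
        self._evaluate(symbol)

    def _evaluate(self, symbol):
        price = self.last_price.get(symbol)
        if price is None:
            return  # no tick for this symbol has reached this task yet
        still_pending = []
        for rule in self.pending[symbol]:
            if price < rule.threshold:
                self.executed.append((rule, price))
            else:
                still_pending.append(rule)
        self.pending[symbol] = still_pending


def task_for(symbol, num_tasks):
    """Route by key so rules and ticks for one symbol reach the same task."""
    return zlib.crc32(symbol.encode("utf-8")) % num_tasks
```

Because both streams are routed with the same key, a task never needs prices for symbols it does not own, which is why each task can hold only a slice of the market state.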

- Data Aggregator - RT (the "Cassandra" box on the diagram above) is responsible for aggregating the latest ticks in real time. It is built on top of the Cassandra database. Ticks are retained for only 3 hours. This module takes advantage of dynamic column families and TTL-tagged inserts, a mechanism for storing temporal time-series data in Cassandra.

- Data Aggregator - Batch (the "HDFS" box on the diagram above) is responsible for storing all the incoming events in the system, aggregating a wide range of ticks in batch, averaging them, and serving aggregated views to Data Aggregator - RT. It is built on top of HDFS, Camus, and Hive. Ticks are stored in HDFS every 10 minutes by the Camus data collector. Camus creates a set of compressed files and groups them by hour; each line in such a file is one serialized JSON object representing a tick. A Hive table is defined over the directory that contains the files from the last hour. The files are decompressed on the fly, the JSON objects are flattened, and the average prices within every minute are calculated and sent to Data Aggregator - RT.

- Cache - RT ("restful cache" box on the diagram above) separates clients from direct queries to Data Aggregator - RT. It uses an in-memory cache to serve requests in real time. Cache - Batch works exactly like Cache - RT but for the other types of queries. The distinction has been made between these modules because they are likely to be queried at different rates, e.g. Cache - RT is queried more frequently to give the user the best possible experience, whereas Cache - Batch is queried less frequently because it depends on the data from Data Aggregator - Batch, which is served only every 10 minutes or so.

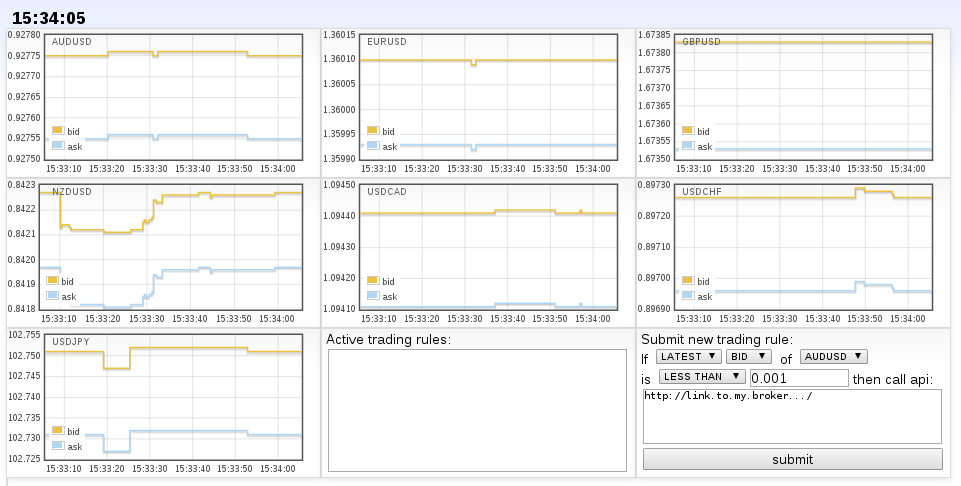
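The reason for keeping two caches with different refresh rates can be shown with a small in-memory sketch. It is not the code of the restful cache modules: `TimedCache` and the two constants are hypothetical names, the 1-second freshness for the real-time cache is an assumed value, and only the 10-minute figure comes from the description above.

```python
import time


class TimedCache:
    """Serve values from memory and reload them once they are too old."""

    def __init__(self, loader, max_age_seconds, clock=time.monotonic):
        self._loader = loader          # function that queries the backing store
        self._max_age = max_age_seconds
        self._clock = clock            # injectable so the cache can be tested
        self._entries = {}             # key -> (loaded_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._max_age:
            return entry[1]            # still fresh: the backend is not touched
        value = self._loader(key)
        self._entries[key] = (now, value)
        return value


# Assumed freshness windows: real-time data goes stale quickly, while batch
# aggregates only change when a new batch arrives (about every 10 minutes).
RT_MAX_AGE_SECONDS = 1.0
BATCH_MAX_AGE_SECONDS = 600.0
```

A real-time cache would be built as `TimedCache(load_latest_ticks, RT_MAX_AGE_SECONDS)` and a batch cache as `TimedCache(load_minute_averages, BATCH_MAX_AGE_SECONDS)`, where both loader functions are placeholders for queries against the aggregators.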

- Web Interface is a front end that a regular investor can use to analyze the Forex market and order trades. It is built on top of the Flot JavaScript graphing library. All seven major Forex pairs are plotted in real time on the main page of the system:

A user can specify a trading rule in the bottom right corner of the system. Specifying a URL is the way to link the Wolf platform to a brokerage service. The user can also click on any of the plots to see the historical data visualization:

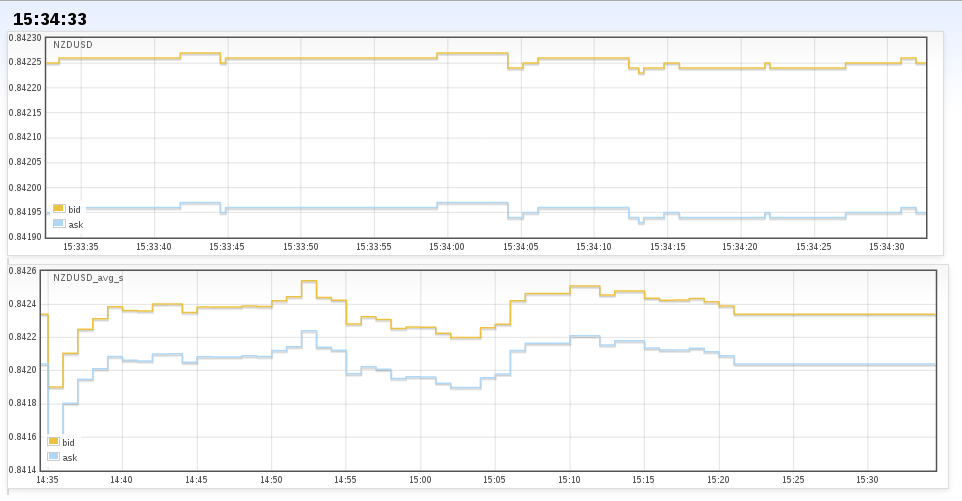

The top plot shows the latest minute of trading. The bottom plot shows the last hour, with values averaged per minute in the Data Aggregator - Batch module. Note that the last couple of minutes are missing because the MapReduce (MR) jobs executed by Hive are still running in the background.
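The per-minute averaging that feeds the bottom plot runs as a Hive query in Wolf, but the computation itself can be sketched in Python over the same kind of input: one JSON object per line. The field names `symbol`, `timestamp`, and `price`, as well as the timestamp format, are assumptions made for this example and may differ from the actual tick schema.

```python
import json
from collections import defaultdict
from datetime import datetime


def average_per_minute(lines):
    """Average tick prices per (symbol, minute) from JSON lines."""
    sums = defaultdict(lambda: [0.0, 0])  # (symbol, minute) -> [total, count]
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        tick = json.loads(line)
        ts = datetime.strptime(tick["timestamp"], "%Y-%m-%d %H:%M:%S.%f")
        minute = ts.replace(second=0, microsecond=0)  # truncate to the minute
        acc = sums[(tick["symbol"], minute)]
        acc[0] += float(tick["price"])
        acc[1] += 1
    return {key: total / count for key, (total, count) in sorted(sums.items())}


sample = [
    '{"symbol": "EUR/USD", "timestamp": "2014-07-03 15:32:21.451", "price": 1.3600}',
    '{"symbol": "EUR/USD", "timestamp": "2014-07-03 15:32:48.020", "price": 1.3610}',
    '{"symbol": "EUR/USD", "timestamp": "2014-07-03 15:33:02.100", "price": 1.3620}',
]
averages = average_per_minute(sample)
```

Here the two ticks at 15:32 are averaged into one value and the single tick at 15:33 forms its own bucket, so an hour of ticks collapses to at most 60 points per symbol.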

Getting started

I am currently working on the deployment scripts. All the modules should be easy to deploy using the following commands:

git clone https://github.com/slawekj/wolf.git

./wolf/<module name>/bin/install.sh

./wolf/<module name>/bin/run.sh

Modules should be deployed, possibly to different physical machines/clusters, in the following order:

- data.router (deployment scripts ready)

- data.provider (deployment scripts ready)

- rule.engine (deployment scripts ready)

- data.aggregator.rt (deployment scripts in preparation)

- data.aggregator.batch (deployment scripts in preparation)

- restful.cache.rt (deployment scripts in preparation)

- restful.cache.batch (deployment scripts in preparation)

- restful.rule.submission (deployment scripts in preparation)

- web.interface (deployment scripts in preparation)

Deployment scripts are tested on the Ubuntu 12.04 distribution, which is available here. You need sudo permissions and git installed:

sudo apt-get install git