Same problem here.

I am now trying different models from the model zoo; so far it has been impossible for me to convert a MobileNet V2 to TFLite with the TF2 Object Detection API.

Open SukyoungCho opened 4 years ago

You cannot directly convert the SavedModels from the detection zoo to TFLite, since it contains training parts of the graph that are not relevant to TFLite. Can you post the command you used to export the TFLite-friendly SavedModel using export_tflite_graph_tf2?
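As a rough sketch of the two-step flow described here, the helper below only assembles the two command lines: first the export script, then the converter pointed at the `saved_model` folder inside the export output directory. The flags are the ones that appear in the commands posted in this thread; the function names and the example paths are hypothetical.

```python
import posixpath
import shlex


def export_command(pipeline_config, checkpoint_dir, output_dir):
    """Step 1: argv for export_tflite_graph_tf2.py (run from models/research)."""
    return [
        "python", "object_detection/export_tflite_graph_tf2.py",
        "--pipeline_config_path", pipeline_config,
        "--trained_checkpoint_dir", checkpoint_dir,
        "--output_directory", output_dir,
    ]


def convert_command(output_dir, tflite_path):
    """Step 2: argv for tflite_convert, reading the SavedModel written by step 1."""
    saved_model_dir = posixpath.join(output_dir, "saved_model")
    return [
        "tflite_convert",
        f"--saved_model_dir={saved_model_dir}",
        f"--output_file={tflite_path}",
    ]


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(shlex.join(export_command("model/pipeline.config", "model/checkpoint", "exported")))
    print(shlex.join(convert_command("exported", "exported/model.tflite")))
```

The point of the split is that step 2 never sees the model zoo SavedModel directly; it only reads what step 1 wrote.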

Running "Step 2: Convert to TFLite", is the pain in the ass. I managed to convert the model generated in the step 1 into .tflite without any quantization following the given command, although I am not sure if it can be deployed on the mobile devices.

Why unsure? What is the size of the converted model? You can use a tool like Netron to check if the .tflite model is valid.
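Before opening the file in Netron, a quick size and header check can rule out an obviously empty conversion. This is a minimal sketch using only the standard library: it relies on `.tflite` files being FlatBuffers with the file identifier `TFL3` at bytes 4 to 8. The function name and the `min_bytes` threshold are assumptions for illustration, and passing this check does not prove the model is usable.

```python
import os
import sys

TFLITE_FILE_IDENTIFIER = b"TFL3"  # FlatBuffers file identifier of .tflite files


def quick_tflite_check(path, min_bytes=100_000):
    """Return basic facts about a .tflite file without loading TensorFlow.

    min_bytes is an arbitrary, hypothetical threshold: a detection model
    with real weights should be far larger than a few hundred bytes.
    """
    size = os.path.getsize(path)
    with open(path, "rb") as f:
        header = f.read(8)
    has_identifier = len(header) == 8 and header[4:8] == TFLITE_FILE_IDENTIFIER
    return {
        "size_bytes": size,
        "has_identifier": has_identifier,
        "plausible_size": size >= min_bytes,
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    print(quick_tflite_check(sys.argv[1]))
```

A file that carries the identifier but is only a few hundred bytes long most likely contains an empty graph rather than the detection model.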

For quantization, you need to implement representative dataset gen in such a way that it mimics how you would typically pass image tensors to TFLite models. It usually boils down to something like this:

    def representative_dataset_gen():
        for i in range(100):
            image = tf.io.read_file(os.path.join(data_dir, image_files[i % NUM_FILES]))
            image = tf.compat.v1.image.decode_jpeg(image)
            image = preprocess(image)
            yield [image]

For the preprocess function, look at this implementation.
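The snippet above uses `data_dir`, `image_files` and `NUM_FILES` without defining them. One possible way to fill them in, sketched with the standard library only, is shown below; the helper names and the extension filter are assumptions, and the decoding and preprocessing steps are left to the generator itself.

```python
import os


def list_image_files(data_dir, extensions=(".jpg", ".jpeg")):
    """Return the sorted image file names found directly inside data_dir."""
    return sorted(
        name for name in os.listdir(data_dir)
        if name.lower().endswith(extensions)
    )


def sample_paths(data_dir, num_samples=100):
    """Yield num_samples paths, cycling like image_files[i % NUM_FILES]."""
    image_files = list_image_files(data_dir)
    if not image_files:
        raise ValueError(f"no image files found in {data_dir!r}")
    num_files = len(image_files)
    for i in range(num_samples):
        yield os.path.join(data_dir, image_files[i % num_files])
```

Inside `representative_dataset_gen`, each yielded path would take the place of the `os.path.join(data_dir, image_files[i % NUM_FILES])` expression.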

@srjoglekar246 Thanks for your reply. I tried to export a TFLite-friendly SavedModel using the following command line. I am confused about how the "TFLite-friendly SavedModel" differs from the SavedModel in the Model Zoo, as they seem almost the same to me. In addition, although you say I cannot directly convert the SavedModels from the detection zoo, it was converted to TFLite somehow, although I'm not sure why.

    CUDA_VISIBLE_DEVICES=1 python object_detection/export_tflite_graph_tf2.py \
        --pipeline_config_path /root/ecomfort/tf2_model_zoo/ssd_mobilenet_v2_fpnlite_640x640_coco17_tpu-8/pipeline.config \
        --trained_checkpoint_dir /root/ecomfort/tf2_model_zoo/ssd_mobilenet_v2_fpnlite_640x640_coco17_tpu-8/checkpoint \
        --output_directory /root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_640x640_coco17_tpu-8

For quantization, I thought it could be any data as long as it is image tensors, and that's why I used MNIST data, which does not need to be downloaded. By the way, I think I saw somewhere that "representative_dataset_gen()" or TF2 takes images as numpy arrays, so could you let me know which is correct? I will try quantization following your suggestion and update it here. Thanks.

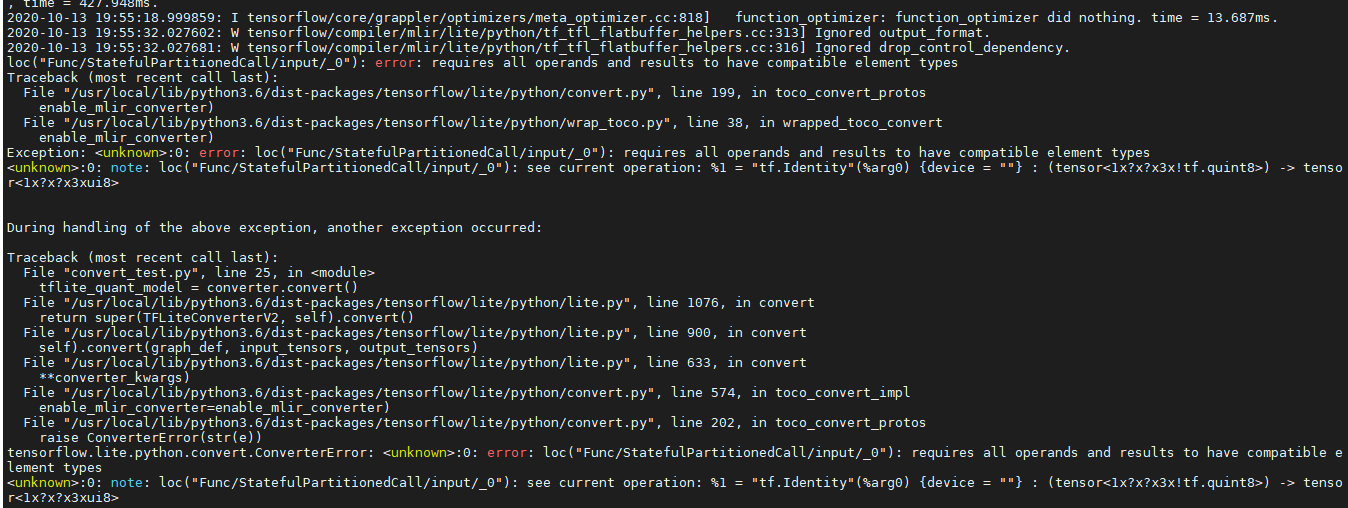

@srjoglekar246 Hi, I got this error while quantizing. Wonder if you are familiar with this kind of error.

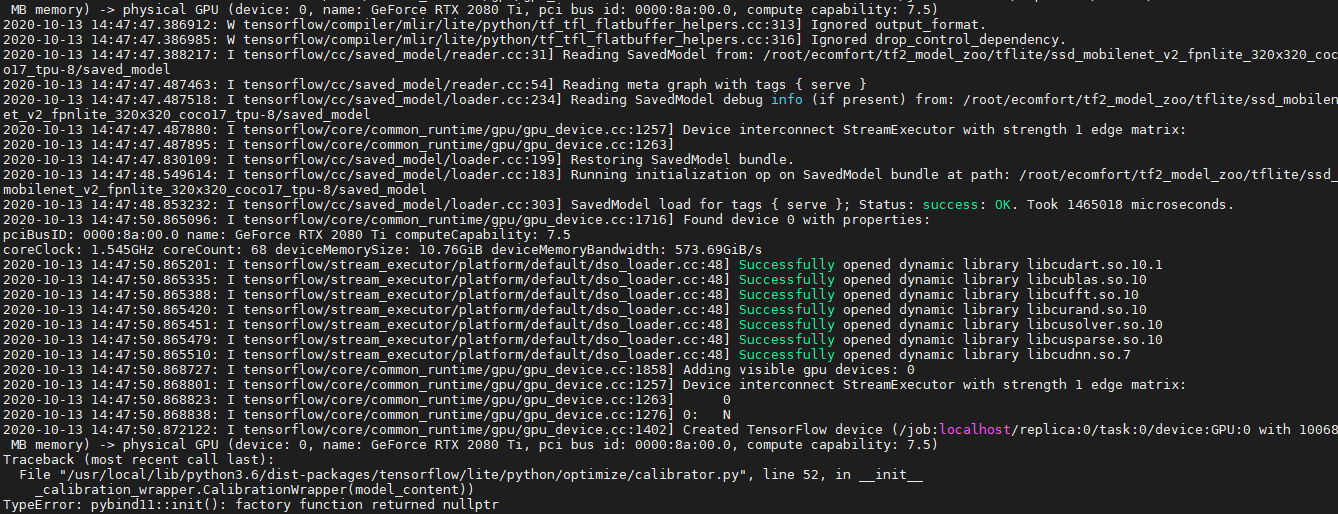

@srjoglekar246 Hi, I tried to train the model from a model zoo and convert it to .tflite and quantize it. However, there were 2 critical issues.

During the training, at certain point, the loss becomes NaN.

Converting and quantizing the trained model, it still shows ValueError: Failed to parse the model: pybind11::init(): factory function returned nullptr. error

Not sure if there is a bug with training. The ODAPI folks might have a better idea of any issues that occur before using export_tflite_graph_tf2.py.

Can you show your exact code for tflite conversion? Though the model should probably be trained well first.

@srjoglekar246 This is how I ran the step 1

    CUDA_VISIBLE_DEVICES=1 tflite_convert \
        --saved_model_dir=/root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/saved_model \
        --output_file=/root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/test.tflite

and this is how I ran the step 2

    CUDA_VISIBLE_DEVICES=1 python demo_convert.py

and this is demo_convert

    import tensorflow as tf

    # Convert the model
    converter = tf.lite.TFLiteConverter.from_saved_model("/root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/saved_model")
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    def representative_dataset_gen():
        for i in range(100):
            #dir path where .jpg (image) files are
            image = tf.io.read_file(os.path.join("/root/ecomfort/data/valid_data/total/", image_files[i % NUM_FILES]))
            image = tf.compat.v1.image.decode_jpeg(image)
            image = preprocess(image)
            yield [image]

    """
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
    x_train = x_train.reshape(60000, 28, 28, 1).astype('float32') / 255
    x_test = x_test.reshape(10000, 28,28, 1).astype('float32') / 255
    y_train = y_train.astype('float32')
    y_test = y_test.astype('float32')

    def create_represent_data(data):
        def data_gen():
            for i in data:
                yield [list([i])]
        return data_gen

    converter.representative_dataset = create_represent_data(x_train[:5000])
    """

    converter.representative_dataset = representative_dataset_gen
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8 # or tf.int8
    converter.inference_output_type = tf.uint8 # or tf.int8
    tflite_quant_model = converter.convert()

    # Save the model.
    with open('model.tflite', 'wb') as f:
        f.write(tflite_model)

@SukyoungCho How did you run export_tflite_graph_tf2.py? Note that the saved model directly from the model zoo will not convert to TFLite in a performant way (and won't quantize either), since it is not optimized for on-device inference.

In your demo_convert, how are you implementing preprocess?

@srjoglekar246 Sorry for the confusion. This is how I ran export_tflite_graph_tf2.py

    CUDA_VISIBLE_DEVICES=1 python object_detection/export_tflite_graph_tf2.py \
        --pipeline_config_path /root/ecomfort/tf2_model_zoo/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/pipeline.config \
        --trained_checkpoint_dir /root/ecomfort/tf2_model_zoo/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/checkpoint \
        --output_directory /root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8

Yes, I ran about 12000 steps of training and then tried converting it to TFLite and quantizing it.

For preprocessing, image = preprocess(image) is all I have, as I just copied and pasted the code you provided above. Should I modify it? Thanks.

@srjoglekar246 Should I implement preprocess within the script? like this?

    import tensorflow as tf

    # Convert the model
    converter = tf.lite.TFLiteConverter.from_saved_model("/root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/saved_model")
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    def preprocess(image,
                   height,
                   width,
                   central_fraction=0.875,
                   scope=None,
                   central_crop=True,
                   use_grayscale=False):
        with tf.name_scope(scope, 'eval_image', [image, height, width]):
            if image.dtype != tf.float32:
                image = tf.image.convert_image_dtype(image, dtype=tf.float32)
            if use_grayscale:
                image = tf.image.rgb_to_grayscale(image)
            # Crop the central region of the image with an area containing 87.5% of
            # the original image.
            if central_crop and central_fraction:
                image = tf.image.central_crop(image, central_fraction=central_fraction)
            if height and width:
                # Resize the image to the specified height and width.
                image = tf.expand_dims(image, 0)
                image = tf.image.resize_bilinear(image, [height, width],
                                                 align_corners=False)
                image = tf.squeeze(image, [0])
            image = tf.subtract(image, 0.5)
            image = tf.multiply(image, 2.0)
            return image

    def representative_dataset_gen():
        for i in range(100):
            #dir path where .jpg (image) files are
            image = tf.io.read_file(os.path.join("/root/ecomfort/data/valid_data/total/", image_files[i % NUM_FILES]))
            image = tf.compat.v1.image.decode_jpeg(image)
            image = preprocess(image)
            yield [image]

    converter.representative_dataset = representative_dataset_gen
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8 # or tf.int8
    converter.inference_output_type = tf.uint8 # or tf.int8
    tflite_quant_model = converter.convert()

    # Save the model.
    with open('model.tflite', 'wb') as f:
        f.write(tflite_model)

@SukyoungCho Yes.
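One detail worth checking in the script as posted: `preprocess` declares `height` and `width` as required arguments, but the generator calls `preprocess(image)` with the image alone, which Python rejects before any TensorFlow code runs. The sketch below reproduces just that call pattern with a stand-in function (a plain list of floats instead of a tensor, and only the final rescale to [-1, 1]); the 320x320 size is an assumption taken from the model name. In TF 2.x the `tf.name_scope(scope, ...)` and `tf.image.resize_bilinear` calls may also need their `tf.compat.v1` equivalents.

```python
from functools import partial


def preprocess(image, height, width, central_fraction=0.875):
    """Stand-in with the same required arguments as the posted function.

    `image` is a flat list of floats in [0, 1]; cropping and resizing are
    omitted, only the (x - 0.5) * 2 rescale is reproduced.
    """
    return [(pixel - 0.5) * 2.0 for pixel in image]


# Calling it the way the generator does fails immediately.
try:
    preprocess([0.0, 0.5, 1.0])
except TypeError as err:
    print("call without height/width fails:", err)

# Binding the target size once keeps the generator body unchanged.
preprocess_320 = partial(preprocess, height=320, width=320)
print(preprocess_320([0.0, 0.5, 1.0]))  # [-1.0, 0.0, 1.0]
```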

@srjoglekar246 When I try to quantize the model using the script above, it still gives me the error message below.

this is the exact script

    import tensorflow as tf

    # Convert the model
    converter = tf.lite.TFLiteConverter.from_saved_model("/root/ecomfort/tf2_model_zoo/tflite/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/saved_model")
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    def preprocess(image,
                   height,
                   width,
                   central_fraction=0.875,
                   scope=None,
                   central_crop=True,
                   use_grayscale=False):
        with tf.name_scope(scope, 'eval_image', [image, height, width]):
            if image.dtype != tf.float32:
                image = tf.image.convert_image_dtype(image, dtype=tf.float32)
            if use_grayscale:
                image = tf.image.rgb_to_grayscale(image)
            # Crop the central region of the image with an area containing 87.5% of
            # the original image.
            if central_crop and central_fraction:
                image = tf.image.central_crop(image, central_fraction=central_fraction)
            if height and width:
                # Resize the image to the specified height and width.
                image = tf.expand_dims(image, 0)
                image = tf.image.resize_bilinear(image, [height, width],
                                                 align_corners=False)
                image = tf.squeeze(image, [0])
            image = tf.subtract(image, 0.5)
            image = tf.multiply(image, 2.0)
            return image

    def representative_dataset_gen():
        for i in range(100):
            #dir path where .jpg (image) files are
            image = tf.io.read_file(os.path.join("/root/ecomfort/data/valid_data/total/", image_files[i % NUM_FILES]))
            image = tf.compat.v1.image.decode_jpeg(image)
            image = preprocess(image)
            yield [image]

    converter.representative_dataset = representative_dataset_gen
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8 # or tf.int8
    converter.inference_output_type = tf.uint8 # or tf.int8
    tflite_quant_model = converter.convert()

    # Save the model.
    with open('model.tflite', 'wb') as f:
        f.write(tflite_model)

This seems like an error with post-training quantization. Adding @jianlijianli who might be able to help.

@SukyoungCho Can you confirm if conversion to float works?

I remember it worked before. But, I will try it again and update it as soon as possible.

@srjoglekar246 Hi, when I try conversion to float32 or float16, the code does not show any error and produces a .tflite model file, but the result is suspicious. The model produced by step 1 was about 7 MB. However, the .tflite file from step 2 is only about 560 bytes, less than 1 KB. Here I attach the model resulting from step 1 (https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/running_on_mobile_tf2.md) and the result from step 2 (.tflite conversion to float32).

ssd_mobilenet_v2_fpnlite_320.zip

Step 1: Export TFLite inference graph

This step generates an intermediate SavedModel that can be used with the TFLite Converter via commandline or Python API.

To use the script:

    # From the tensorflow/models/research/ directory
    python object_detection/export_tflite_graph_tf2.py \
        --pipeline_config_path path/to/ssd_model/pipeline.config \
        --trained_checkpoint_dir path/to/ssd_model/checkpoint \
        --output_directory path/to/exported_model_directory

Hi! Try this solution (https://github.com/tensorflow/models/issues/9394#issuecomment-713143622). It solved the problem for me (and conversion to INT too).

Hi @mpa74, thank you so much for your help. I have tried the solution you offered and I was able to get .tflite file with seemingly legit size. However, I was not able to quantize it into uint8 format.
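For context on what a uint8 input or output means in practice: a quantized tensor stores integers that map back to real values through a scale and a zero point, `real = scale * (q - zero_point)`. The sketch below shows that arithmetic in plain Python. The scale and zero point used here are hypothetical values chosen to be exact in floating point; for a real model they would come from the `quantization` entry of the interpreter's input and output details.

```python
def quantize_uint8(value, scale, zero_point):
    """Map a real value to uint8 with q = round(value / scale) + zero_point."""
    q = round(value / scale) + zero_point
    return max(0, min(255, q))  # clamp to the uint8 range


def dequantize_uint8(q, scale, zero_point):
    """Map a uint8 value back to a real value."""
    return scale * (q - zero_point)


# Hypothetical parameters covering roughly [-1, 1].
SCALE = 1.0 / 128.0
ZERO_POINT = 128

print(quantize_uint8(-1.0, SCALE, ZERO_POINT))   # 0
print(quantize_uint8(0.5, SCALE, ZERO_POINT))    # 192
print(dequantize_uint8(192, SCALE, ZERO_POINT))  # 0.5
```

This is also why the representative dataset matters: the converter uses the value ranges it observes on those samples to pick the scale and zero point of each tensor.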

In addition, when I perform step 1 and step 2, I am getting lots of error messages, although the converted file is still produced. So I am not sure whether the model is converted properly. Could you let me know if you have seen the same messages? Thank you so much.

Error Message during step 1 (No Concrete functions found for untraced function)

W1022 17:38:15.026157 140288236451648 save.py:228] No concrete functions found for untraced function `projection_1_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.026237 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_fn` while saving. This function will not be callable after loading.

W1022 17:38:15.026307 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.026376 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.026456 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_fn` while saving. This function will not be callable after loading.

W1022 17:38:15.026524 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.026593 140288236451648 save.py:228] No concrete functions found for untraced function `nearest_neighbor_upsampling_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.032178 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_2_layer_call_fn` while saving. This function will not be callable after loading.

W1022 17:38:15.032264 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_2_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.032336 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_2_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.037694 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_1_layer_call_fn` while saving. This function will not be callable after loading.

W1022 17:38:15.037776 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_1_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

W1022 17:38:15.037863 140288236451648 save.py:228] No concrete functions found for untraced function `smoothing_1_layer_call_and_return_conditional_losses` while saving. This function will not be callable after loading.

INFO:tensorflow:Unsupported signature for serialization: (([(<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec47b8>, TensorSpec(shape=(None, 40, 40, 32), dtype=tf.float32, name='image_features/0/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec48d0>, TensorSpec(shape=(None, 20, 20, 96), dtype=tf.float32, name='image_features/1/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec45c0>, TensorSpec(shape=(None, 10, 10, 1280), dtype=tf.float32, name='image_features/2/1'))], False), {}).

I1022 17:38:18.807447 140288236451648 def_function.py:1170] Unsupported signature for serialization: (([(<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec47b8>, TensorSpec(shape=(None, 40, 40, 32), dtype=tf.float32, name='image_features/0/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec48d0>, TensorSpec(shape=(None, 20, 20, 96), dtype=tf.float32, name='image_features/1/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300ec45c0>, TensorSpec(shape=(None, 10, 10, 1280), dtype=tf.float32, name='image_features/2/1'))], False), {}).

INFO:tensorflow:Unsupported signature for serialization: (([(<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300f32cf8>, TensorSpec(shape=(None, 40, 40, 32), dtype=tf.float32, name='image_features/0/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300f32d30>, TensorSpec(shape=(None, 20, 20, 96), dtype=tf.float32, name='image_features/1/1')), (<tensorflow.python.framework.func_graph.UnknownArgument object at 0x7f9300f32eb8>, TensorSpec(shape=(None, 10, 10, 1280), dtype=tf.float32, name='image_features/2/1'))], True), {}).

Errors during the step 2

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

WARNING:absl:Could not find any concrete functions to restore for this SavedFunction object while loading. The function will not be callable.

2020-10-22 17:43:38.769464: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:316] Ignored output_format.

2020-10-22 17:43:38.769521: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:319] Ignored drop_control_dependency.

2020-10-22 17:43:38.769530: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:325] Ignored change_concat_input_ranges.

2020-10-22 17:43:38.770421: I tensorflow/cc/saved_model/reader.cc:32] Reading SavedModel from: ./saved_model

2020-10-22 17:43:38.838857: I tensorflow/cc/saved_model/reader.cc:55] Reading meta graph with tags { serve }

2020-10-22 17:43:38.838917: I tensorflow/cc/saved_model/reader.cc:93] Reading SavedModel debug info (if present) from: ./saved_model

2020-10-22 17:43:38.838986: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set

2020-10-22 17:43:38.839010: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1261] Device interconnect StreamExecutor with strength 1 edge matrix:

2020-10-22 17:43:38.839019: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1267]

2020-10-22 17:43:39.042039: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:196] None of the MLIR optimization passes are enabled (registered 0 passes)

2020-10-22 17:43:39.095908: I tensorflow/cc/saved_model/loader.cc:206] Restoring SavedModel bundle.

2020-10-22 17:43:39.154998: I tensorflow/core/platform/profile_utils/cpu_utils.cc:112] CPU Frequency: 3591830000 Hz

2020-10-22 17:43:39.651706: I tensorflow/cc/saved_model/loader.cc:190] Running initialization op on SavedModel bundle at path: ./saved_model

2020-10-22 17:43:39.884801: I tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: success: OK. Took 1114382 microseconds.

2020-10-22 17:43:40.914707: I tensorflow/compiler/mlir/tensorflow/utils/dump_mlir_util.cc:194] disabling MLIR crash reproducer, set env var `MLIR_CRASH_REPRODUCER_DIRECTORY` to enable.

2020-10-22 17:43:41.582975: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set

2020-10-22 17:43:41.585994: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 0 with properties:

pciBusID: 0000:0a:00.0 name: TITAN X (Pascal) computeCapability: 6.1

coreClock: 1.531GHz coreCount: 28 deviceMemorySize: 11.91GiB deviceMemoryBandwidth: 447.48GiB/s

2020-10-22 17:43:41.586061: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0

2020-10-22 17:43:41.586133: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcublas.so.11

2020-10-22 17:43:41.586188: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcublasLt.so.11

2020-10-22 17:43:41.586241: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcufft.so.10

2020-10-22 17:43:41.586310: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcurand.so.10

2020-10-22 17:43:41.586499: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcusolver.so.10

2020-10-22 17:43:41.586580: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcusparse.so.11

2020-10-22 17:43:41.586641: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudnn.so.8

2020-10-22 17:43:41.591077: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1862] Adding visible gpu devices: 0

2020-10-22 17:43:41.591123: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1261] Device interconnect StreamExecutor with strength 1 edge matrix:

2020-10-22 17:43:41.591139: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1267] 0

2020-10-22 17:43:41.591154: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1280] 0: N

2020-10-22 17:43:41.595432: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1406] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 11217 MB memory) -> physical GPU (device: 0, name: TITAN X (Pascal), pci bus id: 0000:0a:00.0, compute capability: 6.1)

Exception ignored in: <bound method Buckets.__del__ of <tensorflow.python.eager.monitoring.ExponentialBuckets object at 0x7f6e7542dc48>>

Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/monitoring.py", line 407, in __del__

AttributeError: 'NoneType' object has no attribute 'TFE_MonitoringDeleteBuckets'
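
When reading output like the above, it can help to separate real errors from warnings and informational lines. In these log formats the severity is a single letter (`I`, `W`, `E`, `F`) or a spelled-out prefix such as `WARNING:`. The sketch below counts lines per severity; the function names and regular expressions are assumptions written against the three line shapes seen in this thread, not a general log parser.

```python
import re
from collections import Counter

# Line shapes seen above:
#   W1022 17:38:15.026157 140288236451648 save.py:228] ...
#   2020-10-22 17:43:38.769464: W tensorflow/compiler/... ] ...
#   WARNING:absl:... / INFO:tensorflow:...
_PATTERNS = [
    re.compile(r"^(?P<sev>[IWEF])\d{4} \d{2}:\d{2}:\d{2}\.\d+"),
    re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+: (?P<sev>[IWEF]) "),
    re.compile(r"^(?P<name>INFO|WARNING|ERROR|FATAL):"),
]
_NAMES = {"I": "INFO", "W": "WARNING", "E": "ERROR", "F": "FATAL"}


def severity(line):
    """Return INFO/WARNING/ERROR/FATAL for a log line, or None if unrecognized."""
    for pattern in _PATTERNS:
        match = pattern.match(line)
        if match:
            groups = match.groupdict()
            return groups.get("name") or _NAMES[groups["sev"]]
    return None


def count_severities(lines):
    """Count recognized log lines per severity, ignoring everything else."""
    return Counter(s for s in map(severity, lines) if s)
```

Run over the output pasted above, every recognized line should fall under WARNING or INFO, which suggests these messages are noise rather than conversion failures; the traceback at the very end is not a log line and is not counted.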

tensorflow/core/common_runtime/gpu/gpu_device.cc:1280] 0: N 2020-10-22 17:43:41.595432: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1406] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 11217 MB memory) -> physical GPU (device: 0, name: TITAN X (Pascal), pci bus id: 0000:0a:00.0, compute capability: 6.1) Exception ignored in: <bound method Buckets.__del__ of <tensorflow.python.eager.monitoring.ExponentialBuckets object at 0x7f6e7542dc48>> Traceback (most recent call last): File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/monitoring.py", line 407, in __del__ AttributeError: 'NoneType' object has no attribute 'TFE_MonitoringDeleteBuckets' root@9e19458b0d02:/workspace#

Hi @SukyoungCho! It looks like I get similar warnings in step 1, but I have never seen the error with the ‘NoneType’ object. Sorry, I don’t know what to do about this error.

@mpa74 If a SavedModel is getting generated by step 1, the logs just seem to be warnings based on APIs etc. You can ignore them

For step 2, you also need this for conversion:

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.TFLITE_BUILTINS]

The final post-processing op needs to run in float, there is no quantized version for it.

@srjoglekar246 Hi, I really appreciate your help! It's been a while, but I'm still struggling to export a model as a uint8-quantized .tflite model. In the meantime, I have tried to convert not only SSD models but also EfficientDet and CenterNet, and all of them failed.

If you don't mind, could you elaborate on what you mean by "The final post-processing op needs to run in float, there is no quantized version for it"? It is confusing to me, since the models from the TF1 OD API can be uint8-quantized, converted to TFLite, and compiled for the Edge TPU even though they contain post-processing ops. Is it due to a difference in the TFLite conversion processes for TF1 and TF2?

To be honest, the reason I was looking for a uint8 EfficientDet was to deploy it on a board with a Coral accelerator. I have been using ssd mobiledet_dsp_coco from the TF1 OD API, but its accuracy was not satisfactory (while detecting only 'person', the AP was about 38 and there were far too many false positives). However, I found that on the same image set, CenterNet and EfficientDet showed an extremely low false-positive rate (I ran this inference on the server, not on the board).

So I would very much appreciate it if you could release uint8 quantization for EfficientDet or CenterNet so that I can compile it for the Edge TPU and deploy it on a board! Alternatively, could you tell me whether there are any models better than SSD MobileDet that can be quantized to uint8, or an EfficientDet for TF1? Thank you so much!

It looks like the Coral team has uncovered some issues with quantization of detection models. Will ping back once I have updates.

@mpa74 If a SavedModel is getting generated by step 1, the logs just seem to be warnings based on APIs etc. You can ignore them

For step 2, you also need this for conversion:

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.TFLITE_BUILTINS]

The final post-processing op needs to run in float, there is no quantized version for it.

@srjoglekar246 Thank you! I would appreciate it if you could explain what you mean by this!

@SukyoungCho So the basic idea is that multi-class Non-Max Suppression for SSD is written as a 'custom' op in TFLite. This is because if we try to directly convert TF's logic for multi-class NMS, it's too complex to be efficient on mobile. This custom op is what takes in boxes/anchors/etc. and returns the final (post-processed) boxes, classes, scores, etc. from the model. The logic performed by this op isn't really implemented on most of our delegates (and might not even make sense on backends like the GPU), so it doesn't run on them. Even for quantized models from TF1, the heavy backbone of the model (all conv/depthwise conv ops etc.) runs in quantized mode (possibly on GPU/DSP etc.), but the post-processing runs on the CPU, since the custom SSD op is only implemented on CPU.
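To make the post-processing step a bit more concrete, here is a minimal single-class sketch of greedy non-max suppression in plain Python. It only illustrates the general idea and is not the code of the TFLite custom op; the function names `iou` and `nms`, the `[ymin, xmin, ymax, xmax]` box layout, and the 0.5 threshold are assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as [ymin, xmin, ymax, xmax]."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes overlap heavily.
boxes = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.05, 1.0, 1.05], [2.0, 2.0, 3.0, 3.0]]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)
```

The sort and the data-dependent loop are the kind of control flow that is awkward to express with quantized tensor ops, which is consistent with the explanation above that this step stays on the CPU in float.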

The conversion completed successfully in about 30 minutes without any problems. At present, it cannot be made to work properly unless you install tf-nightly. There is no difference in behavior between tf.int8 and tf.uint8.

I hope this is helpful to you all.

### Uninstalling the existing TensorFlow packages

$ sudo pip3 uninstall tensorboard-plugin-wit tb-nightly tensorboard \
    tf-estimator-nightly tensorflow-gpu \
    tensorflow tf-nightly tensorflow_estimator

### Installation of tf-nightly

$ sudo pip3 install tf-nightly

### export

$ export PYTHONPATH=$PYTHONPATH:${HOME}/git/models
$ cd ${HOME}/git/models/research
$ python3 object_detection/export_tflite_graph_tf2.py \
    --pipeline_config_path ${HOME}/Downloads/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/pipeline.config \
    --trained_checkpoint_dir ${HOME}/Downloads/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/checkpoint \
    --output_directory ${HOME}/Downloads/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8/export_tflite_graph

### saved_model to tflite

### tf-nightly==2.5.0-dev20201128

import tensorflow as tf

import tensorflow_datasets as tfds

import numpy as np

def representative_dataset_gen():
    for data in raw_test_data.take(10):
        image = data['image'].numpy()
        image = tf.image.resize(image, (320, 320))
        image = image[np.newaxis,:,:,:]
        image = image - 127.5
        image = image * 0.007843
        yield [image]

raw_test_data, info = tfds.load(name="coco/2017", with_info=True, split="test", data_dir="~/TFDS", download=False)

converter = tf.lite.TFLiteConverter.from_saved_model('export_tflite_graph/saved_model')

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS, tf.lite.OpsSet.SELECT_TF_OPS]

tflite_model = converter.convert()

with open('{}/model_float32.tflite'.format('export_tflite_graph/saved_model'), 'wb') as w:
    w.write(tflite_model)

print("tflite convert complete! - {}/model_float32.tflite".format('export_tflite_graph/saved_model'))

converter = tf.lite.TFLiteConverter.from_saved_model('export_tflite_graph/saved_model')

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.SELECT_TF_OPS]

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

converter.allow_custom_ops = True

converter.representative_dataset = representative_dataset_gen

tflite_model = converter.convert()

with open('{}/model_full_integer_quant.tflite'.format('export_tflite_graph/saved_model'), 'wb') as w:
    w.write(tflite_model)

print("tflite convert complete! - {}/model_full_integer_quant.tflite".format('export_tflite_graph/saved_model'))
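The two arithmetic lines in representative_dataset_gen above rescale 8-bit pixel values into roughly [-1, 1], since 0.007843 is approximately 1/127.5. The small check below works through that arithmetic in plain Python; `normalize_pixel` is a hypothetical helper name used only for this illustration, and it assumes the calibration images should be preprocessed the same way as the inputs the model sees at inference time.

```python
def normalize_pixel(value, mean=127.5, scale=0.007843):
    """Apply the same shift and scale as the representative dataset generator."""
    return (value - mean) * scale


# Darkest, middle, and brightest 8-bit pixel values.
lo, mid, hi = (normalize_pixel(v) for v in (0, 127.5, 255))
print(lo, mid, hi)
```

If the calibration data were fed in a different range (for example raw 0 to 255 values), the quantization ranges recorded during conversion would presumably not match real inputs.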

$ edgetpu_compiler -s export_tflite_graph/saved_model/model_full_integer_quant.tflite

Edge TPU Compiler version 15.0.340273435

Input: export_tflite_graph/saved_model/model_full_integer_quant.tflite

Output: model_full_integer_quant_edgetpu.tflite

Operator            Count   Status

PACK                4       Tensor has unsupported rank (up to 3 innermost dimensions mapped)
RESHAPE             6       Mapped to Edge TPU
RESHAPE             4       More than one subgraph is not supported
RESHAPE             2       Operation is otherwise supported, but not mapped due to some unspecified limitation
CONCATENATION       1       Operation is otherwise supported, but not mapped due to some unspecified limitation
CONCATENATION       1       More than one subgraph is not supported
LOGISTIC            1       Operation is otherwise supported, but not mapped due to some unspecified limitation
CUSTOM              1       Operation is working on an unsupported data type
ADD                 10      Mapped to Edge TPU
ADD                 2       More than one subgraph is not supported
CONV_2D             14      More than one subgraph is not supported
CONV_2D             58      Mapped to Edge TPU
DEQUANTIZE          1       Operation is otherwise supported, but not mapped due to some unspecified limitation
DEQUANTIZE          1       Operation is working on an unsupported data type
QUANTIZE            4       More than one subgraph is not supported
QUANTIZE            6       Mapped to Edge TPU
DEPTHWISE_CONV_2D   14      More than one subgraph is not supported
DEPTHWISE_CONV_2D   37      Mapped to Edge TPU

Note that SSD MobileNet v2 320x320 needed to be fixed due to a strange configuration in pipeline.config.

model {
  ssd {
    num_classes: 90
    image_resizer {
      fixed_shape_resizer {
        height: 300
        width: 300
      }
    }
    feature_extractor {
      type: "ssd_mobilenet_v2_keras"
      depth_multiplier: 1.0

to

model {
  ssd {
    num_classes: 90
    image_resizer {
      fixed_shape_resizer {
        height: 320
        width: 320
      }
    }
    feature_extractor {
      type: "ssd_mobilenet_v2_keras"
      depth_multiplier: 1.0

Model successfully compiled but not all operations are supported by the Edge TPU. A percentage of the model will instead run on the CPU, which is slower. If possible, consider updating your model to use only operations supported by the Edge TPU. For details, visit g.co/coral/model-reqs.

Number of operations that will run on Edge TPU: 109

Number of operations that will run on CPU: 3

Operator            Count   Status

CUSTOM              1       Operation is working on an unsupported data type
ADD                 10      Mapped to Edge TPU
CONCATENATION       2       Mapped to Edge TPU
RESHAPE             13      Mapped to Edge TPU
LOGISTIC            1       Mapped to Edge TPU
DEPTHWISE_CONV_2D   17      Mapped to Edge TPU
QUANTIZE            11      Mapped to Edge TPU
CONV_2D             55      Mapped to Edge TPU
DEQUANTIZE          2       Operation is working on an unsupported data type
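As a quick cross-check of a compiler report like the one above, the operator table can be tallied with a few lines of Python. This is only a sketch: the `summarize` function is a hypothetical helper, and it assumes each row has the form "operator, count, status" with the status text "Mapped to Edge TPU" marking ops that run on the accelerator.

```python
REPORT = """\
Operator Count Status
CUSTOM 1 Operation is working on an unsupported data type
ADD 10 Mapped to Edge TPU
CONCATENATION 2 Mapped to Edge TPU
RESHAPE 13 Mapped to Edge TPU
LOGISTIC 1 Mapped to Edge TPU
DEPTHWISE_CONV_2D 17 Mapped to Edge TPU
QUANTIZE 11 Mapped to Edge TPU
CONV_2D 55 Mapped to Edge TPU
DEQUANTIZE 2 Operation is working on an unsupported data type
"""


def summarize(report):
    """Count ops mapped to the Edge TPU versus ops left on the CPU."""
    on_tpu = on_cpu = 0
    for line in report.splitlines():
        parts = line.split(maxsplit=2)
        if len(parts) < 3 or not parts[1].isdigit():
            continue  # skip the header row and blank lines
        count, status = int(parts[1]), parts[2]
        if status == "Mapped to Edge TPU":
            on_tpu += count
        else:
            on_cpu += count
    return on_tpu, on_cpu


on_tpu, on_cpu = summarize(REPORT)
print(on_tpu, on_cpu)
```

The totals agree with the "109 on Edge TPU, 3 on CPU" summary printed by the compiler, and the three CPU ops are the custom post-processing op plus the two DEQUANTIZE ops feeding it.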

@PINTO0309 Hi ! Thank you for the detailed post! I used the exact same model as you and my version of tensorflow is : tf_nightly-2.5.0.dev20201202.

But I get the following error when I convert with the quantization:

Traceback (most recent call last):

File "C:\Program Files\JetBrains\PyCharm Community Edition 2020.1\plugins\python-ce\helpers\pydev\pydevd.py", line 1438, in _exec

pydev_imports.execfile(file, globals, locals) # execute the script

File "C:\Program Files\JetBrains\PyCharm Community Edition 2020.1\plugins\python-ce\helpers\pydev\_pydev_imps\_pydev_execfile.py", line 18, in execfile

exec(compile(contents+"\n", file, 'exec'), glob, loc)

File "C:/Users/brackman/Documents/Tensorflow/models/research/object_detection/ssd_mobilenet_quant/convertion.py", line 39, in <module>

tflite_model = converter.convert()

File "C:\Users\brackman\Anaconda3\envs\tf-nightly\lib\site-packages\tensorflow\lite\python\lite.py", line 767, in convert

result = _modify_model_io_type(result, **flags_modify_model_io_type)

File "C:\Users\brackman\Anaconda3\envs\tf-nightly\lib\site-packages\tensorflow\lite\python\util.py", line 853, in modify_model_io_type

model_object = _convert_model_from_bytearray_to_object(model)

File "C:\Users\brackman\Anaconda3\envs\tf-nightly\lib\site-packages\tensorflow\lite\python\util.py", line 579, in _convert_model_from_bytearray_to_object

model_object = schema_fb.Model.GetRootAsModel(model_bytearray, 0)

AttributeError: module 'tensorflow.lite.python.schema_py_generated' has no attribute 'Model'

When I look at this error it points me to #41846

Here is my code if you want to see if I did anything wrong

import tensorflow as tf

import numpy as np

data_dir = "C:/Users/brackman/Documents/Tensorflow/workspace/IVP_V4/data/test"

saved_model_dir="C:/Users/brackman/Documents/Tensorflow/models/research/object_detection/ssd_mobilenet_quant/tflite/saved_model"

def representative_dataset_gen():
    import glob
    from PIL import Image
    image_list = glob.glob("C:/Users/brackman/Documents/Tensorflow/workspace/IVP_V4/data/test/*.png")
    for data in image_list:
        image = np.asarray(Image.open(data))
        image = tf.image.resize(image, (320, 320))
        image = image[np.newaxis, :, :, :]
        image = image - 127.5
        image = image * 0.007843
        yield [image]

converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS, tf.lite.OpsSet.SELECT_TF_OPS]

tflite_model = converter.convert()

with open('{}/model_float32.tflite'.format('export_tflite_graph/saved_model'), 'wb') as w:
    w.write(tflite_model)

print("tflite convert complete! - {}/model_float32.tflite".format('export_tflite_graph/saved_model'))

converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.SELECT_TF_OPS]

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

converter.allow_custom_ops = True

converter.representative_dataset = representative_dataset_gen

tflite_model = converter.convert()

with open('{}/model_full_integer_quant.tflite'.format('export_tflite_graph/saved_model'), 'wb') as w:
    w.write(tflite_model)

print("tflite convert complete! - {}/model_full_integer_quant.tflite".format('export_tflite_graph/saved_model'))

@ChowderII I created a notebook that converts TF2 models to TFLite and works with Google Colab. Here is the link (click "Open in Colab" and run all): https://gist.github.com/NobuoTsukamoto/f48df315be490dcf0c76375c2e04ddb1#file-export_tfv2_lite_models-ipynb

There were no errors in Colab.

(See below for uploading and downloading files to Colab. https://colab.research.google.com/notebooks/io.ipynb)

@ChowderII can you check to see if "C:\Users\brackman\Anaconda3\envs\tf-nightly\lib\site-packages\tensorflow\lite\python\schema_py_generated.py" file is empty?

I was getting the same error you were getting, and it turns out tensorflow has some sort of a bug, where schema_py_generated.py is empty with a pip installation.
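A check like the one suggested above can be scripted. The sketch below uses only the standard library; `is_effectively_empty` and `schema_module_path` are hypothetical helper names, and the relative path lite/python/schema_py_generated.py is taken from the file path quoted in this thread. The demonstration runs against a temporary directory rather than a real TensorFlow installation.

```python
import os
import tempfile


def is_effectively_empty(path):
    """True if the file is missing, zero bytes, or contains only whitespace."""
    if not os.path.isfile(path):
        return True
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        return f.read().strip() == ""


def schema_module_path(tf_package_dir):
    """Path of schema_py_generated.py below a TensorFlow package directory."""
    return os.path.join(tf_package_dir, "lite", "python", "schema_py_generated.py")


# Demonstration with a stand-in directory instead of a real installation.
with tempfile.TemporaryDirectory() as fake_tf_dir:
    target = schema_module_path(fake_tf_dir)
    os.makedirs(os.path.dirname(target))
    open(target, "w").close()  # simulate the broken, empty file
    empty_result = is_effectively_empty(target)
    with open(target, "w") as f:
        f.write("class Model:\n    pass\n")
    filled_result = is_effectively_empty(target)

print(empty_result, filled_result)
```

With TensorFlow installed, the package directory could presumably be obtained as `os.path.dirname(tensorflow.__file__)` and passed to `schema_module_path`.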

@aeozyalcin Yes it is empty! How odd ...

Also, @NobuoTsukamoto thank you so much for this, I will upload my dataset and my model there first thing tomorrow and try it!

@ChowderII

See this post. I replaced my empty file with the one attached to this comment, and was able to convert quantized tflite models that way.

https://github.com/tensorflow/tensorflow/issues/44882#issuecomment-729274083

It's definitely a problem that only reproduces in a Windows environment. If you look at the contents of .whl, it is obvious.

@aeozyalcin Your solution worked! Omg I'm so happy! @NobuoTsukamoto Thanks for your solution, I'm sure yours is going to work as well, but the other one was a lot simpler for my use case!

@NobuoTsukamoto Thanks for sharing the notebook. However, I have a question: I see you set converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.TFLITE_BUILTINS]. Does this mean that when an op has no integer-quantized implementation, the converter uses the regular TFLite builtin op instead? If so, how does the model run on a device using only integer ops?

@tucachmo2202 SSD models typically do not run only in integer format. The post-processing (NMS) step works best in float, so only the heavy Convolutional backbone (for eg, MobileNet) is run quantized.
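The hand-off between the quantized backbone and the float post-processing relies on the affine mapping used by TFLite quantization, real_value = scale * (quantized_value - zero_point). The sketch below works through that arithmetic in plain Python; the `scale` and `zero_point` values are made up for illustration and do not come from any model in this thread.

```python
def quantize(real, scale, zero_point, qmin=-128, qmax=127):
    """Map a float to int8 with the affine scheme, clamping to the int8 range."""
    q = round(real / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the (approximate) float value from a quantized integer."""
    return scale * (q - zero_point)


scale, zero_point = 0.5, -3  # hypothetical quantization parameters
q = quantize(10.0, scale, zero_point)
restored = dequantize(q, scale, zero_point)
clipped = quantize(1000.0, scale, zero_point)
print(q, restored, clipped)
```

DEQUANTIZE ops in a converted graph perform the second mapping, so the float post-processing step receives ordinary float tensors even though the convolutions ran in integer arithmetic.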

Note that SSD MobileNet v2 320x320 needed to be fixed due to a strange configuration in pipeline.config.

* SSD MobileNet v2 320x320 http://download.tensorflow.org/models/object_detection/tf2/20200711/ssd_mobilenet_v2_320x320_coco17_tpu-8.tar.gz

model { ssd { num_classes: 90 image_resizer { fixed_shape_resizer { height: 300 width: 300 } } feature_extractor { type: "ssd_mobilenet_v2_keras" depth_multiplier: 1.0

to

model { ssd { num_classes: 90 image_resizer { fixed_shape_resizer { height: 320 width: 320 } } feature_extractor { type: "ssd_mobilenet_v2_keras" depth_multiplier: 1.0

Model successfully compiled but not all operations are supported by the Edge TPU. A percentage of the model will instead run on the CPU, which is slower. If possible, consider updating your model to use only operations supported by the Edge TPU. For details, visit g.co/coral/model-reqs.

Number of operations that will run on Edge TPU: 109
Number of operations that will run on CPU: 3

Operator            Count   Status

CUSTOM              1       Operation is working on an unsupported data type
ADD                 10      Mapped to Edge TPU
CONCATENATION       2       Mapped to Edge TPU
RESHAPE             13      Mapped to Edge TPU
LOGISTIC            1       Mapped to Edge TPU
DEPTHWISE_CONV_2D   17      Mapped to Edge TPU
QUANTIZE            11      Mapped to Edge TPU
CONV_2D             55      Mapped to Edge TPU
DEQUANTIZE          2       Operation is working on an unsupported data type

I have the same problem you had. Could you explain how you solved it? I don't use the pipeline.config file at all, but you mention it, so I guess it is important. I am using TF Nightly 2.6.0.

Thanks in advance and congratulations on your fabulous work!

Hello everyone,

I am currently struggling with this same issue. My setup is as follows: the configuration for ssd_mobilenet_v2 is almost default, but I am training from scratch on a custom dataset with 614x514 images and only 3 classes. I further reduced the maximum number of detections per image to the maximum expected in my dataset. I trained a model successfully using TensorFlow 2.5 and Python 3.9. The model I created was successfully used for inference, so the saved model (.pb format) is valid in itself.

Now I want to use Edge TPU acceleration and thus need to convert this model to TFLite for further compilation. This, however, fails miserably. The things I have tried:

I "successfully" converted it to a TFLite model with this code:

MODEL_PATH = "your/abs/path/to/folder/saved_model"

MODEL_SAVE_PATH = "your/target/save/location"

converter = tf.lite.TFLiteConverter.from_saved_model(MODEL_PATH ,signature_keys=['serving_default'])

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.experimental_new_converter = True

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS, tf.lite.OpsSet.SELECT_TF_OPS]

tflite_model = converter.convert()

with tf.io.gfile.GFile(MODEL_SAVE_PATH, 'wb') as f:
    f.write(tflite_model)

This, however, is neither 8-bit quantized nor usable in any way. TensorFlow Lite is not able to use this model for inference and simply quits without any message when calling interpreter.invoke(). The Coral edgetpu_compiler can't even parse the model.

I have also tried a multitude of different converter settings on different tensorflow/python versions including the following:

converter.representative_dataset = gen_function

converter.inference_input_type = tf.float32/tf.int8/tf.uint8

converter.inference_output_type = tf.float32/tf.int8/tf.uint8

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.allow_custom_ops=True

(Most of those lead to the error Exception: <unknown>:0: error: loc("Func/StatefulPartitionedCall/input/_1"): requires all operands to be either same as or ref type of results)

I have attached the model data as well as the tflite file in a zip in case this is necessary. Please let me know if you have any idea regarding this issue. I have spent the last week looking for a solution to this and feel like I did not manage to get one step further.

Some general questions that remain for me are: Is it even possible to convert this model config on tensorflow 2.5? If not can a model that has been trained on tensorflow 2.5 be converted on a different tensorflow version or are they not compatible? Is it an issue that i used 614x514px as image resolution? Is there any other way to confirm the success of a conversion except for running inference?

Thank you for reading this far and for any ideas you can provide.

@CaptainPineapple Did you follow these instructions to convert the model? Specifically, the exporting script that produces a TFLite-compatible SavedModel for the TFLite converter that you have used. This Colab also shows an end-to-end TFLite example.

thx for this really quick reply!

yes so far this is the approach that i followed. (not sure if you intended to post the same link 3 times though?)

Step 1, converting the model from checkpoints to a .pb, finishes correctly. The resulting model is also attached to my last post. From what I can tell this model works correctly, as I can reload it in a script and successfully run inference with it.

I'll leave my script for step 2 here; please correct me if there are any really stupid mistakes in there. The detour from the model through a concrete function is needed because the converter otherwise complains that the input size has not been set.

MODEL_SAVE_PATH = os.path.join(dir_path, "ML", "tf_lite_models","model.tflite")

IMAGE_BASE_PATH = os.path.join(dir_path, "tf", "models", "images","eval")

TEST_IMAGE_PATH = os.path.join(IMAGE_BASE_PATH, "image_20210421-174344.jpg")

MODEL_PATH = os.path.join(dir_path, "tf","data_ssd","saved_model")

def representative_dataset_gen():
    data = tf_training_helper.load_images_in_folder_to_numpy_array(IMAGE_BASE_PATH)
    (count, x,y,c) = data.shape
    for i in range(count):
        yield [data[i,:,:,:].astype(np.uint8)]

# load testimage into numpy array of form: (1, w, h, 3)

input_data = tf_training_helper.load_image_into_numpy_array(TEST_IMAGE_PATH)

print("startup\nrunning with tf version: ", tf.__version__)

print("loading model")

# Load the SavedModel.

saved_model_obj = tf.saved_model.load(export_dir=MODEL_PATH)

# Load the specific concrete function from the SavedModel.

concrete_func = saved_model_obj.signatures['serving_default']

print("setting input shape: ", input_data.shape)

# Set the shape of the input in the concrete function.

concrete_func.inputs[0].set_shape(input_data.shape)

# Create tf-lite converter from concrete function:

converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func])

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8, tf.lite.OpsSet.TFLITE_BUILTINS]

converter.representative_dataset = representative_dataset_gen

tflite_model = converter.convert()

print("model conversion finished. Starting validation... ")

interpreter = tf.lite.Interpreter(model_content=tflite_model)

interpreter.allocate_tensors()

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

interpreter.set_tensor(input_details[0]['index'], input_data)

interpreter.invoke()

In my opinion, this version is exactly what the documentation you linked suggests should work. However, it results in this error:

Some ops are not supported by the native TFLite runtime, you can enable TF kernels fallback using TF Select. See instructions: https://www.tensorflow.org/lite/guide/ops_select

TF Select ops: ConcatV2

Details:

tf.ConcatV2

This error can be "fixed" by allowing custom ops (converter.allow_custom_ops=True), which then leads to the next error:

ValueError: Failed to parse the model: pybind11::init(): factory function returned nullptr.

This was already mentioned by SukyoungCho on 14 Oct 2020, but I was not able to find a solution to this error in this thread.

Can you mention how you used export_tflite_graph_tf2.py? And sorry about the wrong Colab link, this is correct one.

oh my... thank you so much.... I just realized that my conversion batch script did not use the correct exporting script.

I was using exporter_main_v2.py instead of export_tflite_graph_tf2.py .... such a stupid mistake...

So far things look more promising. Model conversion now terminates successfully with the default settings, and the interpreter of the resulting tflite model actually finishes the inference step. However, all output values read like this are just 0.0:

out_boxes = interpreter.get_tensor(output_details[0]['index'])

out_classes = interpreter.get_tensor(output_details[1]['index'])

out_scores = interpreter.get_tensor(output_details[2]['index'])

Might that be a matter of fine-tuning the representative dataset? It also seems a bit weird that the boxes output is a single value.

The TF version you are using for conversion should be >=2.4. If an earlier version is used, the converter doesn't work with what the exporting script produces.

i am running this on tf version 2.5.0

Can you check the model size? If the conversion is happening incorrectly, the model should be very small (a few KB). Also, maybe try float conversion first?
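The size check suggested here is easy to automate. The sketch below uses only the standard library; `looks_truncated` is a hypothetical helper, and the 10 KB threshold is an assumption loosely based on the "few KB" rule of thumb above, not an official limit. The demonstration writes a dummy 512-byte file instead of a real model.

```python
import os
import tempfile


def looks_truncated(tflite_path, min_bytes=10 * 1024):
    """Flag a .tflite file that is suspiciously small for a detection model."""
    return os.path.getsize(tflite_path) < min_bytes


# Dummy stand-in for a broken conversion result.
with tempfile.NamedTemporaryFile(suffix=".tflite", delete=False) as f:
    f.write(b"\0" * 512)
    tiny_path = f.name

tiny_is_suspicious = looks_truncated(tiny_path)
os.remove(tiny_path)
print(tiny_is_suspicious)
```

A file that passes this check is not necessarily valid; it only rules out the failure mode where the converter emits an almost empty graph.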

so far integer quantization is not active. All still on default settings with float32 as datatype. tflite model file is currently on 440 bytes. That seems a bit small i guess?

Yeah the converter doesn't seem to be working, and this was mainly observed when the converter was using an older version of TFLite. Can you try in a virtualenv with the latest TF?

okay, thanks will try that. Will need some time to set that up though as i currently do not have anaconda installed. Will report back with the results. Either way thank you so much for your support so far!

So i set up anaconda with python 3.9 and added tensorflow with all dependencies. I retrained my model over night on this fresh install and then did the checkpoint to saved_model conversion as well as the saved_model to tflite conversion from the py39 virtual env. The result remains the same with 440 bytes of model size.

EDIT: to make sure the Object Detection API is installed correctly, I wiped the existing installation and reran all setup steps. model_build_tf2_tests runs without issues.

EDIT2:

my guess at this point is that the conversion to saved_model format already fails.

After re-adding the evaluation step to my script, where I simply try to process a test image with the loaded model, the result is unusable, as the result object looks like this:

outputs: [<tf.Tensor 'Identity:0' shape=() dtype=float32>, <tf.Tensor 'Identity_1:0' shape=() dtype=float32>, <tf.Tensor 'Identity_2:0' shape=() dtype=float32>, <tf.Tensor 'Identity_3:0' shape=() dtype=float32>]

while the model converted with exporter_main_v2.py shows this output for the same code:

outputs: [<tf.Tensor 'Identity:0' shape=(1, 100) dtype=float32>, <tf.Tensor 'Identity_1:0' shape=(1, 100, 4) dtype=float32>, <tf.Tensor 'Identity_2:0' shape=(1, 100) dtype=float32>, <tf.Tensor 'Identity_3:0' shape=(1, 100, 4) dtype=float32>, <tf.Tensor 'Identity_4:0' shape=(1, 100) dtype=float32>, <tf.Tensor 'Identity_5:0' shape=(1,) dtype=float32>, <tf.Tensor 'Identity_6:0' shape=(1, 6669, 4) dtype=float32>, <tf.Tensor 'Identity_7:0' shape=(1, 6669, 4) dtype=float32>]

i'll investigate this further to try and figure out why this happens. I assume that the latter version is the correct desired output.

EDIT3:

i guess the prior described difference in outputs is not an error and rather a misunderstanding on my side: Am i correct that the result from export_tflite_graph_tf2 is only to be used to further transform it into a tf-lite model?

This assumption is taken from the following comment:

https://github.com/tensorflow/models/issues/9033#issuecomment-724649515

i guess the prior described difference in outputs is not an error and rather a misunderstanding on my side: Am i correct that the result from export_tflite_graph_tf2 is only to be used to further transform it into a tf-lite model?

Yup :-)

I wonder why your TFLite model isn't coming out right though. Can you try running this workflow in your environment? If the code in that Colab runs, then the problem is with what we are doing for your use-case. If the Colab also fails, the issue is probably with the system setup.

i'll try but even the start is already a bit messy as it apparently is not made to run on python 3.9. some of the module require time.clock which has been removed starting at python 3.8. This already excludes the use of the following modules: object_detection.utils.colab_utils IPython.display.Image IPython.display.display Are there any parts that you'd deem skippable while still providing information if it is a setup or model issue?

EDIT: I guess skipping parts is not really possible, as it all builds on top of each other. I'll try refactoring it as far as possible.
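
For the time.clock removal specifically, one low-effort workaround is a small compatibility shim applied before the affected modules are imported. This is a sketch under the assumption that those modules only call time.clock() to measure elapsed time; time.perf_counter is used as the stand-in here, and time.process_time would be the alternative if CPU time is what the caller actually wants.

```python
import time

# time.clock was removed in Python 3.8. Restore the name only if it is
# missing, so older interpreters keep their original implementation.
# Assumption: callers only use it for elapsed-time measurement.
if not hasattr(time, "clock"):
    time.clock = time.perf_counter

# Import the modules that depend on time.clock only after this point.
start = time.clock()
elapsed = time.clock() - start
```

This patches the standard library module at runtime, so it is best treated as a stopgap for running a notebook rather than a permanent fix.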

Hi, I was wondering if anyone could help me convert and quantize the SSD models in the TF2 Object Detection Model Zoo. There seems to be a difference between converting to .tflite in TF1 and in TF2. To the best of my knowledge, in TF1 we first froze the model using the exporter and then quantized and converted it into .tflite, and I had no problem doing that in TF1. The models I tried were

However, when I followed the guidelines provided in the GitHub repo, 1. (https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/running_on_mobile_tf2.md#step-1-export-tflite-inference-graph) and 2. (https://www.tensorflow.org/lite/performance/post_training_quantization#full_integer_quantization), I was not able to convert them into .tflite.

Running "Step 1: Export TFLite inference graph" created a saved_model.pb file in the given output dir (inside ./saved_model/). However, it displayed the suspicious messages below while exporting, and I am not sure whether it ran properly.

Running "Step 2: Convert to TFLite" is a pain in the ass. I managed to convert the model generated in Step 1 into .tflite without any quantization by following the given command, although I am not sure whether it can be deployed on mobile devices.

But I am trying to deploy it on a board with the Coral accelerator and need to convert the model into 'uint8' format. I thought the models provided in the model zoo were not trained with quantization-aware training (QAT), and hence they require post-training quantization (PTQ). Using the command line below,

It shows the error message below, and I am not able to convert the model into .tflite format. I think the error occurs because something went wrong in the first step.

Below, I am attaching the sample script I used to run "Step 2". I have never trained a model; I am just trying to check whether it is possible to convert the SSD models in the TF2 OD API Model Zoo into uint8-format .tflite. That is why I don't have the sample data used to train the model and am just using the MNIST data in Keras to save the time and cost of creating data. (checkpoint CKPT = 0)

The environment description:
CUDA = 10.1
Tensorflow = 2.3, 2.2 (both are tried)
TensorRT =
ii libnvinfer-plugin6 6.0.1-1+cuda10.1 amd64 TensorRT plugin libraries
ii libnvinfer6 6.0.1-1+cuda10.1 amd64 TensorRT runtime libraries

I would appreciate it if anyone could help solve the issue or provide a guideline. @srjoglekar246 Would you be able to provide a guideline or help me convert the models into uint8? I hope you documented the process while you were enabling SSD models to be converted into .tflite. Thank you so much.
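
For readers following along, here is a minimal sketch of what a full-integer post-training quantization step for "Step 2" usually looks like. It is not the poster's script. The helper names `make_representative_dataset` and `convert_full_integer` are hypothetical, the converter settings follow the full integer quantization section of the TensorFlow Lite guide linked above, and whether they succeed for the SSD models discussed here is not confirmed by this thread. Note that calibration samples should resemble real model inputs: MNIST digits are 28x28 grayscale, so `preprocess` would at least have to resize them and expand them to three channels to match whatever input shape the exported model expects.

```python
def make_representative_dataset(images, preprocess, num_samples=100):
    """Build a generator function in the form the TFLite converter expects.

    `images` is any indexable collection of raw samples and `preprocess`
    turns one sample into a model-ready input tensor (both are assumptions
    supplied by the caller).
    """
    def representative_dataset_gen():
        for i in range(num_samples):
            # Cycle through the available samples; yield a list with one
            # entry per model input.
            yield [preprocess(images[i % len(images)])]

    return representative_dataset_gen


def convert_full_integer(saved_model_dir, representative_dataset_gen):
    """Convert a TFLite-friendly SavedModel to a uint8-I/O model (sketch)."""
    import tensorflow as tf  # imported lazily; only needed for conversion

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset_gen
    # Restrict to integer-only builtin ops, as the full-integer guide does.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8
    return converter.convert()  # serialized .tflite model as bytes
```

The `saved_model_dir` argument should point at the SavedModel written by export_tflite_graph_tf2, not at the SavedModel downloaded from the model zoo.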
