Equalization Loss for Long-Tailed Object Recognition

Jingru Tan, Changbao Wang, Buyu Li, Quanquan Li, Wanli Ouyang, Changqing Yin, Junjie Yan

:warning: We recommend using the EQLv2 repository (code), which is based on mmdetection. It also includes EQL and other algorithms, such as cRT (classifier re-training) and BAGS (Balanced Group Softmax).

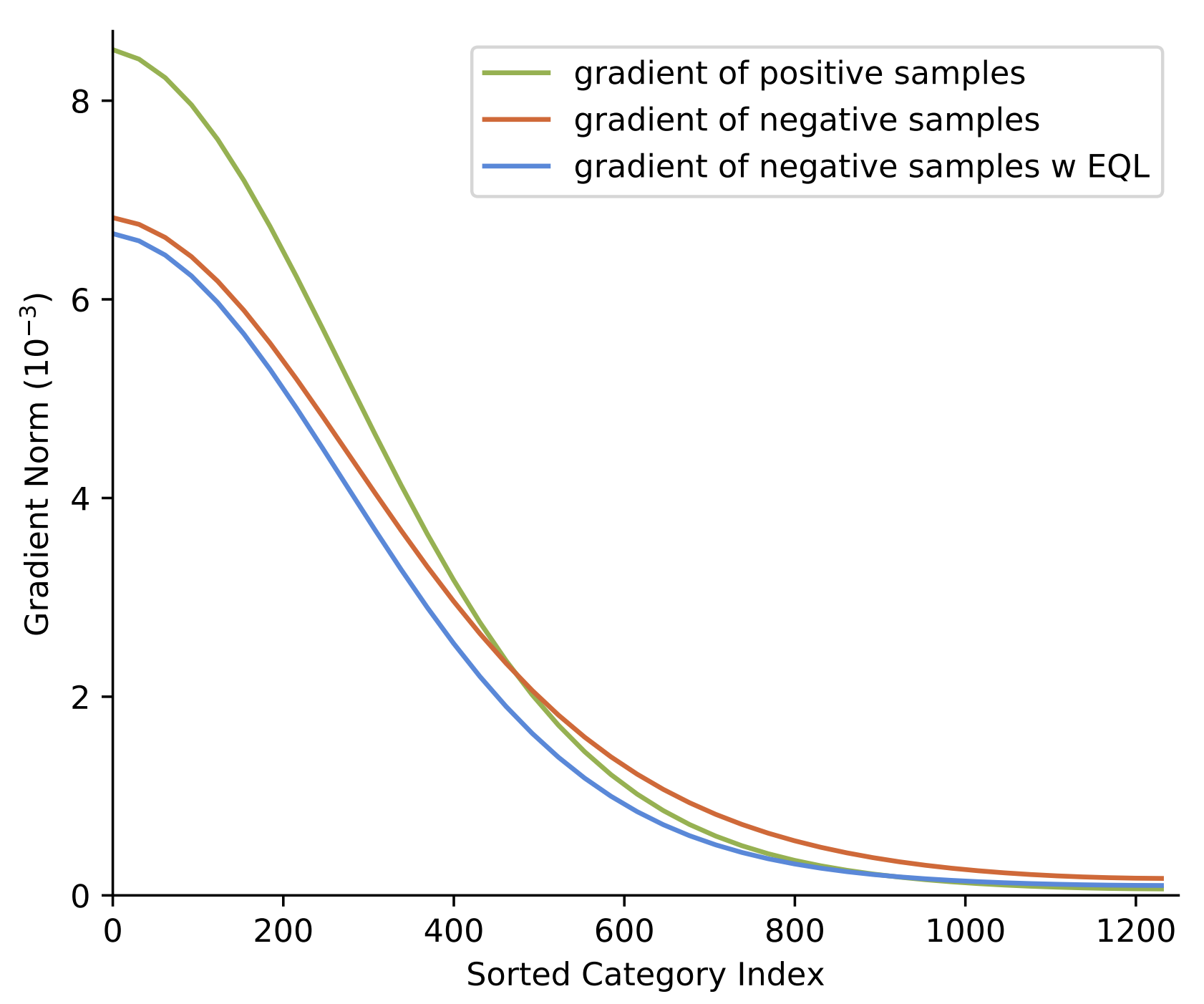

In this repository, we release code for Equalization Loss (EQL) in Detectron2. In the long-tailed setting, a positive sample of one category acts as a negative sample for every other category, so rare categories receive mostly discouraging gradients during network parameter updates. EQL protects the learning of rare categories by ignoring those gradients for them.

## Installation

Install Detectron2 following [INSTALL.md](https://github.com/facebookresearch/detectron2/blob/master/INSTALL.md). You are ready to go!

## LVIS Dataset

Follow the instructions in [README.md](https://github.com/facebookresearch/detectron2/blob/master/datasets/README.md) to set up the LVIS dataset.

## Training

To train a model with 8 GPUs, run:

```bash
cd /path/to/detectron2/projects/EQL
python train_net.py --config-file configs/eql_mask_rcnn_R_50_FPN_1x.yaml --num-gpus 8
```

## Evaluation

Model evaluation can be done similarly:

```bash
cd /path/to/detectron2/projects/EQL
python train_net.py --config-file configs/eql_mask_rcnn_R_50_FPN_1x.yaml --eval-only MODEL.WEIGHTS /path/to/model_checkpoint
```

# Pretrained Models

## Instance Segmentation on LVIS

| Backbone | Method | AP | AP.r | AP.c | AP.f | AP.bbox | Download |
|---|---|---|---|---|---|---|---|
| R50-FPN | MaskRCNN | 21.2 | 3.2 | 21.1 | 28.7 | 20.8 | model, metrics |
| R50-FPN | MaskRCNN-EQL | 24.0 | 9.4 | 25.2 | 28.4 | 23.6 | model, metrics |
| R50-FPN | MaskRCNN-EQL-Resampling | 26.1 | 17.2 | 27.3 | 28.2 | 25.4 | model, metrics |
| R101-FPN | MaskRCNN | 22.8 | 4.3 | 22.7 | 30.2 | 22.3 | model, metrics |
| R101-FPN | MaskRCNN-EQL | 25.9 | 10.0 | 27.9 | 29.8 | 25.9 | model, metrics |
| R101-FPN | MaskRCNN-EQL-Resampling | 27.4 | 17.3 | 29.0 | 29.4 | 27.1 | model, metrics |

AP is mask AP; AP.r, AP.c and AP.f are mask AP on the rare, common and frequent category groups of LVIS; AP.bbox is box AP.
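For readers who want to see what the loss computes, the following is a minimal, framework-free sketch of the sigmoid-based EQL as formulated in the paper; it is not the implementation in this repository. For a proposal `r` with one-hot label `y`, each binary cross-entropy term is weighted by `w_j = 1 - E(r) * T_lambda(f_j) * (1 - y_j)`, where `E(r)` is 1 for a foreground proposal and 0 for background, and `T_lambda(f_j)` is 1 when the category frequency `f_j` is below the threshold `lambda`. Assumptions: `f_j` is taken as the fraction of training images that contain category `j`, the function names `category_frequencies` and `eql_loss` are hypothetical, and the threshold in the example is purely illustrative. The actual Detectron2 code operates on batched tensors.

```python
import math
from typing import List, Optional, Sequence


def category_frequencies(image_counts: Sequence[int], num_images: int) -> List[float]:
    """Hypothetical helper: f_j = (#images containing category j) / (#images)."""
    return [count / num_images for count in image_counts]


def eql_loss(
    logits: Sequence[float],
    target: Optional[int],
    freqs: Sequence[float],
    lam: float,
) -> float:
    """Sigmoid Equalization Loss for a single proposal (illustrative sketch).

    logits: raw classifier scores z_j, one per foreground category.
    target: ground-truth category index, or None for a background proposal.
    freqs:  category frequencies f_j.
    lam:    frequency threshold lambda; categories with f_j < lam count as tail.
    """
    foreground = 0.0 if target is None else 1.0  # E(r)
    loss = 0.0
    for j, (z, f) in enumerate(zip(logits, freqs)):
        y = 1.0 if j == target else 0.0
        tail = 1.0 if f < lam else 0.0  # T_lambda(f_j)
        # w_j = 0 drops the discouraging (negative) term for a tail category
        # on a foreground proposal of another category; otherwise w_j = 1.
        w = 1.0 - foreground * tail * (1.0 - y)
        # Numerically stable binary cross-entropy with logits:
        # -y*log(sigmoid(z)) - (1-y)*log(1-sigmoid(z))
        bce = max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
        loss += w * bce
    return loss


if __name__ == "__main__":
    freqs = category_frequencies([500, 1, 2], num_images=1000)  # [0.5, 0.001, 0.002]
    logits = [0.0, 0.0, 0.0]
    # Foreground proposal of frequent category 0: both tail negatives are ignored.
    print(eql_loss(logits, 0, freqs, lam=0.01))     # log 2, about 0.693
    # Background proposal: E(r) = 0, so every term is kept (plain sigmoid BCE).
    print(eql_loss(logits, None, freqs, lam=0.01))  # 3 * log 2, about 2.079
```

Setting `lam` to 0 disables the exclusion and recovers the ordinary sigmoid cross-entropy, which makes it easy to compare the two losses on the same inputs.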