CSD: Consistency-Based Semi-Supervised Learning for Object Detection implementation in Detectron2

This repository contains an unofficial implementation of the method described in CSD paper by Jeong et al based on Detectron2 framework. It includes implementation for two-stage RFCN object detector only as single-stage detectors were not the focus of my research. It uses W&B to monitor the progress.

Table of Contents

- CSD: Consistency-Based Semi-Supervised Learning for Object Detection implementation in Detectron2

- Table of Contents

- Overview

- Installation

- Running scripts

- Results

- Additional notes

- Future features

- Credits

Overview

The goal of this project was to verify the effectiveness of the CSD method for two-stage object detectors, implement an easily configurable solution, and to learn the D2 framework. After successful implementation, due to the lack of time, the scope of experiments was limited to a quick, non-extensive set on some datasets. Below I put a table that summarizes the current results. Note:

- only RFCN models with ResNet50 backbone were used;

- when a CSD version is compared with a baseline it uses the same configuration, the only difference is the CSD regularization enabled.

All runs were tested on VOC07 test. To monitor the progress better, for CSD runs I logged multiple images with predictions from both RPN and ROI as the training progressed that you can check by following the wandb links below.

| name | labeled | unlabeled | csd_beta | iterations | result (AP@50) | link to Wandb logs |

|---|---|---|---|---|---|---|

| baseline | VOC07 | - | - | 17K | 75.8 % (authors': 73.9 %) | wandb |

| csd | VOC07 | VOC12 | 0.5 | 13K | 76.4 %, +0.6% (authors': 74.7 %) | wandb |

| baseline | 5% VOC07 | - | - | 4K | 41.7% | wandb |

| csd | 5% VOC07 | 95% VOC07 | 0.5 | 2.5K | 42.6%, +0.9% | wandb |

| baseline | 10% VOC07 | - | - | 4K | 51.2% | wandb |

| csd | 10% VOC07 | 90% VOC07 | 0.3 | 3K | 53.2%, +2.0% | wandb |

| baseline | 20% VOC07 | - | - | 4.5K | 61.5% | wandb |

| csd | 20% VOC07 | 80% VOC07 | 0.3 | 4.5K | 63.0%, +1.5% | wandb |

Comparison result can be found in this Wandb report. Using CSD improved the results, especially it helped in low-data scenarios.

In Overview of the project structure you can get an overview of the code. The core CSD logic (e.g. to copy to your project) is in CSDGeneralizedRCNN's forward() and CSDTrainer's run_step() (but keep in mind that they may depend on many other functions/classes).

Please note that the current implementation can be used with other datasets and backbones with no problem, and I plan to run some COCO experiments soon.

The author's official source code for RFCN can be found here (also see their SSD implementation), but I struggled to make it run and faced multiple memory issues.

Installation

Prerequisites

This repository follows D2's requirements which you can find in the next section. Below I just mention the configuration I used for some of the experiments.

Initial code was tested on a machine with Ubuntu 18.04, CUDA V11.1.105, cuDNN 7.5.1, 4xV100 GPUs, Python 3.7.10, torch==1.7.1, torchvision==0.8.2, and detectron2==0.4. it was run on an AWS EC2 machine. To check specifically which AMI (image) and commands I run on the machine, see this note.

Installing Detectron2

See D2's INSTALL.md.

Downloading data

Follow D2's guide on downloading the datasets.

You can also use the script provided inside the datasets/ folder to download VOC data by running sh download_voc.sh. Run this command inside the datasets/ folder.

Running scripts

Baseline

If you get ModuleNotFoundError: No module named 'csd' error, you can add PYTHONPATH=<your_prefix>/CSD-detectron2 to the beginninng of the command.

To reproduce baseline results (without visualizations) on VOC07, run the command below:

python tools/run_baseline.py --num-gpus 4 --config configs/voc/baseline_L=07_R50RFCN.yamlTo reproduce baseline results for 5% labeled on VOC07, run the command below:

python tools/run_baseline.py --num-gpus 4 --config configs/voc/baseline_L=5p07_R50RFCN.yamlYou could also run a CSD training script with CSD_WEIGHT_SCHEDULE_RAMP_BETA=0 (or CSD_WEIGHT_SCHEDULE_RAMP_T0=<some_large_number>) but its data loader produces x-flips for all images and requires IMS_PER_BATCH_UNLABELED to be at least 1, which would slow down the training process significantly. However, to make sure there are no bugs in CSD implementation, I actually tried running the CSD script with the foregoing parameters for 2K iterations and obtained the results similar to the baseline's.

The run_baseline.py script is a duplicate of D2's tools/train_net.py (link), I only add a few lines of code enable Wandb logging of scalars.

I used a configuration that D2 provides for training on VOC07+12 in PascalVOC-Detection/faster_rcnn_R_50_FPN.yaml and slightly modified it. They report reaching 51.9 mAP on VOC07 test when training on VOC07+12 trainval.

To speed up the experiments, for this project it was decided to use a model trained only on VOC07 trainval and use VOC07 test for testing. For both baseline and CSD experiments the training duration & LRL schedule were the same.

CSD

Run the command below to train a model with CSD on VOC07 trainval (labeled) and VOC12 (unlabeled):

python tools/run_net.py --num-gpus 4 --config configs/voc/csd_L=07_U=12_R50RFCN.yamlTo train a model with CSD on 5% VOC07 trainval (labeled) and 95% VOC07 trainval (unlabeled):

python tools/run_net.py --num-gpus 4 --config configs/voc/csd_L=5p07_U=95p07_R50RFCN.yamlTo resume the training, you can run the following (fyi: the current iteration is also checkpointed in D2):

python tools/run_net.py --resume --num-gpus 4 --config configs/voc/csd_L=07_U=12_R50RFCN.yaml MODEL.WEIGHTS output/your_model_weights.ptNote that the configuration file configs/voc/csd_L=07_U=12_R50RFCN.yaml extends the baseline's configs/voc/baseline_L=07_R50RFCN.yaml so one can compare what exactly was modified.

The details for all the configuration parameters can be found in csd/config/config.py. I tried to document most of parameters and after checking the CSD paper and its supplementary you should have no problems with understanding them (also, the code is extensively documented).

It is recommended to experiment with parameters, as I ran only several configurations.

Evaluation

Run the command below to evaluate your model on VOC07 test dataset:

python tools/run_net.py --eval-only --config configs/voc/csd_L=07_U=12_R50RFCN.yaml MODEL.WEIGHTS output/your_model_weights.ptResults

The results can be found in this Wandb report and the table above, I also made the project & runs public so you can check them in detail as well.

Using the foregoing configuration, the improvements from CSD regularization are only marginal. On several occasions CSD-runs outperformed baseline-runs. An important note to make is that introducing CSD regularization did not harm the model that hints that with the right hyperparameters it could actually help more.

Several things that I left for future experiments:

- Trying a different max_csd_weight;

- Increasing training duration;

- Increasing backbone's capacity, i.e. replacing ResNet50 with ResNet101.

Additional notes

Overview of the project structure

To better understand the structure of the project, I first recommend checking the official D2's quickstart guide, and the following blogposts [1, 2, 3] that describe the detectron2's projects standard structure.

I tried to leave extensive comments in each file so there should be no problem with following the logic once you are comfortable with D2. The main files to check are:

tools/run_net.pythe starting script that loads the configuration, initializes Wandb, creates the trainer manager and the model, and starts the training/evaluation loop;csd/config/config.pydefines the project-specific configuration; note: parameters in this file define default parameters, they are repeated inconfigs/voc/csd_L=07_U=12_R50RFCN.yamlsimply for convenience (e.g. runningpython tools/run_net.py --num-gpus 4should give you the same CSD results);csd/engine/trainer.pycontains the implementation of the training loop;CSDTrainerManagercontrols the training process taking care of data loaders/checkpointing/hooks/etc., whileCSDTrainerruns the actual training loop; as in many other files, I extend D2's default classes such asDefaultTrainerandSimpleTrainer, overriding some of their methods, modifying only the needed parts;csd/data/build.pybuilds the data loaders; it uses images that are loaded and x-flipped as defined incsd/data/mapper.py;csd/modeling/meta_arch/rcnn.pycontains the implementation of the forward pass for CSD; specifically,forward()implements the standard forward pass along with CSD logic and returns both of the losses (whichCSDTraineruses for backpropagation), andinference()implements inference logic; note: half of the code there concerns visualizations using Wandb in which I put a lot of effort for seamless monitoring of RPN's and ROI heads' progress;csd/modeling/roi_heads/roi_heads.pycontains several minor modifications of the defaultStandardROIHeadssuch as returning both predictions and losses during training (which is needed for CSD inrcnn.py);csd/utils/events.pyimplements an EventWriter that logs scalars to Wandb;csd/checkpoint/detection_checkpoint.pyimplements uploading a model's checkpoint to Wandb.

The core CSD logic (e.g. to copy to your project) is in CSDGeneralizedRCNN's forward() and CSDTrainer's run_step() (but keep in mind that they may depend on many other functions/classes).

Wandb

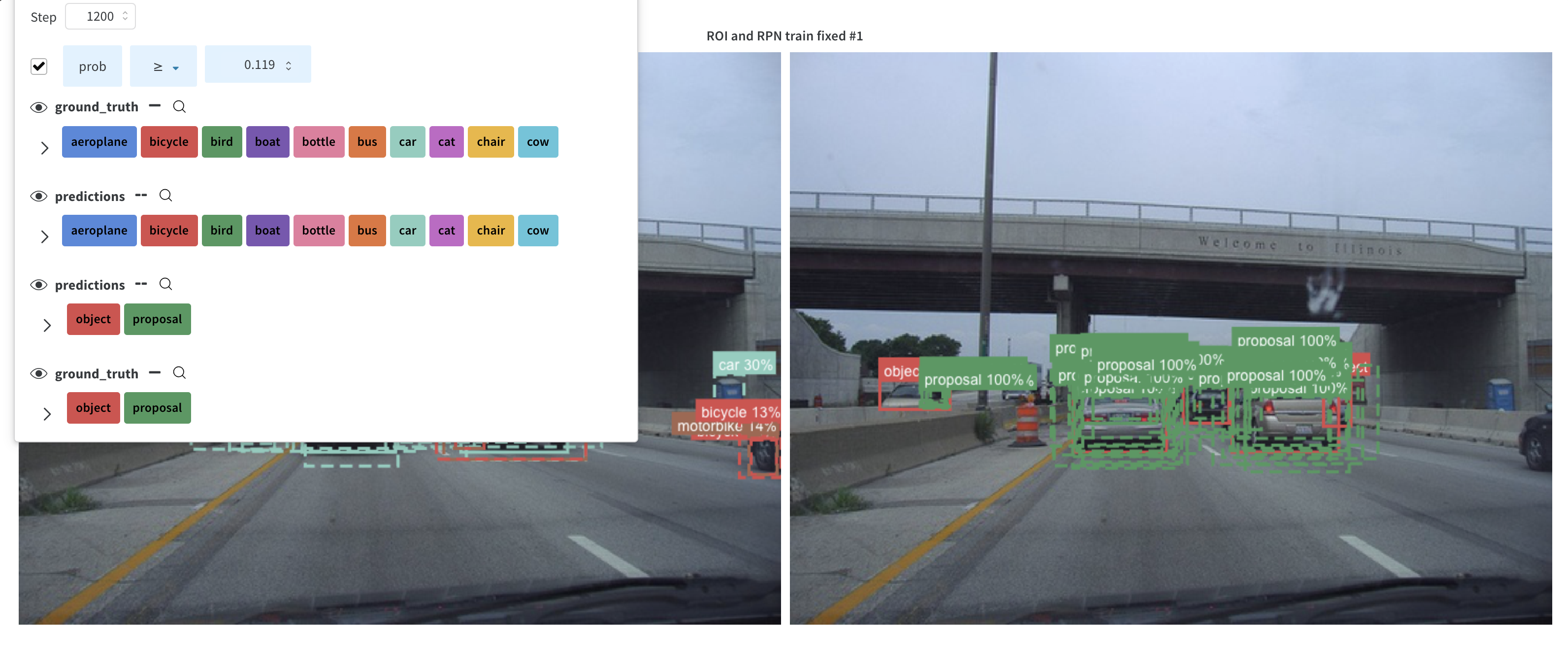

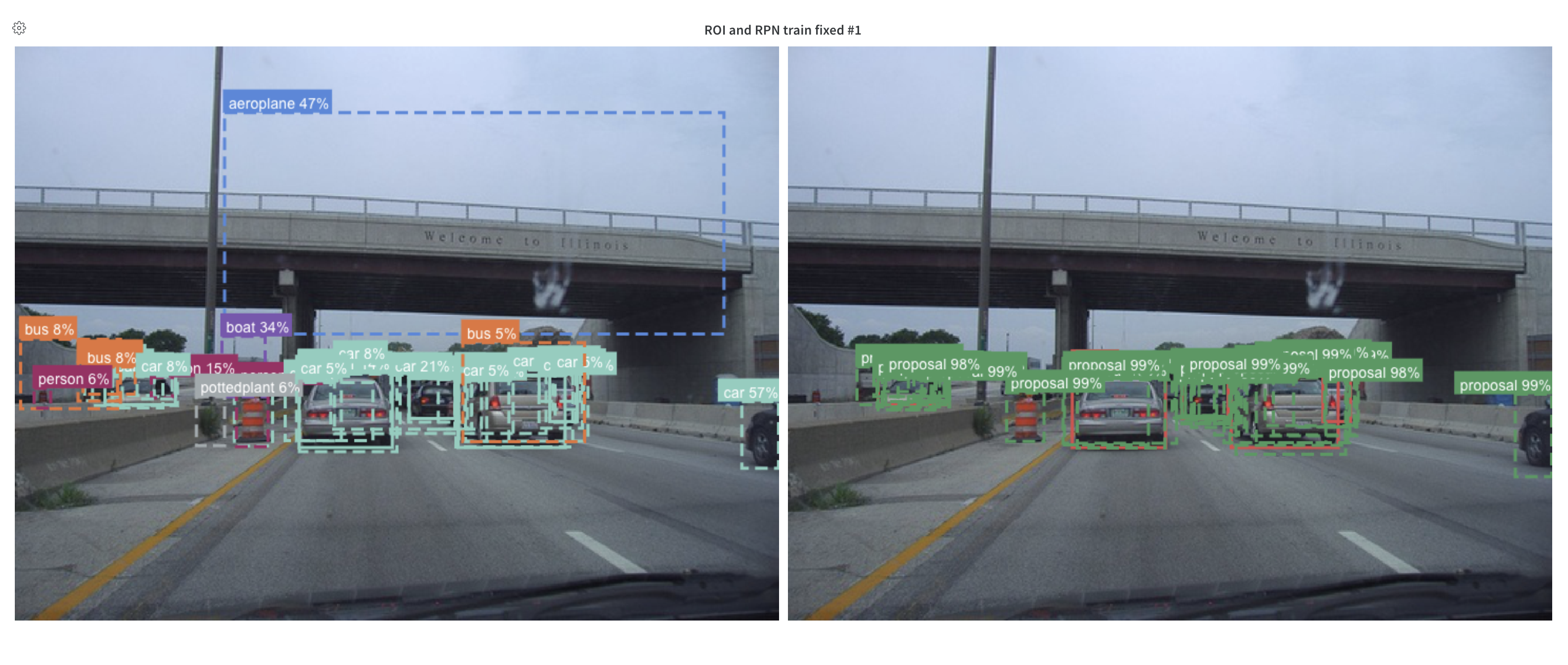

To monitor the training I used weights and biases (Wandb) web platform. Apart from seamless integration with Tensorboard that D2 uses (one can just use wandb.init(sync_tensorboard=True) to sync all the metrics), handy reports that can be used to compare experiments and share results, I really liked their visualizations of bounding boxes.

I log both RPN's and ROI heads' predictions each cfg.VIS_PERIOD (default=300) iterations on cfg.VIS_IMS_PER_GROUP (default=3) images, including up to cfg.VIS_MAX_PREDS_PER_IM (default=40) bboxes per image (both for RPN and ROIs).

I log examples for:

- a set of random training images with GT matching enabled (see link and link);

- a set of training images fixed at the beginning of the run, to monitor the model's progress specifically on them (without GT matching)

- a set of random testing images during inference each

cfg.TEST.EVAL_PERIODiterations.

Below I put some screenshots of example visualizations (note, iterations number in the header of the table is not precise, visualizations are provided just as an example).

| iter 1k | iter ~2k | iter ~3k |

|---|---|---|

|

|

|

Future features

- [ ] Test enabling mask RoI head and how CSD affects segmentation performance

- [ ] Test performance on COCO and LVIS datasets

- [x] Add support for splitting a dataset into labeled and unlabeled parts (e.g. using only 1/5% of data as labeled data)

Credits

I thank the authors of Unbiased Teacher and Few-Shot Object Detection (FsDet) for publicly releasing their code that assisted me with structuring this project.