A Scalable Inference Engine for Diffusion Transformers (DiTs) on multi-GPU Clusters

Table of Contents

- 🔥 Meet xDiT

- 📢 Updates

- 🎯 Supported DiTs

- 📈 Performance

- 🚀 QuickStart

- 🖼️ ComfyUI with xDiT

- ✨ xDiT's Arsenal

- 📚 Develop Guide

- 🚧 History and Looking for Contributions

- 📝 Cite Us

🔥 Meet xDiT

Diffusion Transformers (DiTs) are driving advancements in high-quality image and video generation. With the escalating input context length in DiTs, the computational demand of the Attention mechanism grows quadratically! Consequently, multi-GPU and multi-machine deployments are essential to meet the real-time requirements in online services.
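To make the quadratic growth concrete, here is a rough back-of-the-envelope sketch. It counts only the two attention matmuls (QK^T and scores times V), and the `head_dim` and `num_heads` values are illustrative assumptions rather than the settings of any particular DiT.

```python
def attention_matmul_flops(seq_len: int, head_dim: int, num_heads: int) -> int:
    """Approximate FLOPs of QK^T and (scores @ V) for one attention layer.

    Each matmul costs about 2 * seq_len^2 * head_dim FLOPs per head,
    counting a multiply-add as two operations.
    """
    per_head = 2 * (2 * seq_len * seq_len * head_dim)
    return num_heads * per_head


if __name__ == "__main__":
    # head_dim=64 and num_heads=16 are illustrative, not from a specific model.
    for seq_len in (1024, 2048, 4096):
        flops = attention_matmul_flops(seq_len, head_dim=64, num_heads=16)
        print(f"seq_len={seq_len:5d}  attention FLOPs ~ {flops:.3e}")
```

Doubling the sequence length quadruples this term, which is why long-context image and video generation quickly outgrows a single GPU.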

Parallel Inference

To meet the real-time demands of DiT applications, parallel inference is a must. xDiT is an inference engine designed for the parallel deployment of DiTs at large scale. xDiT provides a suite of efficient parallel approaches for Diffusion Models, as well as computation accelerations.

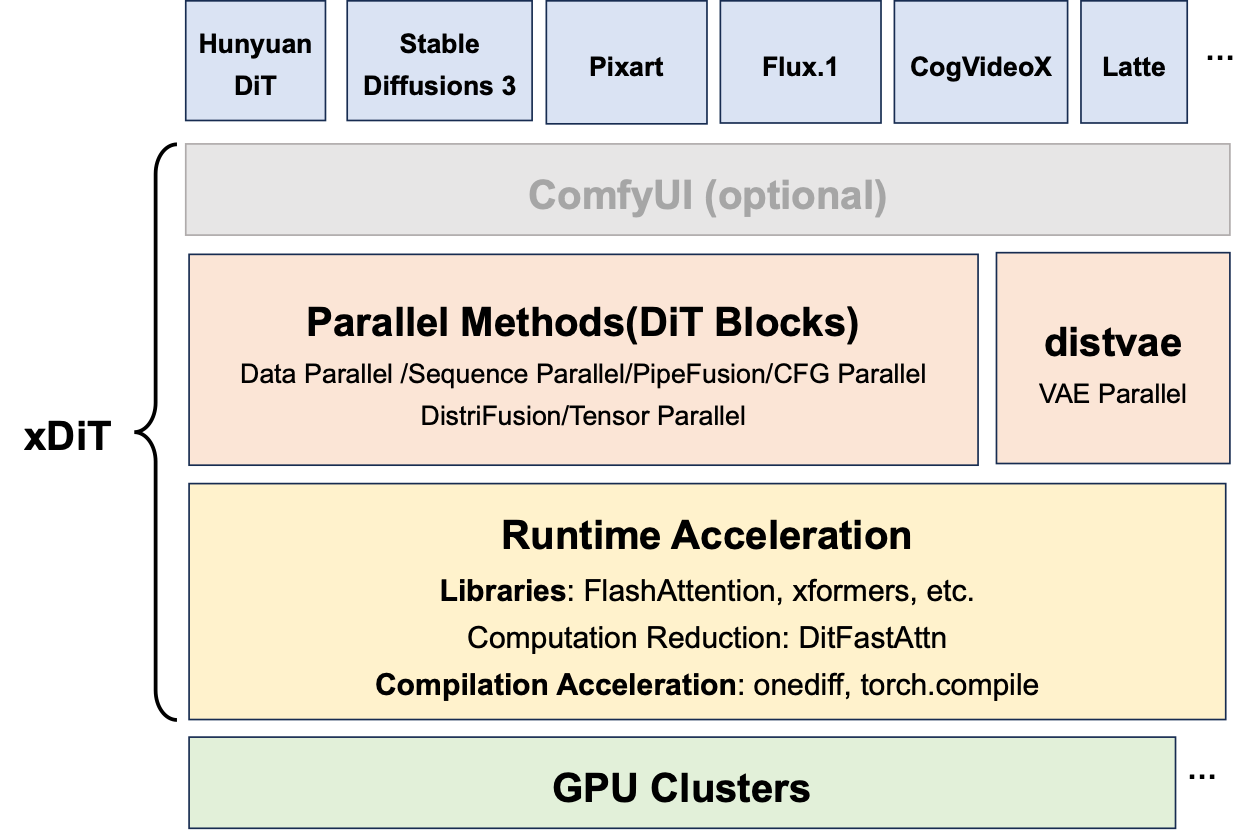

The overview of xDiT is shown as follows.

- Sequence Parallelism: USP is a unified sequence parallel approach, proposed by us, that combines DeepSpeed-Ulysses and Ring-Attention.

- PipeFusion: a sequence-level pipeline parallelism, similar to TeraPipe, that takes advantage of the input temporal redundancy of diffusion models.

- Data Parallel: processes multiple prompts, or generates multiple images from a single prompt, in parallel across images.

- CFG Parallel, also known as Split Batch: activates when using classifier-free guidance (CFG), with a constant parallelism of 2.

The four parallel methods in xDiT can be configured in a hybrid manner, optimizing communication patterns to best suit the underlying network hardware.
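As an illustration of why CFG Parallel has a fixed degree of 2, the sketch below runs the conditional and unconditional branches of classifier-free guidance concurrently and then combines them with the standard CFG formula. It is a toy under stated assumptions: `toy_denoiser` is a hypothetical stand-in for a DiT forward pass, and two threads stand in for two devices. It is not xDiT's implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def toy_denoiser(latent: List[float], conditioned: bool) -> List[float]:
    """Hypothetical stand-in for one DiT forward pass (not a real model)."""
    shift = 0.5 if conditioned else 0.0
    return [0.1 * x + shift for x in latent]


def cfg_step_split_batch(latent: List[float], guidance_scale: float) -> List[float]:
    """Evaluate both CFG branches concurrently, then combine the predictions."""
    # Two workers mirror the constant CFG parallel degree of 2.
    with ThreadPoolExecutor(max_workers=2) as pool:
        cond_future = pool.submit(toy_denoiser, latent, True)
        uncond_future = pool.submit(toy_denoiser, latent, False)
        cond, uncond = cond_future.result(), uncond_future.result()
    # Standard classifier-free guidance: uncond + scale * (cond - uncond).
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


if __name__ == "__main__":
    print(cfg_step_split_batch([1.0, 2.0], guidance_scale=2.0))
```

Because the two branches share no intermediate state, the only communication needed is exchanging the final predictions once per step.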

As shown in the following picture, xDiT offers a set of APIs to adapt DiT models in huggingface/diffusers to hybrid parallel implementation through simple wrappers. If the model you require is not available in the model zoo, developing it yourself is straightforward; please refer to our Dev Guide.

We have also implemented the following parallel strategies for reference:

- Tensor Parallelism

- DistriFusion

Computing Acceleration

These optimizations are orthogonal to parallelism and focus on accelerating single-GPU performance.

First, xDiT employs a series of kernel acceleration methods. In addition to utilizing well-known Attention optimization libraries, we leverage compilation acceleration technologies such as torch.compile and onediff.

Furthermore, xDiT incorporates optimization techniques from DiTFastAttn, which exploits computational redundancies between different steps of the Diffusion Model to accelerate inference on a single GPU.

📢 Updates

- 🎉November 20, 2024: xDiT supports CogVideoX-1.5 and achieved a 6.12x speedup compared to the implementation in diffusers!

- 🎉November 11, 2024: xDiT has been applied to mochi-1 and achieved a 3.54x speedup compared to the official open source implementation!

- 🎉October 10, 2024: xDiT applied DiTFastAttn to accelerate single GPU inference for Pixart Models!

- 🎉September 26, 2024: xDiT has been officially used by THUDM/CogVideo! The inference scripts are placed in parallel_inference/ at their repository.

- 🎉September 23, 2024: Support CogVideoX. The inference scripts are examples/cogvideox_example.py.

- 🎉August 26, 2024: We apply torch.compile and the onediff nexfort backend to accelerate GPU kernels.

- 🎉August 15, 2024: Support Hunyuan-DiT hybrid parallel version. The inference scripts are examples/hunyuandit_example.py.

- 🎉August 9, 2024: Support Latte sequence parallel version. The inference scripts are examples/latte_example.py.

- 🎉August 8, 2024: Support Flux sequence parallel version. The inference scripts are examples/flux_example.py.

- 🎉August 2, 2024: Support Stable Diffusion 3 hybrid parallel version. The inference scripts are examples/sd3_example.py.

- 🎉July 18, 2024: Support PixArt-Sigma and PixArt-Alpha. The inference scripts are examples/pixartsigma_example.py, examples/pixartalpha_example.py.

- 🎉July 17, 2024: Rename the project to xDiT. The project has evolved from a collection of parallel methods into a unified inference framework and now supports hybrid parallelism for DiTs.

- 🎉May 24, 2024: PipeFusion is publicly released. It supports PixArt-alpha scripts/pixart_example.py, DiT scripts/ditxl_example.py and SDXL scripts/sdxl_example.py. This version is currently in the legacy branch.

🎯 Supported DiTs

Supported by the legacy version only, including DistriFusion and Tensor Parallel as standalone parallel strategies:

🖼️ TACO-DiT: ComfyUI with xDiT

ComfyUI is the most popular web-based Diffusion Model interface optimized for workflows. It provides users with a UI platform for image generation and supports plugins such as LoRA, ControlNet, and IPAdaptor. However, because it was designed for single-GPU use, it struggles with the demands of today’s large DiTs, resulting in unacceptably high latency for models like Flux.1.

Using our commercial project TACO-DiT, a SaaS built on xDiT, we’ve implemented a multi-GPU parallel processing workflow within ComfyUI that addresses Flux.1’s performance challenges. Below is an example of using TACO-DiT to accelerate a Flux workflow with LoRA:

With TACO-DiT, you can significantly reduce the inference latency of your ComfyUI workflow and boost throughput with multiple GPUs. It is now compatible with multiple plugins, including ControlNet and LoRAs.

More features and details can be found in our Intro Video:

- [YouTube] TACO-DiT: Accelerating Your ComfyUI Generation Experience

- [Bilibili] TACO-DiT: 加速你的ComfyUI生成体验

Medium article is also available: Supercharge Your AIGC Experience: Leverage xDiT for Multiple GPU Parallel in ComfyUI Flux.1 Workflow.

Currently, if you need the parallel version of ComfyUI, please fill in this application form or contact xditproject@outlook.com.

📈 Performance

Mochi1

CogVideo

Flux.1

Latte

HunyuanDiT

SD3

Pixart

🚀 QuickStart

1. Install from pip

pip install xfuser

# Or optionally, with flash_attn

pip install "xfuser[flash_attn]"

2. Install from source

pip install -e .

# Or optionally, with flash_attn

pip install -e ".[flash_attn]"

Note that we use two self-maintained packages:

The flash_attn used for yunchang should be >= 2.6.0

3. Docker

We provide a docker image for developers to develop with xDiT. The docker image is thufeifeibear/xdit-dev.

4. Usage

We provide examples demonstrating how to run models with xDiT in the ./examples/ directory. You can easily modify the model type, model directory, and parallel options in examples/run.sh to run the DiT models that are already supported.

bash examples/run.sh

Click to see available options for the PixArt-alpha example

```bash
python ./examples/pixartalpha_example.py -h

...

xFuser Arguments

options:
  -h, --help            show this help message and exit

Model Options:
  --model MODEL         Name or path of the huggingface model to use.
  --download-dir DOWNLOAD_DIR
                        Directory to download and load the weights, default to the default cache dir of huggingface.
  --trust-remote-code   Trust remote code from huggingface.

Runtime Options:
  --warmup_steps WARMUP_STEPS
                        Warmup steps in generation.
  --use_parallel_vae
  --use_torch_compile   Enable torch.compile to accelerate inference in a single card
  --seed SEED           Random seed for operations.
  --output_type OUTPUT_TYPE
                        Output type of the pipeline.
  --enable_sequential_cpu_offload
                        Offloading the weights to the CPU.

Parallel Processing Options:
  --use_cfg_parallel    Use split batch in classifier_free_guidance. cfg_degree will be 2 if set
  --data_parallel_degree DATA_PARALLEL_DEGREE
                        Data parallel degree.
  --ulysses_degree ULYSSES_DEGREE
                        Ulysses sequence parallel degree. Used in attention layer.
  --ring_degree RING_DEGREE
                        Ring sequence parallel degree. Used in attention layer.
  --pipefusion_parallel_degree PIPEFUSION_PARALLEL_DEGREE
                        Pipefusion parallel degree. Indicates the number of pipeline stages.
  --num_pipeline_patch NUM_PIPELINE_PATCH
                        Number of patches the feature map should be segmented in pipefusion parallel.
  --attn_layer_num_for_pp [ATTN_LAYER_NUM_FOR_PP ...]
                        List representing the number of layers per stage of the pipeline in pipefusion parallel
  --tensor_parallel_degree TENSOR_PARALLEL_DEGREE
                        Tensor parallel degree.
  --split_scheme SPLIT_SCHEME
                        Split scheme for tensor parallel.

Input Options:
  --height HEIGHT       The height of image
  --width WIDTH         The width of image
  --prompt [PROMPT ...]
                        Prompt for the model.
  --no_use_resolution_binning
  --negative_prompt [NEGATIVE_PROMPT ...]
                        Negative prompt for the model.
  --num_inference_steps NUM_INFERENCE_STEPS
                        Number of inference steps.
```

Combining multiple parallelism techniques is essential for scaling efficiently. The product of all parallel degrees must match the number of devices. Note that use_cfg_parallel sets cfg_degree to 2. For instance, you can combine CFG, PipeFusion, and sequence parallelism with the command below to generate an image of a dog through hybrid parallelism. Here ulysses_degree * pipefusion_parallel_degree * cfg_degree (use_cfg_parallel) == number of devices == 8.
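The degree constraint can be sanity-checked before launching a job. The helper below is a hypothetical sketch, not part of xfuser: its parameter names mirror the command-line flags listed above, and it assumes tensor parallelism is left at its default so that only these five degrees enter the product.

```python
from math import prod
from typing import Dict


def check_parallel_config(
    world_size: int,
    ulysses_degree: int = 1,
    ring_degree: int = 1,
    pipefusion_parallel_degree: int = 1,
    data_parallel_degree: int = 1,
    use_cfg_parallel: bool = False,
) -> Dict[str, int]:
    """Return the degrees if their product equals world_size, else raise ValueError."""
    degrees = {
        "ulysses_degree": ulysses_degree,
        "ring_degree": ring_degree,
        "pipefusion_parallel_degree": pipefusion_parallel_degree,
        "data_parallel_degree": data_parallel_degree,
        # use_cfg_parallel implies a CFG degree of 2, otherwise 1.
        "cfg_degree": 2 if use_cfg_parallel else 1,
    }
    total = prod(degrees.values())
    if total != world_size:
        raise ValueError(
            f"product of parallel degrees is {total}, but {world_size} devices were requested"
        )
    return degrees


if __name__ == "__main__":
    # Mirrors an 8-GPU run with Ulysses=2, PipeFusion=2 and CFG parallel enabled.
    print(check_parallel_config(8, ulysses_degree=2,
                                pipefusion_parallel_degree=2,
                                use_cfg_parallel=True))
```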

torchrun --nproc_per_node=8 \
examples/pixartalpha_example.py \
--model models/PixArt-XL-2-1024-MS \
--pipefusion_parallel_degree 2 \
--ulysses_degree 2 \
--num_inference_steps 20 \
--warmup_steps 0 \
--prompt "A small dog" \
--use_cfg_parallel

⚠️ Applying PipeFusion requires setting warmup_steps (as does DistriFusion), typically to a small number compared with num_inference_steps.

The warmup step impacts the efficiency of PipeFusion as it cannot be executed in parallel, thus degrading to a serial execution.

We observed that a warmup of 0 had no effect on the PixArt model.

Users can tune this value according to their specific tasks.
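To get a feel for how warmup affects PipeFusion efficiency, the following is an idealized, Amdahl-style estimate. It assumes each warmup step costs as much as one single-device step, the remaining steps scale perfectly with the number of pipeline stages, and communication is free. Real speedups will be lower; the function name is hypothetical.

```python
def ideal_pipefusion_speedup(num_inference_steps: int,
                             warmup_steps: int,
                             num_stages: int) -> float:
    """Upper-bound speedup when warmup steps run serially and the rest run in parallel."""
    if not 0 <= warmup_steps <= num_inference_steps:
        raise ValueError("warmup_steps must be between 0 and num_inference_steps")
    parallel_steps = num_inference_steps - warmup_steps
    # Time is measured in units of one single-device denoising step.
    parallel_time = warmup_steps + parallel_steps / num_stages
    return num_inference_steps / parallel_time


if __name__ == "__main__":
    for warmup in (0, 1, 2, 4):
        speedup = ideal_pipefusion_speedup(20, warmup, num_stages=4)
        print(f"warmup_steps={warmup}: ideal speedup ~ {speedup:.2f}x")
```

Under these assumptions, even a few warmup steps noticeably cap the achievable speedup, which is why the value is worth tuning per task.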

5. Launch an HTTP Service

You can also launch an HTTP service to generate images with xDiT.

Launching a Text-to-Image HTTP Service

✨ xDiT's Arsenal

The remarkable performance of xDiT is attributed to two key facets. Firstly, it leverages parallelization techniques, pioneering innovations such as USP, PipeFusion, and hybrid parallelism, to scale DiTs inference to unprecedented scales.

Secondly, we employ compilation technologies to enhance execution on GPUs, integrating established solutions like torch.compile and onediff to optimize xDiT's performance.

1. Parallel Methods

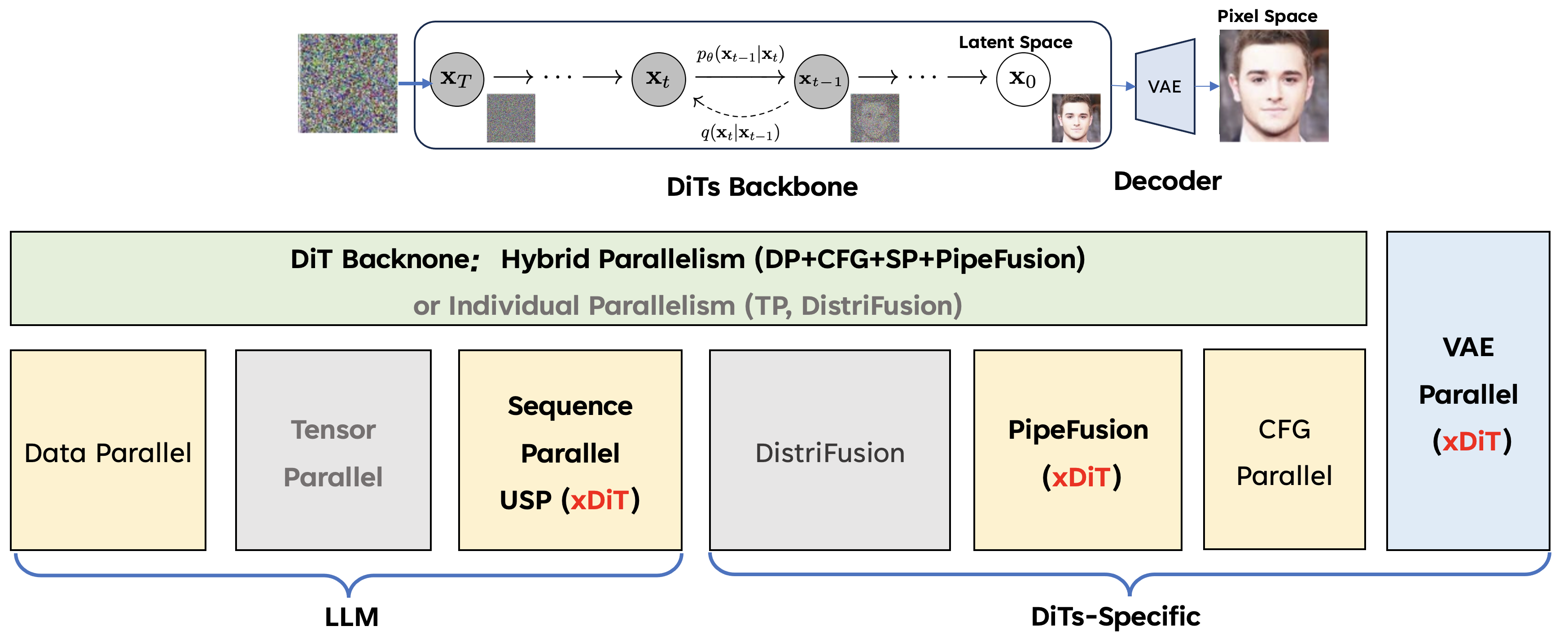

As illustrated in the accompanying images, xDiT offers a comprehensive set of parallelization techniques. For the DiT backbone, the foundational methods (Data, USP, PipeFusion, and CFG parallel) operate in a hybrid fashion. The other two methods, Tensor and DistriFusion parallel, function independently. For the VAE module, xDiT offers a parallel implementation, DistVAE, designed to prevent out-of-memory (OOM) issues. The (xDiT) label highlights the methods first proposed by us.

The communication and memory costs associated with the aforementioned intra-image parallelism, except for the CFG and DP (they are inter-image parallel), in DiTs are detailed in the table below. (* denotes that communication can be overlapped with computation.)

As we can see, PipeFusion and Sequence Parallel achieve the lowest communication cost on different scales and hardware configurations, making them suitable foundational components for a hybrid approach.

- 𝒑: Number of pixels

- 𝒉𝒔: Model hidden size

- 𝑳: Number of model layers

- 𝑷: Total model parameters

- 𝑵: Number of parallel devices

- 𝑴: Number of patch splits

- 𝑸𝑶: Query and Output parameter count

- 𝑲𝑽: KV Activation parameter count

- 𝑨 = 𝑸 = 𝑶 = 𝑲 = 𝑽: Equal parameters for Attention, Query, Output, Key, and Value

| | attn-KV | communication cost | param memory | activations memory | extra buff memory |
|---|---|---|---|---|---|
| Tensor Parallel | fresh | $4O(p \times hs)L$ | $\frac{1}{N}P$ | $\frac{2}{N}A = \frac{1}{N}QO$ | $\frac{2}{N}A = \frac{1}{N}KV$ |
| DistriFusion* | stale | $2O(p \times hs)L$ | $P$ | $\frac{2}{N}A = \frac{1}{N}QO$ | $2AL = (KV)L$ |
| Ring Sequence Parallel* | fresh | $2O(p \times hs)L$ | $P$ | $\frac{2}{N}A = \frac{1}{N}QO$ | $\frac{2}{N}A = \frac{1}{N}KV$ |
| Ulysses Sequence Parallel | fresh | $\frac{4}{N}O(p \times hs)L$ | $P$ | $\frac{2}{N}A = \frac{1}{N}QO$ | $\frac{2}{N}A = \frac{1}{N}KV$ |
| PipeFusion* | stale- | $2O(p \times hs)$ | $\frac{1}{N}P$ | $\frac{2}{M}A = \frac{1}{M}QO$ | $\frac{2L}{N}A = \frac{1}{N}(KV)L$ |
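The communication-cost column can be compared numerically by plugging values into the expressions from the table. The sketch below treats each $O(p \times hs)$ term as exactly $p \times hs$ elements, which is an assumption that drops constant factors, and the sample values for `p`, `hs`, `L`, and `N` are purely illustrative.

```python
from typing import Dict


def communication_cost(p: int, hs: int, L: int, N: int) -> Dict[str, float]:
    """Evaluate the table's communication-cost expressions in units of elements."""
    base = p * hs  # stands in for O(p x hs), constant factors dropped
    return {
        "Tensor Parallel": 4 * base * L,
        "DistriFusion": 2 * base * L,
        "Ring Sequence Parallel": 2 * base * L,
        "Ulysses Sequence Parallel": 4 * base * L / N,
        "PipeFusion": 2 * base,
    }


if __name__ == "__main__":
    # Illustrative values only: 4096 latent tokens, hidden size 1152, 28 layers, 8 devices.
    costs = communication_cost(p=4096, hs=1152, L=28, N=8)
    for name, cost in sorted(costs.items(), key=lambda item: item[1]):
        print(f"{name:26s} {cost:.3e}")
```

Note that PipeFusion's expression has no factor of $L$, and Ulysses is the only one that shrinks with $N$, which is consistent with the observation that these two are the cheapest in different regimes.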

1.1. PipeFusion

PipeFusion: Displaced Patch Pipeline Parallelism for Diffusion Models
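As a rough intuition for patch-level pipelining, the sketch below prints a generic pipeline schedule in which each stage works on a different patch at every tick. It is a simplified illustration only: it models a single pass of M patches through N stages and does not model how PipeFusion reuses stale activations from the previous diffusion step. The function name is hypothetical.

```python
from typing import List, Optional


def pipeline_schedule(num_stages: int, num_patches: int) -> List[List[Optional[int]]]:
    """Return, for each tick, the patch index each stage processes (None if idle)."""
    ticks = []
    for tick in range(num_stages + num_patches - 1):
        row = []
        for stage in range(num_stages):
            patch = tick - stage  # a patch reaches stage s one tick after stage s - 1
            row.append(patch if 0 <= patch < num_patches else None)
        ticks.append(row)
    return ticks


if __name__ == "__main__":
    for tick, row in enumerate(pipeline_schedule(num_stages=2, num_patches=4)):
        cells = ["idle" if patch is None else f"patch {patch}" for patch in row]
        print(f"tick {tick}: " + " | ".join(cells))
```

With more patches than stages, most ticks keep every stage busy, and only the first and last few ticks contain idle slots.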

1.2. USP: Unified Sequence Parallelism

USP: A Unified Sequence Parallelism Approach for Long Context Generative AI
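When combining Ulysses and Ring degrees, it helps to check how the sequence and attention heads are divided. The sketch below assumes the total sequence parallel degree is `ulysses_degree * ring_degree`, that the Ulysses all-to-all trades a sequence shard for a head shard (so the head count must be divisible by `ulysses_degree`), and, for simplicity, that the sequence length divides evenly. The function is hypothetical and not part of xfuser.

```python
from typing import Dict


def usp_shard_shapes(seq_len: int, num_heads: int,
                     ulysses_degree: int, ring_degree: int) -> Dict[str, int]:
    """Compute per-device token and head counts for a Ulysses x Ring layout."""
    sp_degree = ulysses_degree * ring_degree
    if seq_len % sp_degree != 0:
        raise ValueError("seq_len must be divisible by ulysses_degree * ring_degree")
    if num_heads % ulysses_degree != 0:
        raise ValueError("num_heads must be divisible by ulysses_degree")
    return {
        "sp_degree": sp_degree,
        # Outside attention, every device holds an equal slice of the sequence.
        "tokens_per_device": seq_len // sp_degree,
        # After the Ulysses all-to-all, a device holds more tokens but fewer heads.
        "tokens_inside_attention": seq_len // ring_degree,
        "heads_inside_attention": num_heads // ulysses_degree,
    }


if __name__ == "__main__":
    print(usp_shard_shapes(seq_len=4096, num_heads=16, ulysses_degree=2, ring_degree=4))
```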

1.3. Hybrid Parallel

1.4. CFG Parallel

1.5. Parallel VAE

Single GPU Acceleration

Compilation Acceleration

We utilize two compilation acceleration techniques, torch.compile and onediff, to enhance runtime speed on GPUs. These compilation accelerations are used in conjunction with parallelization methods.

We employ the nexfort backend of onediff. Please install it before use:

pip install onediff

pip install -U nexfort

For usage instructions, refer to examples/run.sh. Simply append --use_torch_compile or --use_onediff to your command. Note that these options are mutually exclusive, and their performance varies across different scenarios.
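One way to express the mutual exclusivity of the two flags is with an argparse exclusive group. The sketch below is only an illustration of that constraint; it is not how xfuser parses its arguments, and `select_backend` and the `"eager"` label are hypothetical names.

```python
import argparse
from typing import List


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that accepts at most one compilation flag."""
    parser = argparse.ArgumentParser(description="Toy parser for compilation flags")
    group = parser.add_mutually_exclusive_group()
    group.add_argument("--use_torch_compile", action="store_true")
    group.add_argument("--use_onediff", action="store_true")
    return parser


def select_backend(argv: List[str]) -> str:
    """Map the parsed flags to a backend label (labels are illustrative)."""
    args = build_parser().parse_args(argv)
    if args.use_torch_compile:
        return "torch.compile"
    if args.use_onediff:
        return "onediff"
    return "eager"


if __name__ == "__main__":
    print(select_backend(["--use_torch_compile"]))
```

Passing both flags makes argparse exit with a usage error, which surfaces the conflict before any model is loaded.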

DiTFastAttn

xDiT also provides DiTFastAttn for single-GPU acceleration. It reduces the computation cost of attention layers by leveraging redundancies between different steps of the Diffusion Model.

DiTFastAttn: Attention Compression for Diffusion Transformer Models
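The idea of exploiting redundancy between steps can be illustrated with a toy cache that reuses the previous step's attention output on selected steps. This is a deliberately simplified sketch, not DiTFastAttn's algorithm: `share_steps` is a hypothetical, hand-picked set, whereas the real method decides what to compress through its own procedure.

```python
from typing import Callable, List, Set, Tuple


def run_with_step_sharing(
    num_steps: int,
    share_steps: Set[int],
    attention_fn: Callable[[int], float],
) -> Tuple[List[float], int]:
    """Run num_steps, reusing the cached attention output on steps in share_steps.

    Returns the per-step outputs and the number of times attention was computed.
    """
    cached = None
    calls = 0
    outputs = []
    for step in range(num_steps):
        if step in share_steps and cached is not None:
            out = cached  # reuse the most recently computed output
        else:
            out = attention_fn(step)  # compute attention and refresh the cache
            calls += 1
            cached = out
        outputs.append(out)
    return outputs, calls


if __name__ == "__main__":
    outputs, calls = run_with_step_sharing(6, {1, 3, 5}, lambda step: step * 10.0)
    print(outputs, f"attention computed {calls} times out of 6 steps")
```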

📚 Develop Guide

The implementation and design of the xDiT framework

🚧 History and Looking for Contributions

We conducted a major upgrade of this project in August 2024.

The latest APIs are located in the xfuser/ directory and support hybrid parallelism. They offer clearer and more structured code but currently support fewer models.

The legacy APIs are in the legacy branch and are limited to a single parallel method at a time. They support a richer set of parallel methods, including PipeFusion, Sequence Parallel, DistriFusion, and Tensor Parallel. CFG Parallel can be combined with PipeFusion but not with other parallel methods.

For models not yet supported by the latest APIs, you can run the examples in the scripts/ directory under branch legacy. If you wish to develop new features on a model or require hybrid parallelism, stay tuned for further project updates.

We also welcome developers to join and contribute more features and models to the project. Tell us which model you need in xDiT in discussions.

📝 Cite Us

xDiT: an Inference Engine for Diffusion Transformers (DiTs) with Massive Parallelism

@misc{fang2024xditinferenceenginediffusion,

title={xDiT: an Inference Engine for Diffusion Transformers (DiTs) with Massive Parallelism},

author={Jiarui Fang and Jinzhe Pan and Xibo Sun and Aoyu Li and Jiannan Wang},

year={2024},

eprint={2411.01738},

archivePrefix={arXiv},

primaryClass={cs.DC},

url={https://arxiv.org/abs/2411.01738},

}

PipeFusion: Patch-level Pipeline Parallelism for Diffusion Transformers Inference

@misc{fang2024pipefusionpatchlevelpipelineparallelism,

title={PipeFusion: Patch-level Pipeline Parallelism for Diffusion Transformers Inference},

author={Jiarui Fang and Jinzhe Pan and Jiannan Wang and Aoyu Li and Xibo Sun},

year={2024},

eprint={2405.14430},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2405.14430},

}

USP: A Unified Sequence Parallelism Approach for Long Context Generative AI

@misc{fang2024uspunifiedsequenceparallelism,

title={USP: A Unified Sequence Parallelism Approach for Long Context Generative AI},

author={Jiarui Fang and Shangchun Zhao},

year={2024},

eprint={2405.07719},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2405.07719},

}

Unveiling Redundancy in Diffusion Transformers (DiTs): A Systematic Study

@misc{sun2024unveilingredundancydiffusiontransformers,

title={Unveiling Redundancy in Diffusion Transformers (DiTs): A Systematic Study},

author={Xibo Sun and Jiarui Fang and Aoyu Li and Jinzhe Pan},

year={2024},

eprint={2411.13588},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2411.13588},

}