Reinforcement Learning-based mobile robot crowd navigation

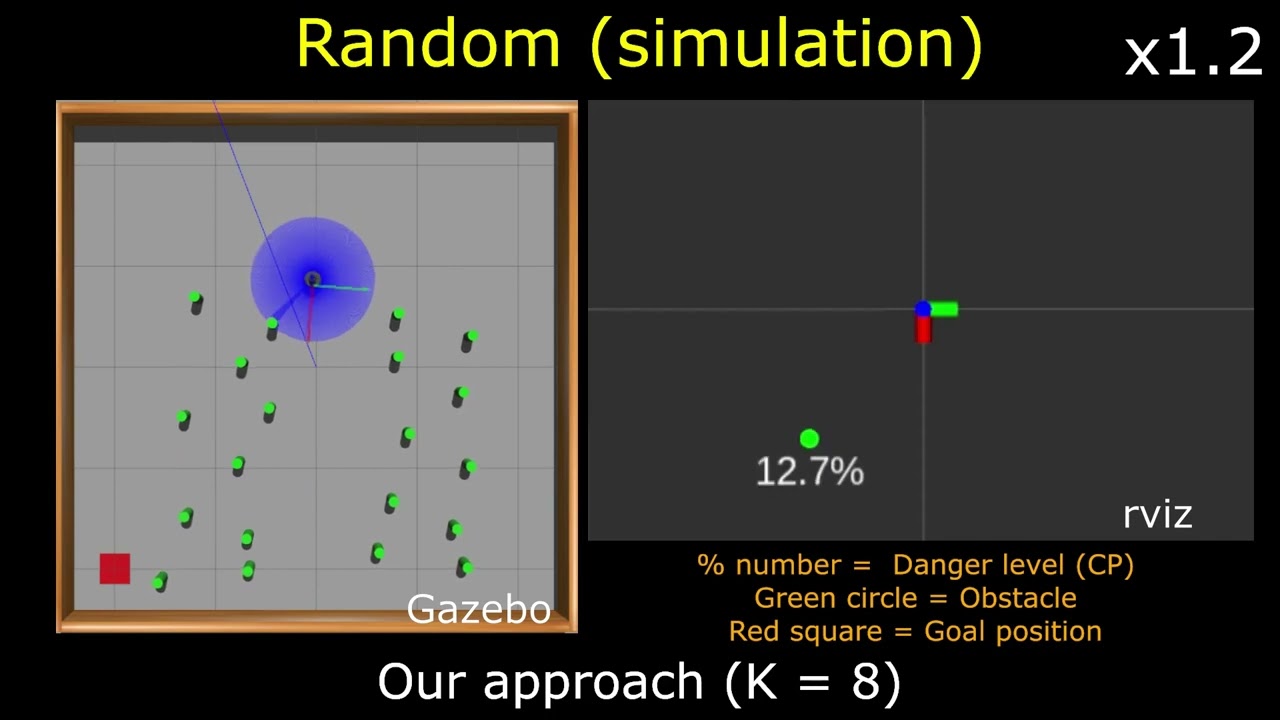

This repository contains the code to replicate my research work titled "Deep Reinforcement Learning-Based Mapless Crowd Navigation with Perceived Risk of the Moving Crowd for Mobile Robots".

In addition, it provides a framework to train and test six different algorithms: TD3, DDPG, SAC, Q-Learning, SARSA, and DQN. The initial results of this work were presented at the 2nd Workshop Social Robot Navigation: Advances and Evaluation at IROS 2023. The Turtlebot3 Burger mobile robot platform was used to train and test these algorithms. Unlike my other repository, this one has no dependency on OpenAI, so it is much simpler to get the code running in your own workspace.

If you have found this repository useful or have used this repository in any of your scientific work, please consider citing my work using this BibTeX Citation. A full mobile robot navigation demonstration video has been uploaded on YouTube.

Table of contents

- Installation

- Repository contents

- Getting started

- Hardware and software information

- BibTeX Citation

- Acknowledgments

Installation

- First, clone the following packages (turtlebot3, turtlebot3_gazebo) and their dependencies into your ROS workspace.

- Then, clone this repository and move the contents of turtlebot3_simulations and turtlebot3_description into the corresponding installed packages.

- Finally, compile the ROS workspace with `catkin_make` and source it with `source devel/setup.bash`. The compilation should return no errors if all the dependencies are met.
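Before compiling, it can help to confirm that the expected packages are actually present in the workspace. The following is a minimal sketch, not part of this repository: the helper name `missing_packages` and the `~/catkin_ws/src` path are assumptions for illustration, and it only checks for a `package.xml` inside a folder named after each package.

```python
import os


def missing_packages(src_dir, required):
    """Return the names in `required` that have no package.xml under src_dir."""
    found = set()
    for root, _dirs, files in os.walk(src_dir):
        if "package.xml" in files:
            # Assumption: the folder name matches the package name.
            found.add(os.path.basename(root))
    return [name for name in required if name not in found]


if __name__ == "__main__":
    # Hypothetical workspace location; adjust to your own setup.
    src = os.path.expanduser("~/catkin_ws/src")
    required = ["turtlebot3_description", "turtlebot3_gazebo", "turtlebot3_rl_sim"]
    missing = missing_packages(src, required)
    if missing:
        print("Missing packages: " + ", ".join(missing))
    else:
        print("All expected packages found; run catkin_make next.")
```

An empty result only means the folders exist; `catkin_make` remains the real check that all dependencies are met.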

Repository contents

turtlebot3_rl_sim - This folder contains the files for running our version of TD3 (with Risk Perception of Crowd), as well as the other algorithms (DDPG, TD3, DQN, Q-Learning, and SARSA), for training and testing.

turtlebot3_description - This folder contains core files to run Turtlebot3 in the Gazebo simulator with the same settings used in our work.

turtlebot3_simulations - This folder contains the Gazebo simulation launch files, models, and worlds.

Getting Started

Start ROSCORE

- Run `roscore` in your terminal.

Launch Gazebo world

- Run `roslaunch turtlebot3_gazebo turtlebot3_crowd_dense.launch` in your terminal.

Place your robot in the Gazebo world

- Run `roslaunch turtlebot3_gazebo put_robot_in_world_training.launch` in your terminal.

Simulating crowd behavior

- Run `rosrun turtlebot3_rl_sim simulate_crowd.py` in your terminal.

Start training with TD3

- Run `roslaunch turtlebot3_rl_sim start_td3_training.launch` in your terminal.

Start testing with TD3

Firstly, set the following parameters in the start_td3_training.py script:

    resume_epoch = 1500  # e.g. 1500 means it will use the model saved at episode 1500
    continue_execution = True
    learning = False
    k_obstacle_count = 8  # K = 8 implementation
    utils.record_data(data, result_outdir, "td3_training_trajectory_test")  # <-- Change the string name accordingly, to avoid overwriting the training results file

Secondly, edit the turtlebot3_world.yaml file to reflect the following settings:

    min_scan_range: 0.0  # To get reliable social and ego score readings, depending on evaluation metrics
    desired_pose:
      x: -2.0
      y: 2.0
      z: 0.0
    starting_pose:
      x: 1.0
      y: 0.0
      z: 0.0

Thirdly, edit the environment_stage_1_nobonus.py script to reflect the following settings:

    self.k_obstacle_count = 8  # K = 8 implementation

- Launch the Gazebo world:

  - Run `roslaunch turtlebot3_gazebo turtlebot3_obstacle_20.launch` in your terminal.

- Place your robot in the Gazebo world:

  - Run `roslaunch turtlebot3_gazebo put_robot_in_world_testing.launch` in your terminal.

- Simulate the test crowd behaviors (OPTIONS: {crossing, towards, ahead, random}):

  - Run `rosrun turtlebot3_rl_sim simulate_OPTIONS_20.py` in your terminal.

- Start the testing script:

  - Run `roslaunch turtlebot3_rl_sim start_td3_training.launch` in your terminal.

Real-world testing (deployment)

- Physical deployment requires the Turtlebot3 itself and a remote PC to run.

- On the Turtlebot3:

  - Run `roslaunch turtlebot3_bringup turtlebot3_robot.launch` in your terminal.

- On the remote PC:

  - Run `roscore` in your terminal.

  - Run `roslaunch turtlebot3_bringup turtlebot3_remote.launch` in your terminal.

  - Run `roslaunch turtlebot3_rl_sim start_td3_real_world_test.launch` in your terminal.
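The deployment steps assume the robot and the remote PC can reach the same ROS master. In a typical ROS 1 multi-machine setup this is done through the `ROS_MASTER_URI` and `ROS_HOSTNAME` environment variables. This README does not describe the network configuration, so the following is only a sketch: the IP addresses and the helper name `ros_network_env` are hypothetical.

```python
def ros_network_env(master_ip, own_ip, port=11311):
    """Build the ROS 1 network variables for one machine.

    master_ip: address of the machine running roscore (the remote PC here).
    own_ip: address of the machine these variables are for.
    """
    return {
        "ROS_MASTER_URI": "http://{}:{}".format(master_ip, port),
        "ROS_HOSTNAME": own_ip,
    }


if __name__ == "__main__":
    # Hypothetical addresses; replace with those of your own network.
    remote_pc_ip = "192.168.0.10"
    turtlebot_ip = "192.168.0.20"
    for machine, own_ip in (("remote PC", remote_pc_ip), ("Turtlebot3", turtlebot_ip)):
        print("# " + machine)
        for name, value in sorted(ros_network_env(remote_pc_ip, own_ip).items()):
            print("export {}={}".format(name, value))
```

Both machines point `ROS_MASTER_URI` at the remote PC, since that is where `roscore` runs in the steps above, while `ROS_HOSTNAME` is each machine's own address.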

Hardware and Software Information

Software

- OS: Ubuntu 18.04

- ROS version: Melodic

- Python version: 2.7 (the code is Python 3 compatible, so porting to a newer version of ROS/Ubuntu should be straightforward)

- Gazebo version: 9.19

- CUDA version: 10.0

- CuDNN version: 7

Computer Specifications

- CPU: Intel i7 9700

- GPU: Nvidia RTX 2070

Mobile Robot Platform

- Turtlebot3 Burger

BibTeX Citation

If you have used this repository in any of your scientific work, please consider citing my work (submitted to ICRA2024):

    @misc{anas2023deep,
      title={Deep Reinforcement Learning-Based Mapless Crowd Navigation with Perceived Risk of the Moving Crowd for Mobile Robots},
      author={Hafiq Anas and Ong Wee Hong and Owais Ahmed Malik},
      year={2023},
      eprint={2304.03593},
      archivePrefix={arXiv},
      primaryClass={cs.RO}
    }

Acknowledgments

- Thank you Robolab@UBD for lending the Turtlebot3 robot platform and lab facilities.