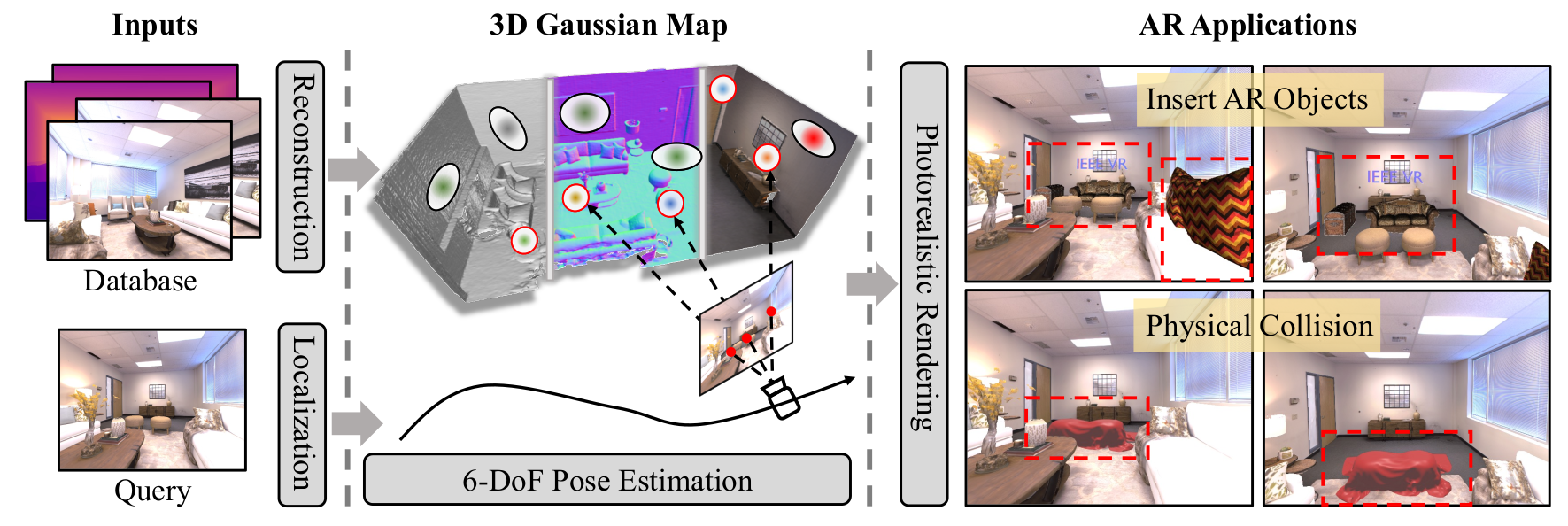

SplatLoc: 3D Gaussian Splatting-based Visual Localization for Augmented Reality

Hongjia Zhai · Xiyu Zhang · Boming Zhao · Hai Li · Yijia He · Zhaopeng Cui · Hujun Bao · Guofeng Zhang

Paper | Project Page

We present SplatLoc, an efficient and novel visual localization approach designed for Augmented Reality (AR). As illustrated in the figure, our system utilizes monocular RGB-D frames to reconstruct the scene using 3D Gaussian primitives. Additionally, with our learned unbiased 3D descriptor fields, we achieve 6-DoF camera pose estimation through precise 2D-3D feature matching. We demonstrate the potential AR applications of our system, such as virtual content insertion and physical collision simulation. We highlight virtual objects with red boxes.

TODO Lists

- [x] provide retrieval file, feature ply path, score map path

- [x] code for training and evaluation

- [ ] code for retrieval and 2D feature map

Env setup

Clone the code:

git clone https://github.com/zhaihongjia/SplatLoc --recursive

cd SplatLoc

If fetching the submodules failed, clone them manually:

git clone https://github.com/zhaihongjia/SplatLoc

cd SplatLoc/submodules

git clone --recursive https://github.com/cvg/Hierarchical-Localization/

git clone https://gitlab.inria.fr/bkerbl/simple-knn.git

git clone https://github.com/dendenxu/diff-gaussian-rasterization.git

Set up the environment:

conda env create -f environment.yml

conda activate splatloc

pip install git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

Set NUM_CHANNELS to 4 in submodules/diff-gaussian-rasterization/cuda_rasterizer/config.h.
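If you prefer to script the NUM_CHANNELS change rather than edit the header by hand, the following Python sketch shows one way to do it. It assumes the header declares the value as `#define NUM_CHANNELS <integer>`, which has not been verified against the submodule; the helper names `set_num_channels` and `patch_file` and the sample header text are hypothetical.

```python
import re
from pathlib import Path

# Assumption: config.h declares the channel count as "#define NUM_CHANNELS <integer>".
_DEFINE = re.compile(r"^([ \t]*#define[ \t]+NUM_CHANNELS[ \t]+)\d+", re.MULTILINE)


def set_num_channels(header_text: str, value: int = 4) -> str:
    """Return header_text with the NUM_CHANNELS define set to `value`."""
    patched, count = _DEFINE.subn(lambda m: f"{m.group(1)}{value}", header_text)
    if count != 1:
        # Refuse to guess if the define is missing or appears more than once.
        raise ValueError(f"expected exactly one NUM_CHANNELS define, found {count}")
    return patched


def patch_file(path: str, value: int = 4) -> None:
    """Rewrite the header at `path` in place."""
    header = Path(path)
    header.write_text(set_num_channels(header.read_text(), value))


# Hypothetical sample header text, used only to illustrate the substitution.
sample = "#pragma once\n#define NUM_CHANNELS 3 // Default 3, RGB\n#define BLOCK_X 16\n"
patched_sample = set_num_channels(sample)
```

From the repository root, `patch_file("submodules/diff-gaussian-rasterization/cuda_rasterizer/config.h")` would apply the change. The function raises an error instead of writing anything if the define is not found exactly once, so a header with a different layout is left untouched.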

Then run the following commands:

cd submodules/diff-gaussian-rasterization

pip install -e .

Dataset

Download the Replica dataset (the version provided by Semantic-NeRF) and the 12-Scenes dataset.

Generated retrieval results and SuperPoint feature cloud

We currently provide our generated data on Google Drive.

Download the data and set generated_folder in the configs to its path. The preprocessing code (retrieval and feature volume reconstruction) will be released later.

Train 3D Gaussian and feature decoder

Before training, set the following parameters in the xxxx.yaml file in the configs folder:

generated_folder: path to the generated data we provide

save_dir: where to save your results

dataset_path: path to your dataset

Run the following scripts to train the models and evaluate performance:

sh replica.sh

sh scenes12.sh

For more details, the commands below train and test on a single scene of the Replica dataset.

# train the 3D feature decoder

CUDA_VISIBLE_DEVICES=1 python train_decoder.py --config ./configs/replica_nerf/$scene.yaml

# train the 3D gaussian model

CUDA_VISIBLE_DEVICES=1 python train_gaussians.py --config ./configs/replica_nerf/$scene.yaml

# eval localization and rendering

CUDA_VISIBLE_DEVICES=1 python test.py --config ./configs/replica_nerf/$scene.yaml --eval_pose --eval_rendering

# eval 3D landmark selection (with 5000 landmarks)

CUDA_VISIBLE_DEVICES=1 python test.py --config ./configs/replica_nerf/$scene.yaml --eval_selection --landmark_num 5000

The saved PLY files and evaluation results are stored in the save_dir specified in base_config.yaml.

- point_cloud: saved 3D Gaussian model

- train_feat: saved 3D decoder

- eval_rendering.txt: rendering performance

- eval_pose.txt: localization performance

- eval_selection_xx.txt: localization performance of xx selected 3D landmarks
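To repeat the four per-scene steps shown earlier (decoder training, Gaussian training, pose and rendering evaluation, landmark selection) for several scenes, the commands can be assembled in Python. This is a minimal sketch, not part of the repository: `build_commands` and `run_scene` are hypothetical helpers, and the scene name in the comment is an assumption about how the config files are named. The script names and flags are the ones listed in this README.

```python
import os
import subprocess


def build_commands(scene, config_dir="./configs/replica_nerf", landmark_num=5000):
    """Return the four per-scene commands as argument lists, in execution order."""
    config = f"{config_dir}/{scene}.yaml"
    return [
        # train the 3D feature decoder
        ["python", "train_decoder.py", "--config", config],
        # train the 3D Gaussian model
        ["python", "train_gaussians.py", "--config", config],
        # evaluate localization and rendering
        ["python", "test.py", "--config", config, "--eval_pose", "--eval_rendering"],
        # evaluate 3D landmark selection
        ["python", "test.py", "--config", config,
         "--eval_selection", "--landmark_num", str(landmark_num)],
    ]


def run_scene(scene, gpu="1"):
    """Run all steps for one scene on the given GPU, stopping at the first failure."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)
    for cmd in build_commands(scene):
        subprocess.run(cmd, check=True, env=env)


# Example (assumed scene name): run_scene("room_0")
```

Keeping command construction separate from execution makes it possible to print or inspect the commands before any GPU time is spent.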

Acknowledgement

This work builds on several open-source projects. We extend our gratitude to their authors.

- 3D Gaussian Splatting

- MonoGS

- Differential Gaussian Rasterization provided by Zhen Xu

- Hierarchical-Localization

- SceneLandmarkLocalization

Citation

If you find this code or work useful in your own research, please consider citing the following:

@article{splatloc,

author={Zhai, Hongjia and Zhang, Xiyu and Zhao, Boming and Li, Hai and He, Yijia and Cui, Zhaopeng and Bao, Hujun and Zhang, Guofeng},

journal={arXiv preprint arXiv:2409.14067},

title={{SplatLoc}: 3D Gaussian Splatting-based Visual Localization for Augmented Reality},

year={2024},

}