
Data-Juicer: A One-Stop Data Processing System for Large Language Models

Data-Juicer is a one-stop multimodal data processing system to make data higher-quality, juicier, and more digestible for LLMs.

Data-Juicer (including DJ-SORA) is being actively updated and maintained. We will periodically enhance existing features and add more features, data recipes, and datasets. We welcome you to join us in promoting LLM data development and research!

If you find Data-Juicer useful for your research or development, please kindly cite our work. You are welcome to join our Slack channel, DingDing group, or WeChat group (scan the group QR code with WeChat) for discussion.

News

- [2024-03-07] We released Data-Juicer v0.2.0! In this new version, we support more features for multimodal data (now including video), and introduce DJ-SORA to provide open, large-scale, high-quality datasets for SORA-like models.
- [2024-02-20] We actively maintain an awesome list of LLM-Data; you are welcome to visit and contribute!
- [2024-02-05] Our paper has been accepted by the SIGMOD'24 industrial track!
- [2024-01-10] Discover new horizons in "Data Mixture": our second data-centric LLM competition has kicked off! Please visit the competition's official website for more information.
- [2024-01-05] We released Data-Juicer v0.1.3! In this new version, we support more Python versions (3.8-3.10) and multimodal dataset converting/processing (including texts, images, and audio; more modalities will be supported in the future). In addition, our paper has been updated to v3.
- [2023-10-13] Our first data-centric LLM competition begins! Please visit the competition's official websites, FT-Data Ranker (1B Track, 7B Track), for more information.
- [2023-10-08] We updated our paper to the 2nd version and released the corresponding version 0.1.2 of Data-Juicer!


Features

-

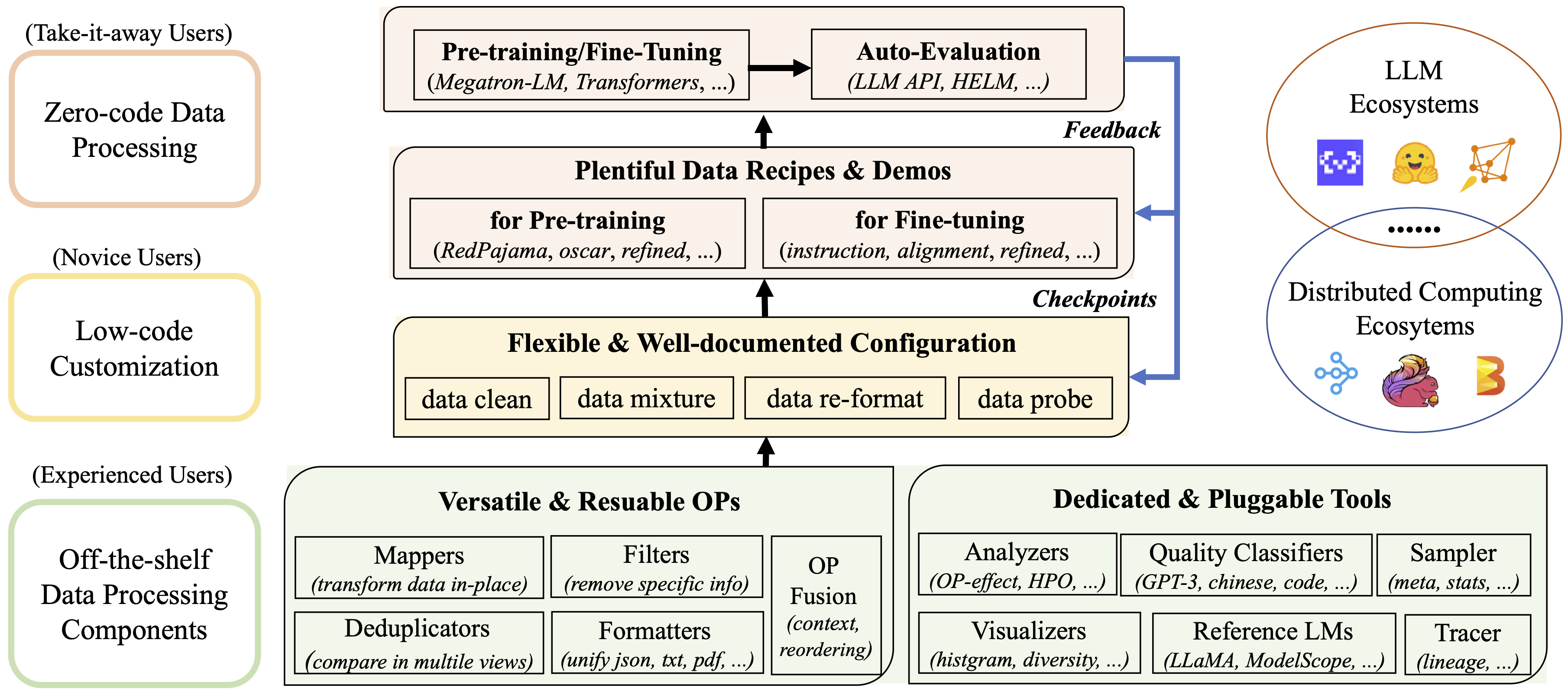

Systematic & Reusable: Empowering users with a systematic library of 80+ core OPs, 20+ reusable config recipes, and 20+ feature-rich dedicated toolkits, designed to function independently of specific LLM datasets and processing pipelines.

-

Data-in-the-loop: Allowing detailed data analyses with an automated report generation feature for a deeper understanding of your dataset. Coupled with multi-dimension automatic evaluation capabilities, it supports a timely feedback loop at multiple stages in the LLM development process.

-

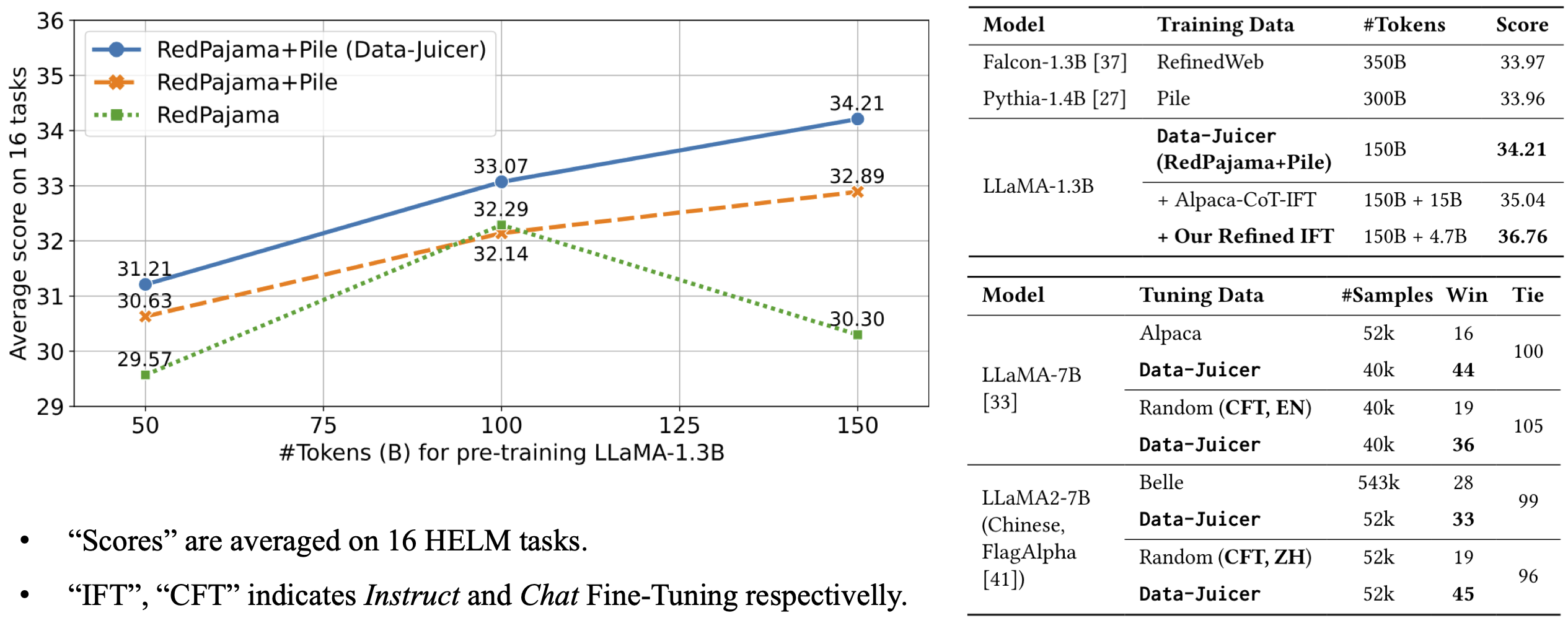

Comprehensive Data Processing Recipes: Offering tens of pre-built data processing recipes for pre-training, fine-tuning, en, zh, and more scenarios. Validated on reference LLaMA and LLaVA models.

-

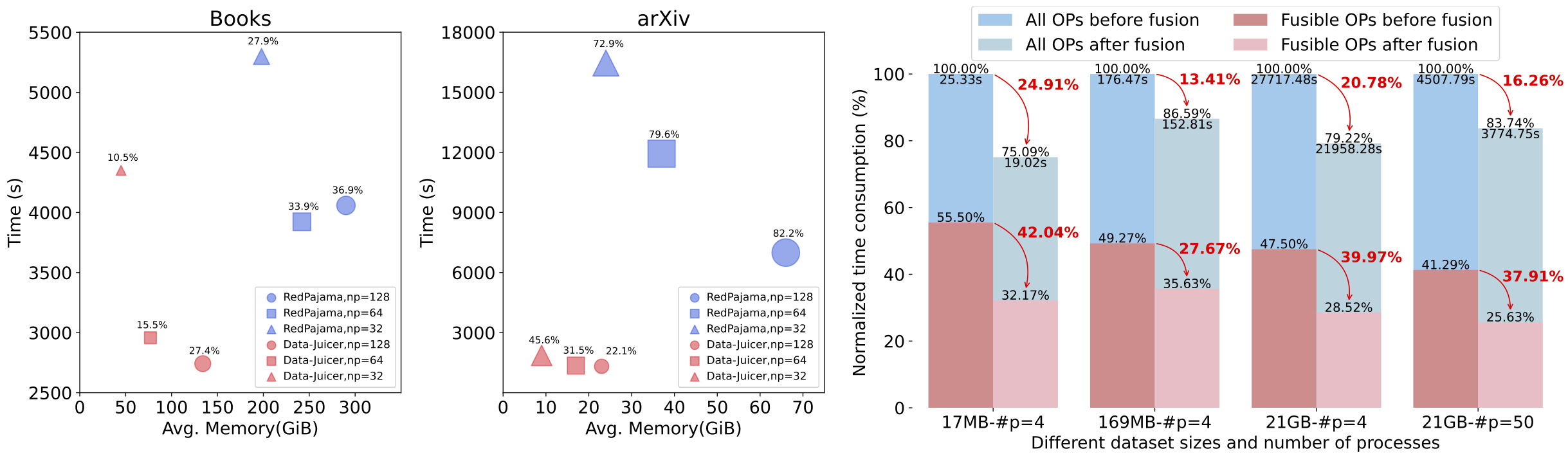

Enhanced Efficiency: Providing a speedy data processing pipeline requiring less memory and CPU usage, optimized for maximum productivity.

-

Flexible & Extensible: Accommodating most types of data formats (e.g., jsonl, parquet, csv, ...) and allowing flexible combinations of OPs. Feel free to implement your own OPs for customizable data processing.

-

User-Friendly Experience: Designed for simplicity, with comprehensive documentation, easy start guides and demo configs, and intuitive configuration with simple adding/removing OPs from existing configs.

Documentation Index

- Overview

- Operator Zoo

- Configs

- Developer Guide

- "Bad" Data Exhibition

- Dedicated Toolkits

- Third-parties (LLM Ecosystems)

- API references

- Awesome LLM-Data

- DJ-SORA

Demos

- Introduction to Data-Juicer [ModelScope] [HuggingFace]
- Data Visualization:
  - Basic Statistics [ModelScope] [HuggingFace]
  - Lexical Diversity [ModelScope] [HuggingFace]
  - Operator Insight (Single OP) [ModelScope] [HuggingFace]
  - Operator Effect (Multiple OPs) [ModelScope] [HuggingFace]
- Data Processing:
  - Scientific Literature (e.g. arXiv) [ModelScope] [HuggingFace]
  - Programming Code (e.g. TheStack) [ModelScope] [HuggingFace]
  - Chinese Instruction Data (e.g. Alpaca-CoT) [ModelScope] [HuggingFace]
- Tool Pool:
  - Dataset Splitting by Language [ModelScope] [HuggingFace]
  - Quality Classifier for CommonCrawl [ModelScope] [HuggingFace]
  - Auto Evaluation on HELM [ModelScope] [HuggingFace]
  - Data Sampling and Mixture [ModelScope] [HuggingFace]
- Data Processing Loop [ModelScope] [HuggingFace]

Prerequisites

- Python >= 3.8, <= 3.10 (recommended)

- gcc >= 5 (at least C++14 support)

Installation

From Source

- Run the following commands to install the latest basic `data_juicer` version in editable mode:

```shell
cd <path_to_data_juicer>
pip install -v -e .
```

- Some OPs rely on third-party libraries that are very large or have limited platform compatibility. You can install optional dependencies as needed:

```shell
cd <path_to_data_juicer>
pip install -v -e .          # install minimal dependencies, which support the basic functions
pip install -v -e .[tools]   # install a subset of tools dependencies
```

The dependency options are listed below:

| Tag | Description |
|---|---|
| `.` or `.[mini]` | Install minimal dependencies for basic Data-Juicer. |
| `.[all]` | Install all optional dependencies (including minimal dependencies and all of the following). |
| `.[sci]` | Install all dependencies for all OPs. |
| `.[dist]` | Install dependencies for distributed data processing. (Experimental) |
| `.[dev]` | Install dependencies for developing the package as contributors. |
| `.[tools]` | Install dependencies for dedicated tools, such as quality classifiers. |

Using pip

- Run the following command to install the latest released `data_juicer` using `pip`:

```shell
pip install py-data-juicer
```

- Note:
  - Only the basic APIs in `data_juicer` and two basic tools (data processing and analysis) are available this way. If you want customizable and complete functionality, we recommend installing `data_juicer` from source.
  - The release versions on PyPI lag somewhat behind the latest version from source, so if you want the latest features of `data_juicer`, we recommend installing from source.

Using Docker

- Either pull our pre-built image from DockerHub:

```shell
docker pull datajuicer/data-juicer:<version_tag>
```

- Or build the Docker image, including the latest `data-juicer`, with the provided Dockerfile:

```shell
docker build -t datajuicer/data-juicer:<version_tag> .
```

Installation check

```python
import data_juicer as dj

print(dj.__version__)
```

Quick Start

Data Processing

- Run the `process_data.py` tool or the `dj-process` command line tool with your config as the argument to process your dataset.

```shell
# only for installation from source
python tools/process_data.py --config configs/demo/process.yaml

# use command line tool
dj-process --config configs/demo/process.yaml
```

- Note: For operators that involve third-party models or resources not stored locally on your computer, the first run might be slow because these ops need to download the corresponding resources into a directory first. The default download cache directory is `~/.cache/data_juicer`. You can change the cache location by setting the shell environment variable `DATA_JUICER_CACHE_HOME` to another directory, and you can change `DATA_JUICER_MODELS_CACHE` or `DATA_JUICER_ASSETS_CACHE` in the same way:

```shell
# cache home
export DATA_JUICER_CACHE_HOME="/path/to/another/directory"
# cache models
export DATA_JUICER_MODELS_CACHE="/path/to/another/directory/models"
# cache assets
export DATA_JUICER_ASSETS_CACHE="/path/to/another/directory/assets"
```

Distributed Data Processing

We have now implemented multi-machine distributed data processing based on Ray. The corresponding demos can be run using the following commands:

```shell
# Run text data processing
python tools/process_data.py --config ./demos/process_on_ray/configs/demo.yaml

# Run video data processing
python tools/process_data.py --config ./demos/process_video_on_ray/configs/demo.yaml
```

- To run multimodal data processing across multiple machines, ensure that all distributed nodes can access the corresponding data paths (for example, by mounting them on a shared file system such as NAS).
- Users can also opt not to use Ray and instead split the dataset to run on a cluster with Slurm/DLC.
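If you take the Slurm/DLC route, the dataset first has to be cut into independent parts, one per job. The sketch below shows one way to do that for a jsonl dataset using only the standard library: it assigns samples round-robin, and the `split_jsonl` helper and the `part-00000.jsonl` naming scheme are hypothetical choices, not something Data-Juicer prescribes.

```python
import os
from typing import List


def split_jsonl(input_path: str, output_dir: str, num_shards: int) -> List[str]:
    """Split a jsonl file into `num_shards` files, assigning samples round-robin.

    Blank lines are skipped; every other line is copied unchanged, so each
    shard is itself a valid jsonl file.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be >= 1")
    os.makedirs(output_dir, exist_ok=True)
    # Hypothetical naming scheme: part-00000.jsonl, part-00001.jsonl, ...
    shard_paths = [
        os.path.join(output_dir, f"part-{i:05d}.jsonl") for i in range(num_shards)
    ]
    writers = [open(path, "w", encoding="utf-8") for path in shard_paths]
    try:
        with open(input_path, encoding="utf-8") as src:
            sample_idx = 0
            for line in src:
                if not line.strip():
                    continue  # skip blank lines
                if not line.endswith("\n"):
                    line += "\n"  # the last line of a file may lack a newline
                writers[sample_idx % num_shards].write(line)
                sample_idx += 1
    finally:
        for writer in writers:
            writer.close()
    return shard_paths
```

Each shard could then be processed by its own job, for example by pointing the input dataset path of a per-job copy of your config at that shard.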

Data Analysis

- Run the `analyze_data.py` tool or the `dj-analyze` command line tool with your config as the argument to analyze your dataset.

```shell
# only for installation from source
python tools/analyze_data.py --config configs/demo/analyser.yaml

# use command line tool
dj-analyze --config configs/demo/analyser.yaml
```

- Note: The analyzer only computes stats of Filter ops, so any extra Mapper or Deduplicator ops are ignored during analysis.
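One way to picture why only Filter ops show up in the analysis: a filter can be split into computing a per-sample statistic and then deciding whether to keep the sample based on it, and analysis only needs the first step. The toy model below illustrates that idea in plain Python; `ToyTextLengthFilter`, `analyze`, `process`, and the `text_len` stat are hypothetical names for illustration and are not the Data-Juicer OP API.

```python
from typing import Dict, List


class ToyTextLengthFilter:
    """Hypothetical filter: keep samples whose text length is in [min_len, max_len]."""

    def __init__(self, min_len: int = 10, max_len: int = 1000):
        self.min_len = min_len
        self.max_len = max_len

    def compute_stats(self, sample: Dict) -> Dict:
        # Step 1: attach a statistic to a copy of the sample; this is what analysis reports.
        stats = dict(sample.get("stats", {}))
        stats["text_len"] = len(sample["text"])
        return {**sample, "stats": stats}

    def keep(self, sample: Dict) -> bool:
        # Step 2: decide whether to keep the sample, using only the precomputed stat.
        return self.min_len <= sample["stats"]["text_len"] <= self.max_len


def analyze(samples: List[Dict], filters: List[ToyTextLengthFilter]) -> List[Dict]:
    """Compute every filter's stats without dropping any sample."""
    for f in filters:
        samples = [f.compute_stats(s) for s in samples]
    return [s["stats"] for s in samples]


def process(samples: List[Dict], filters: List[ToyTextLengthFilter]) -> List[Dict]:
    """Compute each filter's stats, then drop samples that fail it."""
    for f in filters:
        with_stats = [f.compute_stats(s) for s in samples]
        samples = [s for s in with_stats if f.keep(s)]
    return samples
```

In this toy, a mapper-like step that only rewrites text would produce no statistic, so there would be nothing for `analyze` to report about it.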

Data Visualization

- Run the `app.py` tool to visualize your dataset in your browser.
- Note: only available for installation from source.

```shell
streamlit run app.py
```

Build Up Config Files

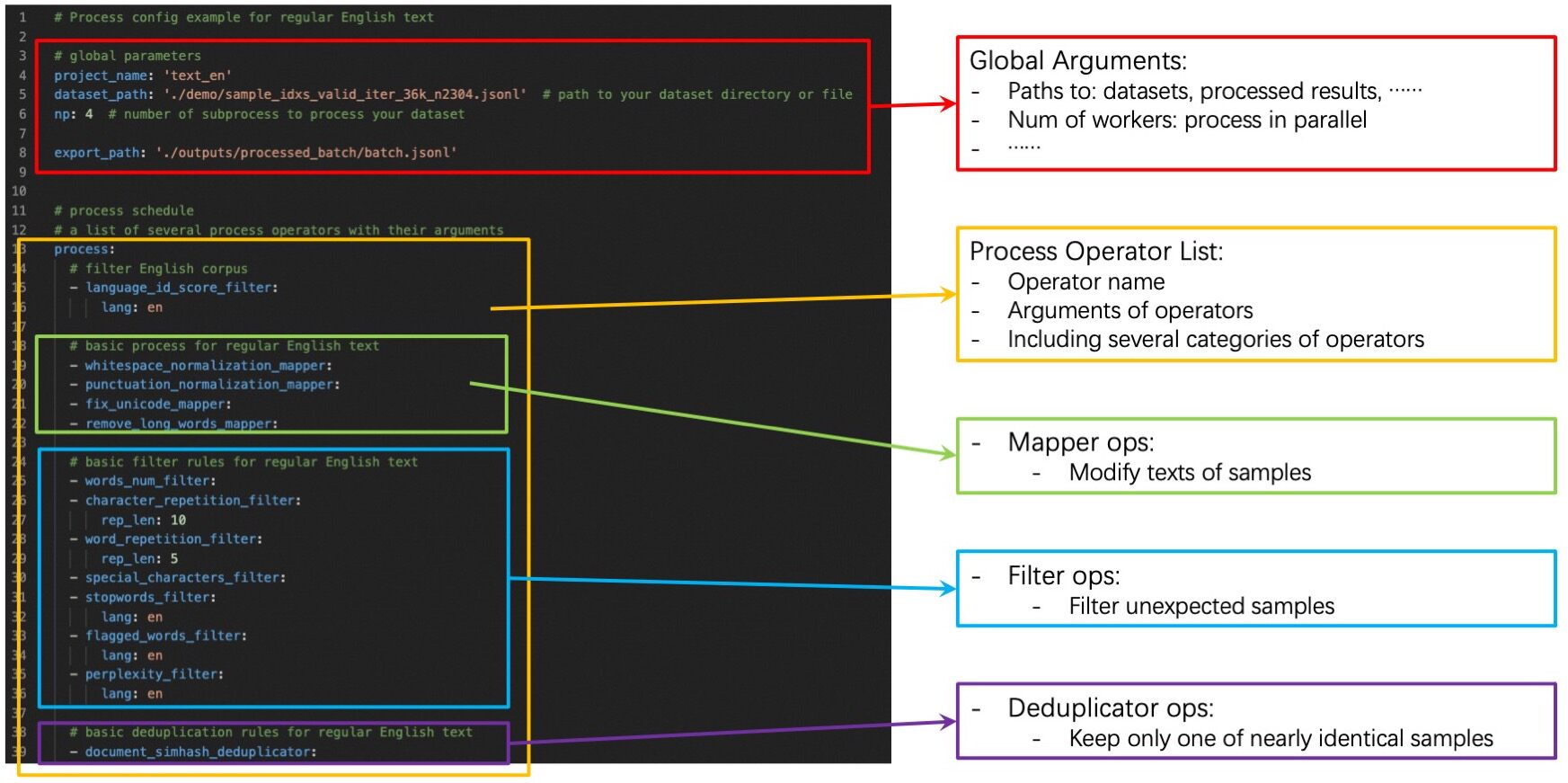

- Config files specify some global arguments and an operator list for data processing. You need to set:
  - Global arguments: input/output dataset path, number of workers, etc.
  - Operator list: the operators, with their arguments, used to process the dataset.
- You can build up your own config files by:
  - ➖: Modifying our example config file `config_all.yaml`, which includes all ops and their default arguments. You just need to remove the ops you won't use and refine some of the ops' arguments.
  - ➕: Building your own config files from scratch. You can refer to our example config file `config_all.yaml`, the op documents, and the advanced Build-Up Guide for developers.
- Besides the yaml files, you also have the flexibility to specify just one (or several) parameters on the command line, which will override the values in the yaml files.

```shell
python xxx.py --config configs/demo/process.yaml --language_id_score_filter.lang=en
```

In short, the basic config format is a set of global arguments plus the operator list.
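To make that structure concrete, the sketch below models a config as a plain Python dict: a few global arguments plus a `process` list in which each entry maps one OP name to its arguments. It also shows one plausible reading of a dotted command-line override such as `--language_id_score_filter.lang=en`. This is an illustrative assumption, not Data-Juicer's actual argument parser; the key names, paths, and OP arguments are examples, and `apply_override` is a hypothetical helper.

```python
import copy
from typing import Any, Dict

# Global arguments plus an operator list, mirroring the YAML layout (example values).
CONFIG: Dict[str, Any] = {
    "project_name": "demo-process",
    "dataset_path": "/path/to/your/dataset.jsonl",
    "np": 4,  # number of worker processes
    "export_path": "/path/to/processed/result.jsonl",
    "process": [
        {"language_id_score_filter": {"lang": "zh", "min_score": 0.8}},
        {"text_length_filter": {"min_len": 10, "max_len": 10000}},
    ],
}


def apply_override(config: Dict[str, Any], key: str, value: Any) -> Dict[str, Any]:
    """Return a copy of `config` with `key` overridden.

    `key` is either a global argument (e.g. "np") or "<op_name>.<arg>"
    (e.g. "language_id_score_filter.lang").
    """
    new_config = copy.deepcopy(config)  # never mutate the caller's config
    if "." not in key:
        new_config[key] = value
        return new_config
    op_name, arg = key.split(".", 1)
    matched = False
    for op in new_config["process"]:
        if op_name in op:
            op[op_name][arg] = value
            matched = True
    if not matched:
        raise KeyError(f"operator {op_name!r} not found in process list")
    return new_config
```

For instance, `apply_override(CONFIG, "language_id_score_filter.lang", "en")` returns a copy in which that filter's `lang` is `"en"`, while the rest of the config, including the original dict, is left unchanged.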

Preprocess Raw Data (Optional)

- Our formatters currently support some common input dataset formats:
  - Multiple samples in one file: jsonl/json, parquet, csv/tsv, etc.
  - A single sample in one file: txt, code, docx, pdf, etc.
- However, data from different sources are complicated and diverse. For example:
  - Raw arXiv data downloaded from S3 include thousands of tar files with even more gzip files inside them, and the expected tex files are embedded in those gzip files, so they are hard to obtain directly.
  - Some crawled data include different kinds of files (pdf, html, docx, etc.), and extra information such as tables and charts is hard to extract.
- It's impossible to handle all kinds of data in Data-Juicer; issues/PRs adding support for new data types are welcome!
- Thus, we provide some common preprocessing tools in `tools/preprocess` for you to preprocess such data.
  - You are welcome to contribute new preprocessing tools for the community.
  - We highly recommend preprocessing complicated data into jsonl or parquet files.
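As a rough illustration of that recommendation, the sketch below converts a directory of single-sample text files (plain `.txt` or gzip-compressed `.txt.gz`) into one jsonl file with one JSON object per line. It assumes the sample content belongs under a `text` key and records the source file under a hypothetical `meta` key; adjust both to whatever your config expects. It is a standalone example, not one of the tools in `tools/preprocess`.

```python
import gzip
import json
import os
from typing import Iterator, Tuple


def iter_text_files(root: str) -> Iterator[Tuple[str, str]]:
    """Yield (relative_path, content) for every .txt / .txt.gz file under root."""
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # visit subdirectories in a deterministic order
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            if name.endswith(".txt.gz"):
                with gzip.open(path, "rt", encoding="utf-8", errors="replace") as f:
                    content = f.read()
            elif name.endswith(".txt"):
                with open(path, encoding="utf-8", errors="replace") as f:
                    content = f.read()
            else:
                continue  # skip other file types (pdf, html, ...)
            yield os.path.relpath(path, root), content


def to_jsonl(root: str, output_path: str) -> int:
    """Write one JSON object per text file to output_path; return the sample count."""
    count = 0
    with open(output_path, "w", encoding="utf-8") as out:
        for rel_path, content in iter_text_files(root):
            record = {"text": content, "meta": {"source_file": rel_path}}
            out.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```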

For Docker Users

- If you build or pull the Docker image of `data-juicer`, you can run the commands or tools mentioned above using this image.
- Run directly:

```shell
# run the data processing directly
#   --rm: remove the container after processing
#   --name: name of the container
#   first -v: mount the data or config directory into the container
#   second -v: mount the cache directory to reuse caches and models (recommended)
docker run --rm \
    --name dj \
    -v <host_data_path>:<image_data_path> \
    -v ~/.cache/:/root/.cache/ \
    datajuicer/data-juicer:<version_tag> \
    dj-process --config /path/to/config.yaml  # or other data processing commands
```

- Or enter the running container and run commands in editable mode:

```shell
# start the container in the background (-dit)
docker run -dit \
    --rm \
    --name dj \
    -v <host_data_path>:<image_data_path> \
    -v ~/.cache/:/root/.cache/ \
    datajuicer/data-juicer:latest /bin/bash

# enter this container; then you can use data-juicer in editable mode
docker exec -it <container_id> bash
```

Data Recipes

- Recipes for data processing in BLOOM

- Recipes for data processing in RedPajama

- Refined recipes for pre-training text data

- Refined recipes for fine-tuning text data

- Refined recipes for pre-training multi-modal data

License

Data-Juicer is released under Apache License 2.0.

Contributing

We are in a rapidly developing field and greatly welcome contributions of new features, bug fixes, and better documentation. Please refer to the How-to Guide for Developers.

If you have any questions, please join our discussion groups.

Acknowledgement

Data-Juicer is used across various LLM products and research initiatives, including industrial LLMs from Alibaba Cloud's Tongyi, such as Dianjin for financial analysis and Zhiwen as a reading assistant, as well as Alibaba Cloud's Platform for AI (PAI). We look forward to hearing about your experience, suggestions, and ideas for collaboration!

Data-Juicer thanks and refers to several community projects, such as Huggingface-Datasets, Bloom, RedPajama, Pile, Alpaca-Cot, Megatron-LM, DeepSpeed, Arrow, Ray, Beam, LM-Harness, HELM, ....

References

If you find our work useful for your research or development, please kindly cite the following paper.

```bibtex
@inproceedings{chen2024datajuicer,
  title={Data-Juicer: A One-Stop Data Processing System for Large Language Models},
  author={Daoyuan Chen and Yilun Huang and Zhijian Ma and Hesen Chen and Xuchen Pan and Ce Ge and Dawei Gao and Yuexiang Xie and Zhaoyang Liu and Jinyang Gao and Yaliang Li and Bolin Ding and Jingren Zhou},
  booktitle={International Conference on Management of Data},
  year={2024}
}
```