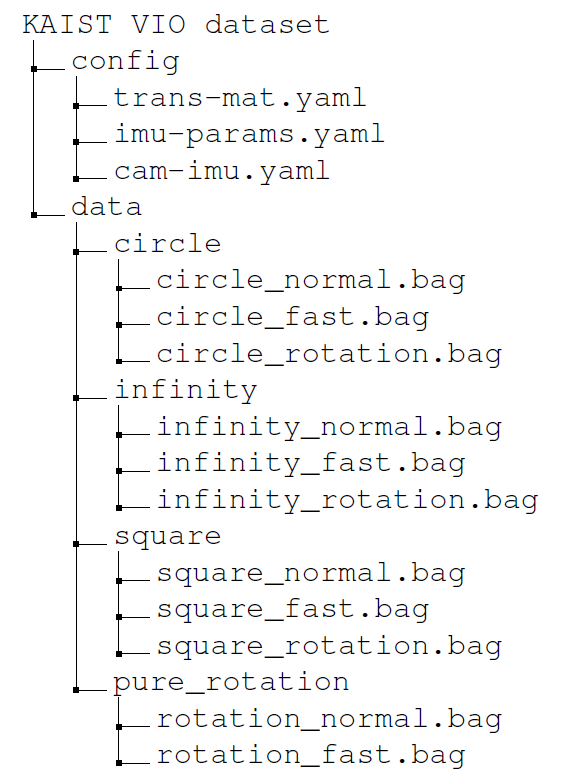

# KAIST VIO dataset (RA-L'21 w/ ICRA Option)

Official page of "Run Your Visual-Inertial Odometry on NVIDIA Jetson: Benchmark Tests on a Micro Aerial Vehicle", which was accepted to RA-L with the ICRA'21 option.

[Video](https://www.youtube.com/watch?v=nZzgyhNimLI)

[arXiv](https://arxiv.org/abs/2103.01655)

[IEEE Xplore](https://ieeexplore.ieee.org/abstract/document/9416140)

This dataset, acquired on a UAV, is intended for testing the robustness of various VO/VIO methods.

You can download the whole dataset from [KAIST VIO dataset](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/kaist_vio_dataset.zip).

## Trajectories

## Downloads

You can download a single ROS bag file from the links below (or the whole dataset from [KAIST VIO dataset](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/kaist_vio_dataset.zip)).

| Trajectory | Type | ROS bag download |
| :---: | :--- | :---: |
| **circle** | normal | [circle.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/circle/circle.bag) |
| **circle** | fast | [circle_fast.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/circle/circle_fast.bag) |
| **circle** | rotation | [circle_head.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/circle/circle_head.bag) |
| **infinity** | normal | [infinite.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/infinite/infinite.bag) |
| **infinity** | fast | [infinite_fast.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/infinite/infinite_fast.bag) |
| **infinity** | rotation | [infinite_head.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/infinite/infinite_head.bag) |
| **square** | normal | [square.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/square/square.bag) |
| **square** | fast | [square_fast.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/square/square_fast.bag) |
| **square** | rotation | [square_head.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/square/square_head.bag) |
| **rotation** | normal | [rotation.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/rotation/rotation.bag) |
| **rotation** | fast | [rotation_fast.bag](https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset/rotation/rotation_fast.bag) |
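The bag URLs in the table follow a regular pattern: the "infinity" trajectory lives under `infinite/`, and the "rotation" type uses a `_head` suffix. The helper below is a minimal sketch that rebuilds those URLs, assuming the pattern holds for every listed bag. The names `bag_url` and `download` are hypothetical helpers, not part of the dataset tooling.

```python
"""Hypothetical helpers that rebuild the KAIST VIO bag URLs from the table."""
from urllib.request import urlretrieve  # used only by download()

BASE_URL = "https://urserver.kaist.ac.kr/publicdata/KAIST_VIO_Dataset"

# Trajectory name in the table -> directory and file prefix on the server.
# Note: the "infinity" trajectory is stored under "infinite".
PREFIXES = {
    "circle": "circle",
    "infinity": "infinite",
    "square": "square",
    "rotation": "rotation",
}

# Type in the table -> file-name suffix ("rotation" type bags end in "_head").
SUFFIXES = {"normal": "", "fast": "_fast", "rotation": "_head"}


def bag_url(trajectory: str, kind: str = "normal") -> str:
    """Return the URL of one bag file, e.g. bag_url("square", "fast")."""
    if trajectory == "rotation" and kind == "rotation":
        raise ValueError("the rotation trajectory only has normal and fast bags")
    prefix = PREFIXES[trajectory]
    return f"{BASE_URL}/{prefix}/{prefix}{SUFFIXES[kind]}.bag"


def download(trajectory: str, kind: str = "normal") -> str:
    """Download one bag into the current directory and return its file name."""
    url = bag_url(trajectory, kind)
    filename = url.rsplit("/", 1)[-1]
    urlretrieve(url, filename)
    return filename


if __name__ == "__main__":
    # Only print the URLs; call download() explicitly, since the bags are large.
    for traj in PREFIXES:
        for kind in SUFFIXES:
            if traj == "rotation" and kind == "rotation":
                continue
            print(bag_url(traj, kind))
```

Printing the URLs rather than fetching them keeps the script cheap to run; pass any of them to `download()` (or to `wget`) when you actually need a bag.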

## Dataset format

(The offset has already been applied to the bag data; this YAML file contains the estimated offset values for reference only. To benchmark your VO/VIO method more accurately, you can use your own alignment method with other tools, such as origin alignment or Umeyama alignment from [evo](https://github.com/MichaelGrupp/evo).)

+ imu-params.yaml: estimated noise parameters of the Pixhawk 4 mini
+ cam-imu.yaml: camera intrinsics and camera-IMU extrinsics in kalibr format
+ Publishing the ground truth as a trajectory ROS topic
  + The ground truth is recorded as `geometry_msgs/PoseStamped`.
  + For visualization (e.g., in RViz), you may want a `nav_msgs/Path` rather than individual poses.
  + For the `geometry_msgs/PoseStamped` to `nav_msgs/Path` conversion, you can refer to this package: [tf_to_trajectory](https://github.com/engcang/tf_to_trajectory#execution)
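evo already performs this alignment for you (for example via the `--align` and `--correct_scale` options of `evo_ape`). The following is only a minimal NumPy sketch of what Umeyama alignment computes. It assumes that the estimated and ground-truth positions have already been associated by timestamp. `umeyama_alignment` and `ate_rmse` are hypothetical names, not evo's API.

```python
import numpy as np


def umeyama_alignment(est: np.ndarray, gt: np.ndarray, with_scale: bool = False):
    """Find R, t, s minimizing sum_i ||gt_i - (s * R @ est_i + t)||^2.

    est, gt: (N, 3) arrays of time-associated positions.
    """
    if est.shape != gt.shape or est.ndim != 2 or est.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of equal shape")
    n = est.shape[0]
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    est_c, gt_c = est - mu_est, gt - mu_gt

    # Cross-covariance between the centered point sets and its SVD.
    cov = gt_c.T @ est_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0
    R = U @ S @ Vt

    # Optional scale, relevant for monocular VO without metric scale.
    if with_scale:
        var_est = (est_c ** 2).sum() / n
        s = float(np.trace(np.diag(D) @ S) / var_est)
    else:
        s = 1.0
    t = mu_gt - s * R @ mu_est
    return R, t, s


def ate_rmse(est: np.ndarray, gt: np.ndarray, R, t, s) -> float:
    """Absolute trajectory error (RMSE of positions) after alignment."""
    aligned = s * est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((gt - aligned) ** 2, axis=1))))
```

For a stereo or visual-inertial method, metric scale is observable, so `with_scale=False` is the usual choice. Scale correction mainly matters for monocular VO.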

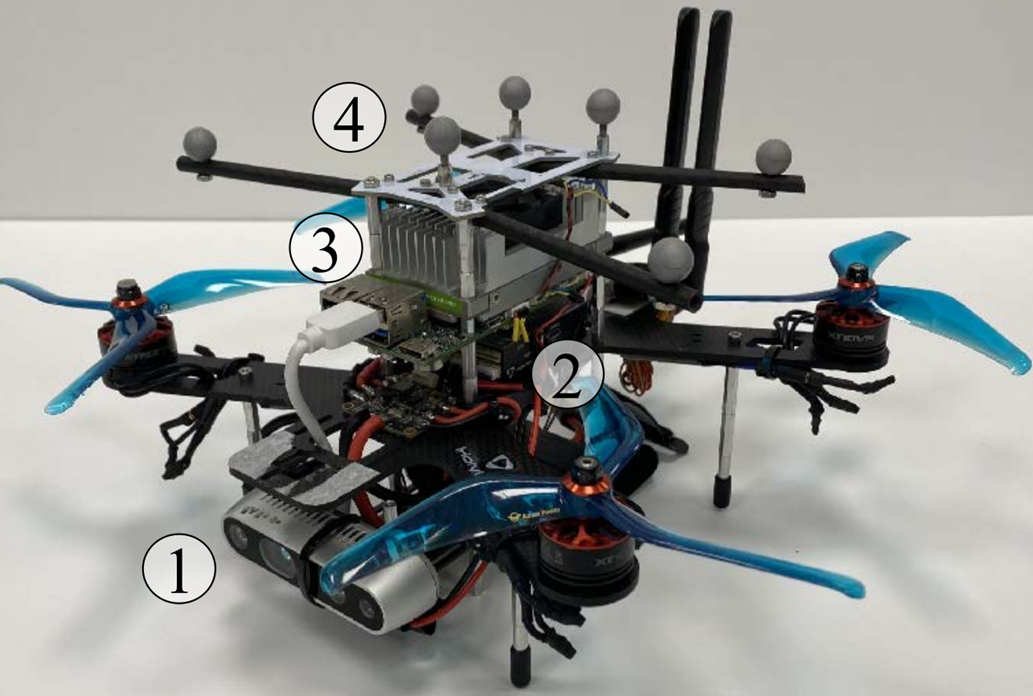

## Setup

#### - Hardware

Fig. 1: Lab environment. Fig. 2: UAV platform.

+ **VI sensor unit**
  + Camera: Intel RealSense D435i (640x480 for infra 1, 2 & RGB images)
  + IMU: Pixhawk 4 mini
  + The VI sensor unit was calibrated using [kalibr](https://github.com/ethz-asl/kalibr).
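The calibration result, `cam-imu.yaml`, follows kalibr's camchain format. In that format, `T_cam_imu` maps points from the IMU frame into the camera frame and `intrinsics` is `[fu, fv, pu, pv]`. The sketch below shows how those two entries are typically combined to project a point into the 640x480 image. The numeric values in `CAM0` are hypothetical placeholders, not this dataset's calibration. `project_imu_point` is a hypothetical helper. Lens distortion (`distortion_coeffs`) is ignored for brevity.

```python
import numpy as np

# Hypothetical placeholder values: copy the cam0 entries from cam-imu.yaml instead.
CAM0 = {
    "T_cam_imu": [[1.0, 0.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]],
    "intrinsics": [380.0, 380.0, 320.0, 240.0],  # fu, fv, pu, pv (pinhole)
    "resolution": [640, 480],                    # width, height
}


def project_imu_point(p_imu, cam=CAM0):
    """Project a 3D point given in the IMU frame to pixel (u, v), or None.

    Uses an undistorted pinhole model; kalibr's distortion terms are ignored.
    """
    T = np.asarray(cam["T_cam_imu"], dtype=float)
    # Transform IMU-frame point into the camera frame: p_cam = R * p_imu + t.
    p_cam = T[:3, :3] @ np.asarray(p_imu, dtype=float) + T[:3, 3]
    if p_cam[2] <= 0.0:
        return None  # point is behind the camera
    fu, fv, pu, pv = cam["intrinsics"]
    u = fu * p_cam[0] / p_cam[2] + pu
    v = fv * p_cam[1] / p_cam[2] + pv
    width, height = cam["resolution"]
    if not (0.0 <= u < width and 0.0 <= v < height):
        return None  # projects outside the image
    return float(u), float(v)
```

A quick sanity check after loading the real calibration is to project a point on the camera's optical axis. With the placeholder identity extrinsics, it lands on the principal point `(pu, pv)`.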

+ **Ground truth**
  + An OptiTrack PrimeX 13 motion capture system with six cameras was used, providing 6-DOF motion information.
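evo can read `PoseStamped` topics from bags directly. Another convenient option is to export the 6-DOF ground truth to the plain-text TUM format (`timestamp tx ty tz qx qy qz qw`), which most trajectory tools accept. Below is a minimal pure-Python sketch of that export. `to_tum_lines` is a hypothetical helper. The commented `rosbag` snippet assumes ROS 1 is installed, and `GT_TOPIC` is a hypothetical placeholder: check `rosbag info` for the actual ground-truth topic name.

```python
from typing import Iterable, List, Sequence, Tuple

# (stamp in seconds, (x, y, z), (qx, qy, qz, qw)): the fields of a PoseStamped.
Pose = Tuple[float, Sequence[float], Sequence[float]]


def to_tum_lines(poses: Iterable[Pose]) -> List[str]:
    """Format poses as TUM trajectory lines, normalizing each quaternion."""
    lines = []
    for stamp, pos, quat in poses:
        norm = sum(c * c for c in quat) ** 0.5
        if norm == 0.0:
            raise ValueError(f"zero quaternion at t={stamp}")
        qx, qy, qz, qw = (c / norm for c in quat)
        lines.append(
            f"{stamp:.9f} {pos[0]:.6f} {pos[1]:.6f} {pos[2]:.6f} "
            f"{qx:.6f} {qy:.6f} {qz:.6f} {qw:.6f}"
        )
    return lines


# With ROS 1 available, the tuples could be filled from a bag, for example:
#   import rosbag
#   poses = []
#   with rosbag.Bag("circle.bag") as bag:
#       for _, msg, _ in bag.read_messages(topics=[GT_TOPIC]):  # GT_TOPIC: hypothetical
#           p, q = msg.pose.position, msg.pose.orientation
#           poses.append((msg.header.stamp.to_sec(), (p.x, p.y, p.z), (q.x, q.y, q.z, q.w)))
#   with open("gt_tum.txt", "w") as f:
#       f.write("\n".join(to_tum_lines(poses)) + "\n")
```

The resulting file can then be compared against your estimate with, e.g., `evo_ape tum gt_tum.txt est_tum.txt --align`.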

#### - Software (VO/VIO Algorithms)

How to set up each (publicly available) algorithm on the Jetson board:

| VO/VIO | Setup link |
| :---: | :---: |
| **VINS-Mono** | [link](https://github.com/zinuok/VINS-MONO) |
| **ROVIO** | [link](https://github.com/zinuok/Rovio) |
| **VINS-Fusion** | [link](https://github.com/zinuok/VINS-Fusion) |
| **Stereo-MSCKF** | [link](https://github.com/zinuok/MSCKF_VIO) |
| **Kimera** | [link](https://github.com/zinuok/Kimera-VIO-ROS) |

## Citation

If you use this dataset in an academic context, please cite the following publication:

```
@article{jeon2021run,
  title={Run your visual-inertial odometry on NVIDIA Jetson: Benchmark tests on a micro aerial vehicle},
  author={Jeon, Jinwoo and Jung, Sungwook and Lee, Eungchang and Choi, Duckyu and Myung, Hyun},
  journal={IEEE Robotics and Automation Letters},
  volume={6},
  number={3},
  pages={5332--5339},
  year={2021},
  publisher={IEEE}
}
```

## License

These datasets are released under the Creative Commons license (CC BY-NC-SA 3.0), which permits free non-commercial use (including research).